A dangerous alliance: the new Dark Web + AI marketplace

In a world where Artificial Intelligence is reshaping entire industries and the way we work, its most unsettling impact is happening far from the public eye: within the Dark Web ecosystem.

What was once an anonymous space where information was shared and sold in underground forums, alongside certain illicit activities, has now become a highly specialized marketplace, equipped with all kinds of AI tools that automate attacks and make the criminal market more sophisticated.

AI has transformed the cybercrime landscape.

In just one year, mentions and sales of AI-powered tools on the Dark Web have surged by over 200%. Identity fraud scams have quadrupled, and 73% of companies worldwide have experienced some form of AI-related data breach. What once required advanced technical expertise can now be done with simple natural language prompts: just pay a monthly subscription!

Could you tell the difference between your boss’s real voice and an AI-cloned version asking for urgent access to critical systems?

However, it's important to note that, according to the Adversarial Misuse of Generative AI report by Google Threat Intelligence, AI has not yet been used to develop entirely new cyberattack capabilities. Instead, it has mainly been used to increase productivity and automate basic tasks.

The role of AI in the black market

The biggest shift in the ecosystem has been the integration of AI to automate and scale different types of fraud:

- Advanced identity impersonation. Language models similar to ChatGPT are now used to craft hyper-realistic phishing messages, personalized using real data gathered from social networks and major data leaks. What once required advanced social engineering skills is now accessible to virtually anyone, thanks to tools like FraudGPT, enabling scammers to generate malicious code and orchestrate convincing fraud campaigns with no prior knowledge.

This "Phishing as a Service" model is subscription-based. Think Netflix—but for illegal purposes.

- Financial deepfakes. AI has vastly improved the creation of synthetic video and audio. By cloning voices and faces of senior executives, attackers can produce convincing media used to authorize multimillion-dollar transfers or manipulate critical decisions.

The problem goes beyond audiovisual forgeries: AI-generated forgery of legal documents, including signatures, is a rapidly growing trend and is openly traded.

- Automated attacks. Tools based on open-source language models, dubbed “Generative AI malware”, such as WormGPT, DarkBERT, or EvilGPT, can learn and modify their malicious code using AI, evading traditional antivirus detection. In 2025, this trend evolved with the rise of Xanthorox, a platform that goes beyond simple variants of existing language models. It is a system built from scratch with a modular architecture of specialized AI models, hosted on private servers. Its capabilities include automated malicious code generation, vulnerability exploitation, and voice-command attack execution.

Its ability to operate offline and to run real-time searches across more than 50 search engines makes it an extremely autonomous and dangerous tool.

- AI bots on messaging platforms, the new face of mass fraud. In easily accessible spaces such as Telegram channels, AI powers bots that support large-scale illicit activity, from selling illegal access credentials and card cloning to forging documents or simulating tech support chats to trick victims.

The alarming part is how easily these sophisticated attacks are launched through everyday, accessible interfaces.

A single bot can process 36,000 transactions per second — matching the traffic volumes of leading e-commerce platforms on Black Friday.

Market distribution: AI as a black-market commodity

The distribution of “malicious” tools on the Dark Web is becoming increasingly sophisticated, inspired by traditional e-commerce models.

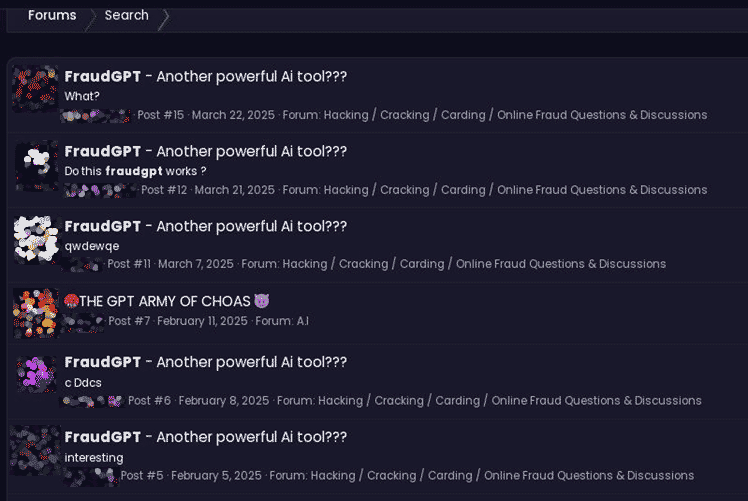

- Underground forums, the cybercriminals’ university: According to a report by Kaspersky, over 3,000 posts were detected in which cybercriminals discussed how to modify large language models (LLMs) for malicious use. They often share AI scripts, examples, and step-by-step guides.

- Subscription-based marketplaces: Tools like FraudGPT are rented for €170 per month or €1,500 per year. Some all-in-one kits exceed €4,000 and include tech support and updates.

- Stolen accounts: Premium access to AI platforms like ChatGPT is sold for €8 to €500, depending on usage limits. Automated services can generate up to 1,000 fake accounts per day using stolen personal data.

- Covert advertising: Bots on platforms like Telegram and Discord promote these tools with trial messages, making them easily accessible to anyone.
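The FraudGPT prices quoted above follow a familiar SaaS pattern: paying monthly for a full year costs noticeably more than the annual plan. Working through the figures (in euros):

$$
12 \times 170 = 2040, \qquad 2040 - 1500 = 540, \qquad \frac{540}{2040} \approx 0.265
$$

In other words, the annual plan is roughly 26.5% cheaper than twelve monthly payments, or about €125 per month.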

Intermediaries and specialists: crime gets organized too

The underground market isn’t pure chaos—it’s structured. The so-called Initial Access Brokers (IABs) are intermediaries or groups specialized in infiltrating corporate networks, then selling access to ransomware groups. They have even improved their methods using AI to clean, validate, and classify stolen databases, ensuring that the data they sell is actually useful.

In the Ransomware as a Service (RaaS) model, roles are clearly defined and highly professionalized. Access can be sold for anywhere between €400 and €10,000, depending on the target’s size and value.

Meanwhile, the trade of compromised credentials has become an extremely lucrative Dark Web market—and it’s still growing. Millions of user records, passwords, and email addresses are available for sale. For instance, in Spain alone, 33 leaks were detected in forums during the first quarter of 2025, affecting critical sectors such as government, transportation, energy, and industry.

Mapping the hidden market with OSINT

From a monitoring and analysis perspective, OSINT (open-source intelligence) techniques are key to systematically exploring Dark Web content and extracting as much useful intelligence as possible. The approach begins by tracking [.]onion domains through specialized search engines, followed by automated scanning in secure environments.
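A minimal sketch of one piece of that first step, assuming Tor v3 onion addresses: 56 base32 characters encoding a 32-byte public key, a 2-byte SHA3-256 checksum and a version byte of 3, as described in Tor's rend-spec-v3. It pulls candidate domains out of free text (search results, scraped pages), accepts the defanged `[.]onion` form used in this article, and keeps only addresses whose checksum verifies. The function names are hypothetical, and the code only parses text; it does not contact the Tor network.

```python
import base64
import hashlib
import re

# A v3 label is 56 base32 characters; also accept the defanged "[.]onion" form.
ONION_V3_RE = re.compile(r"\b([a-z2-7]{56})(?:\.|\[\.\])onion\b", re.IGNORECASE)
VERSION = b"\x03"


def _checksum(pubkey: bytes) -> bytes:
    # CHECKSUM = SHA3-256(".onion checksum" | PUBKEY | VERSION)[:2]
    return hashlib.sha3_256(b".onion checksum" + pubkey + VERSION).digest()[:2]


def make_v3_address(pubkey: bytes) -> str:
    """Hypothetical test helper: build a v3 address from a 32-byte public key."""
    if len(pubkey) != 32:
        raise ValueError("pubkey must be 32 bytes")
    label = base64.b32encode(pubkey + _checksum(pubkey) + VERSION).decode("ascii")
    return label.lower() + ".onion"


def is_valid_v3_onion(address: str) -> bool:
    """Check length, version byte and checksum of a v3 onion address."""
    address = address.lower()
    if not address.endswith(".onion"):
        return False
    label = address[: -len(".onion")]
    if len(label) != 56:
        return False
    try:
        raw = base64.b32decode(label.upper())
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    pubkey, checksum, version = raw[:32], raw[32:34], raw[34:]
    return version == VERSION and checksum == _checksum(pubkey)


def extract_onion_domains(text: str) -> set[str]:
    """Return the checksum-valid v3 onion domains mentioned in free text."""
    candidates = (m.group(1).lower() + ".onion" for m in ONION_V3_RE.finditer(text))
    return {c for c in candidates if is_valid_v3_onion(c)}
```

Validating the checksum before queuing a domain for scanning filters out typos and look-alike strings cheaply, without any network traffic.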

The primary goals include detecting stolen credentials, exposed vulnerabilities, data leaks, or active fraud campaigns. Systematic collection of this information enables not only real-time incident mapping but also the ability to anticipate threat actor movements and identify attack patterns.
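Detecting stolen credentials often comes down to checking collected leak files for an organization's own accounts. A minimal sketch, assuming a hypothetical plain-text dump with one `email:password` (or `email;password`) record per line: it reports which addresses under the monitored domains (subdomains included) appear in the dump, and deliberately discards the password field so the monitoring output does not itself become a credential store. The function name and the dump format are assumptions for illustration.

```python
import re
from collections import defaultdict

# Assumed dump format: each record starts with an email address, followed by
# ":" or ";" and the password. Only the leading address is ever read.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@([A-Za-z0-9.-]+\.[A-Za-z]{2,})")


def find_exposed_accounts(lines, monitored_domains):
    """Map each monitored domain to the sorted, de-duplicated addresses found.

    Subdomains count (vpn.corp.example belongs to corp.example). Passwords and
    any other fields are discarded, never stored.
    """
    monitored = {d.lower().strip(".") for d in monitored_domains}
    exposed = defaultdict(set)
    for line in lines:
        match = EMAIL_RE.match(line.strip())
        if match is None:
            continue  # not a recognizable record
        email, domain = match.group(0).lower(), match.group(1).lower()
        owner = next(
            (d for d in monitored if domain == d or domain.endswith("." + d)),
            None,
        )
        if owner is not None:
            exposed[owner].add(email)
    return {owner: sorted(emails) for owner, emails in exposed.items()}
```

The result can feed password-reset or notification workflows directly, since it lists affected accounts without reproducing the leaked secrets.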

At this stage, the application of AI could make a significant difference—using natural language processing (NLP) techniques to analyze large volumes of data from messaging channels, understand the context of conversations, and filter relevant mentions, even when written in different languages or specialized slang.
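As a deliberately simple stand-in for that filtering step (keyword matching, not NLP proper), the sketch below normalizes messages by stripping accents, case-folding, undoing basic leetspeak and collapsing whitespace, then matches them against a small multilingual watchlist. The watchlist entries and topic labels are hypothetical examples; a real pipeline would more likely add translation or multilingual language models to capture context that keyword matching cannot.

```python
import re
import unicodedata

# Hypothetical watchlist: surface forms in several languages/slang -> topic.
WATCHLIST = {
    "fraudgpt": "FraudGPT",
    "wormgpt": "WormGPT",
    "initial access": "initial access",
    "acceso inicial": "initial access",
    "combolist": "credential leak",
    "combo list": "credential leak",
    "deepfake": "deepfake",
}

# Simple character swaps used to slip past naive keyword filters.
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "@": "a", "$": "s"})


def normalize(text: str) -> str:
    """Strip accents, casefold, undo simple leetspeak and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", text)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return re.sub(r"\s+", " ", no_accents.casefold().translate(LEET))


# Match at a word start so plurals and suffixes ("deepfakes") still count.
PATTERNS = [(re.compile(r"\b" + re.escape(term)), topic) for term, topic in WATCHLIST.items()]


def flag_mentions(message: str) -> set[str]:
    """Return the watchlist topics mentioned in a message."""
    text = normalize(message)
    return {topic for pattern, topic in PATTERNS if pattern.search(text)}
```

For example, a Spanish-language post such as "Vendo ACCESO inicial" is flagged under the same "initial access" topic as its English equivalent, and "Fr4udGPT" is still recognized as FraudGPT.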

By cross-referencing data, we can correlate actors, understand their modus operandi, and generate early warnings.
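One way to cross-reference collected data, shown here as a sketch rather than a description of any specific tool, is to treat shared indicators (a contact handle, a cryptocurrency wallet, a PGP key) as links between aliases and group linked aliases with a union-find structure. The aliases and indicators below are invented, and a shared indicator is a lead, not proof: actors can reuse or deliberately plant each other's identifiers.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path halving."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def correlate_actors(observations):
    """Group aliases that share at least one indicator.

    observations: iterable of (alias, indicator) pairs, for example
    ("forumA:vendor", "jabber:someone@example.im"). Returns a sorted list of
    clusters, each a sorted list of aliases.
    """
    uf = UnionFind()
    first_alias_for = {}  # indicator -> first alias seen using it
    aliases = set()
    for alias, indicator in observations:
        aliases.add(alias)
        uf.find(alias)  # register singletons too
        if indicator in first_alias_for:
            uf.union(first_alias_for[indicator], alias)
        else:
            first_alias_for[indicator] = alias
    clusters = defaultdict(set)
    for alias in aliases:
        clusters[uf.find(alias)].add(alias)
    return sorted(sorted(c) for c in clusters.values())
```

Because links are transitive, an alias on one forum can end up grouped with a Telegram handle it never shares an indicator with directly, which is exactly the kind of modus operandi mapping described above, and also why each merge deserves analyst review.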

Protecting against digital cybercrime

It is essential to adopt a proactive approach that combines technology, education, and governance. For example, organizations must implement AI-based solutions to detect and respond to threats in real time, alongside continuous risk monitoring. Cyber intelligence can also play a key role by detecting attack patterns and suspicious activities within digital environments or the Dark Web.

In this context, investing in digital education is crucial: it’s no longer enough to recognize phishing emails. Users must now be trained to detect advanced scams such as deepfakes or cloned audio that mimics human conversation with surprising realism.

■ Be wary of “premium AI” deals on shady marketplaces. What seems like a bargain could be a trap!
