Detecting the undetectable: AI tools to identify AI-generated content

In just a few years, generative AI has gone from being a technological curiosity to something we use every day: assistants that write emails, chatbots whose conversations feel human. AI has radically changed how people work, write and think: what used to take hours can now be done in seconds with a well-crafted prompt. This democratisation of content creation has opened up countless possibilities.

But, as with every technological revolution, not everything is positive. The same ease that makes AI such a powerful tool also creates a problem: how can we tell whether a text was written by a human or by an AI?

How AI-generated content detectors work

This question has important practical implications. When content authenticity matters (for example, when verifying authorship in education), it is essential to ensure that the work is original. That’s why we need reliable tools. And this is where AI-generated content detectors come into play.

But how exactly do these tools work?

AI text detectors rest on something that may seem contradictory: they use AI to identify content created by other AIs. Paradoxical as it sounds, language models leave distinctive "digital fingerprints" in their texts, patterns that can be recognised and analysed.

AI-generated content detectors use artificial intelligence itself to unmask what other AIs have created.

Perplexity and burstiness: the keys to detecting AI text

To understand how they work, we need to explain two key concepts:

- The first is perplexity, which measures how predictable a text is to a language model. AI tends to choose likely words, producing predictable texts with low perplexity. Humans, on the other hand, are less predictable: we use unexpected turns of phrase or less obvious words, which is why our texts tend to show higher perplexity.

- The second is burstiness, or variability: when we write, we alternate between short and long sentences and between simple and complex ones, while AI tends to keep a fairly uniform rhythm.
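To make the two concepts concrete, here is a minimal Python sketch. It assumes the per-token log-probabilities have already been obtained from some language model (the values used below are made up), and it measures burstiness as the coefficient of variation of sentence lengths, which is only one possible proxy rather than a standard definition. The function names are illustrative.

```python
import math
import re
import statistics


def perplexity(token_log_probs: list[float]) -> float:
    """Perplexity from per-token natural-log probabilities.

    PPL = exp(-(1/N) * sum(log p_i)); lower means the text was more
    predictable to the model that supplied the probabilities.
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_neg_log_prob = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_neg_log_prob)


def burstiness(text: str) -> float:
    """Coefficient of variation (std / mean) of sentence lengths in words.

    Assumed proxy: 0.0 means every sentence has exactly the same length.
    """
    # Naive sentence split on ".", "!" and "?"; good enough for a sketch.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Hypothetical log-probabilities: four tokens, each with p = 0.5.
print(perplexity([math.log(0.5)] * 4))
print(burstiness("I like cats. I like dogs. I like birds."))
print(burstiness("Stop. This sentence is considerably longer than the first one."))
```

With four tokens that each had a probability of 0.5, `perplexity` returns 2: on average the model was choosing between two equally likely options. The three identical sentences give a burstiness of 0, while the short-then-long pair scores much higher. Real detectors compute these signals with their own models and calibrated thresholds.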

AI generates predictable texts with low perplexity, while humans write in a more varied and unpredictable way.

In terms of technology, these detectors use transformer-based models, the same technology that powers systems like GPT or Claude. The most common are fine-tuned versions of BERT, RoBERTa or DistilBERT, trained on millions of examples of both human and AI-generated text. They also analyse embeddings (numerical vector representations of text), since human and AI-generated texts tend to cluster in different regions of the vector space.
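The clustering idea can be illustrated with a deliberately simplified nearest-centroid classifier. This is a sketch under strong assumptions: the three-dimensional vectors are invented stand-ins for real embeddings (an encoder such as RoBERTa produces vectors with hundreds of dimensions), and production detectors typically train a classification layer on top of the model rather than comparing against centroids. All names are hypothetical.

```python
import math

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def centroid(vectors: list[Vector]) -> Vector:
    """Component-wise mean of a list of equally sized vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def classify(embedding: Vector, human_examples: list[Vector], ai_examples: list[Vector]) -> str:
    """Label an embedding by whichever class centroid it points closer to."""
    human_score = cosine_similarity(embedding, centroid(human_examples))
    ai_score = cosine_similarity(embedding, centroid(ai_examples))
    return "ai" if ai_score > human_score else "human"


# Invented 3-D "embeddings"; real ones would come from a transformer encoder.
HUMAN = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
AI = [[0.1, 0.9, 0.2], [0.0, 0.8, 0.3]]

print(classify([0.2, 0.85, 0.25], HUMAN, AI))
print(classify([0.85, 0.2, 0.05], HUMAN, AI))
```

The same comparison works for any number of dimensions; only the source of the vectors changes. When the two clusters overlap, as they increasingly do with newer generative models, the distances become less decisive.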

They also rely on complementary statistical techniques: repetition pattern analysis, vocabulary diversity measurement (humans tend to use a wider variety of words) and syntactic complexity analysis. Finally, there is watermarking: imperceptible statistical patterns that some AI systems embed in the text they generate. However, a watermark is only reliable if the text has not been substantially edited afterwards.
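Some of these statistical signals can be sketched in a few lines of Python. The type-token ratio and the repeated-trigram rate are simple, common proxies for vocabulary diversity and repetition. The watermark check follows the general idea of the published "green list" scheme (Kirchenbauer et al., 2023): a generator nudges its word choices towards pseudo-randomly selected "green" tokens, and a detector tests whether green tokens appear more often than chance. The tokenizer, hashing rule and function names here are hypothetical simplifications, not any vendor's actual watermark.

```python
import hashlib
import math
import re


def tokenize(text: str) -> list[str]:
    # Deliberately naive lowercase word tokenizer, for illustration only.
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(text: str) -> float:
    """Vocabulary diversity: distinct words divided by total words."""
    tokens = tokenize(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def repeated_trigram_rate(text: str) -> float:
    """Share of word trigrams that duplicate another trigram in the text."""
    tokens = tokenize(text)
    trigrams = list(zip(tokens, tokens[1:], tokens[2:]))
    if not trigrams:
        return 0.0
    return 1 - len(set(trigrams)) / len(trigrams)


def is_green(prev_token: str, token: str, gamma: float = 0.5) -> bool:
    """Toy green-list test: hash the (previous, current) token pair.

    A watermarking generator would bias sampling towards green tokens;
    here the hash alone decides membership, with probability ~gamma.
    """
    digest = hashlib.sha256(f"{prev_token}|{token}".encode()).digest()
    return digest[0] < 256 * gamma


def watermark_z_score(green: int, total: int, gamma: float = 0.5) -> float:
    """How many standard deviations the green count sits above chance."""
    expected = gamma * total
    std = math.sqrt(total * gamma * (1 - gamma))
    return (green - expected) / std


def detect_watermark(text: str, gamma: float = 0.5) -> float:
    """z-score for the fraction of green token pairs in a text."""
    tokens = tokenize(text)
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, tok, gamma) for prev, tok in pairs)
    return watermark_z_score(green, len(pairs), gamma)
```

For example, 75 green pairs out of 100 with `gamma = 0.5` gives a z-score of 5, far above what unwatermarked text would produce by chance. The sketch also shows why editing weakens watermarks: every replaced word changes the token pairs around it, and each changed pair is green only by chance, pulling the z-score back towards zero.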

Are AI text detectors really reliable?

None of these techniques is perfect. We are in a kind of technological race: as generative models become better and sound more human, they also become harder to detect. Hybrid texts (partially human-edited) complicate things significantly. And then there are false positives (human writers with very structured styles that resemble AI) and false negatives (AI texts that go undetected).
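False positives and false negatives pull against each other. Many detectors output a score, and the operator picks a threshold above which a text is flagged as AI-generated. The sketch below uses invented scores for eight texts (four human, four AI) to show how moving that threshold trades one type of error for the other; the names and data are illustrative assumptions, not results from any real tool.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    false_positive_rate: float  # share of human texts wrongly flagged as AI
    false_negative_rate: float  # share of AI texts that went undetected


def evaluate(scores: list[float], is_ai: list[bool], threshold: float) -> Metrics:
    """Score = detector's estimated probability that a text is AI-generated."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, is_ai):
        flagged = score >= threshold
        if flagged and label:
            tp += 1
        elif flagged and not label:
            fp += 1
        elif not flagged and label:
            fn += 1
        else:
            tn += 1
    humans = fp + tn
    ais = tp + fn
    return Metrics(
        accuracy=(tp + tn) / len(scores),
        false_positive_rate=fp / humans if humans else 0.0,
        false_negative_rate=fn / ais if ais else 0.0,
    )


# Invented detector scores: the first four texts are human, the last four AI.
SCORES = [0.1, 0.4, 0.6, 0.7, 0.3, 0.55, 0.8, 0.9]
IS_AI = [False] * 4 + [True] * 4

for threshold in (0.5, 0.75):
    print(threshold, evaluate(SCORES, IS_AI, threshold))
```

With a threshold of 0.5 the toy detector flags half of the human texts and misses one AI text; raising it to 0.75 clears every human text but lets half of the AI texts through. A single accuracy percentage hides this split between the two kinds of error.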

The reality is that these tools work reasonably well in many cases, but they have clear limits. They perform better with long texts than with short ones, and better with general content than with highly specialised texts. In addition, they need constant updates to keep up with new generative models as they emerge.

Even the best AI text detection tools are far from foolproof.

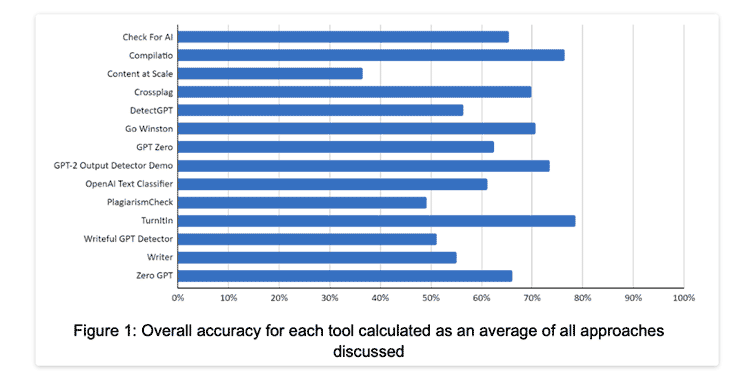

In fact, the numbers confirm this. The study "Testing of detection tools for AI-generated text" recently evaluated the accuracy of the main available tools, and the results are revealing: Turnitin and Compilatio achieve accuracy rates close to 80%, making them the most reliable of the tools tested.

How reliable are AI-generated text detectors? Source: Testing of detection tools for AI-generated text.

Other tools, such as Content at Scale or PlagiarismCheck, barely reach 50% (roughly what you would get by flipping a coin to decide between human and AI), while more popular ones such as GPTZero or ZeroGPT score between 65% and 70%. This shows that even the best options are still far from infallible.

When does it make sense to use these detectors?

Despite their limitations, these tools are useful in several sectors. In education, many institutions are integrating them into their academic assessment processes. In journalism and digital media, they are used to manage large-scale content production. In human resources, they are used in selection processes that involve analysing large volumes of applications.

They are also gaining relevance in areas where originality is especially critical. In the audiovisual sector, public funding calls for film scripts are beginning to incorporate them in the evaluation phase.

In general, they make sense wherever large amounts of text need to be analysed and content authenticity matters, provided they are combined with human judgement.

Conclusion

Detecting AI-generated content is not an exact science. It is a constantly evolving field that must always be complemented by human judgement and contextual analysis. We cannot blindly trust a percentage from a tool.

And now comes the inevitable question: was the article you just read written entirely by a human, or did it have help from an AI? I encourage you to check for yourself using one of the tools we’ve mentioned!
