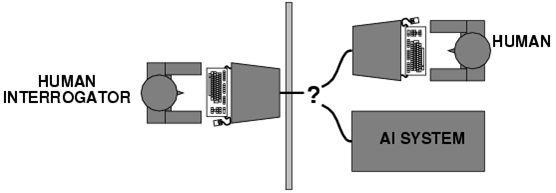

Figure 3. The Chinese Room thought experiment. Does the person in the room understand Chinese?

The key question here is whether you understand the Chinese language. What you have done is receive an input note and follow instructions to produce the output, without understanding anything about Chinese. The argument is that a computer can never understand what it does because, like you, it just executes the instructions of a software program. The point Searle wanted to make is that even if the behavior of a machine seems intelligent, it will never be really intelligent. On that basis, Searle claimed that the Turing Test was invalid.

The Intentional Stance

Related to the Turing Test and the Chinese Room argument, the Intentional Stance, coined by philosopher Daniel Dennett in the seventies, is also relevant to this discussion. The Intentional Stance means that the "intelligent behavior" of machines is not a consequence of how machines come to manifest that behavior (whether it is you following instructions in the Chinese Room or a computer following program instructions). Rather, it is an effect of people attributing intelligence to a machine because the behavior they observe would require intelligence if a person did it. A very simple example is that we say that our personal computer is "thinking" when it takes more time than we expect to perform an action. The fact that ELIZA was able to fool some people reflects the same phenomenon: because of the reasonable answers that ELIZA sometimes gives, people assume it must have some intelligence. But we know that ELIZA is a simple rule-based pattern-matching algorithm with no understanding whatsoever of the conversation it is engaging in. The more sophisticated software becomes, the more likely we are to attribute intelligence to it. From the Intentional Stance perspective, people attribute intelligence to machines when they recognize intelligent behavior in them.
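To make the ELIZA point concrete, here is a minimal sketch of rule-based pattern matching in Python. The rules, the `reply` function and the response wording are illustrative assumptions, not ELIZA's original script; the sketch only shows how plausible-looking answers can come out of pure text substitution.

```python
import re

# Hypothetical rules: (pattern, response template). Not ELIZA's real script.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def reply(utterance: str) -> str:
    """Return the response of the first rule whose pattern matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # The captured words are echoed back; nothing is "understood".
            return template.format(match.group(1))
    return DEFAULT_REPLY


print(reply("I feel tired today."))   # Why do you feel tired today?
print(reply("The weather is nice."))  # Please go on.
```

The first answer looks attentive, yet the program has only copied part of the input into a template, which is exactly the situation in which observers tend to attribute intelligence.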

To what extent can machines have "general intelligence"?

One of the main aspects of human intelligence is that we have a general intelligence that always works to some extent. Even if we don't have much knowledge about a specific domain, we are still able to make sense of situations and communicate about them. Computers are usually programmed for specific tasks, such as planning a space trip or diagnosing a specific type of cancer. Within the scope of the subject, computers can exhibit a high degree of knowledge and intelligence, but performance degrades rapidly outside that specific scope.

In AI, this phenomenon is called brittleness (as opposed to graceful degradation, which is how humans perform). Computer programs perform very well in the areas they are designed for, outperforming humans, but don't perform well outside of that specific domain. This is one of the main reasons why it is so difficult to pass the Turing Test, as this would require the computer to be able to "fool" the human tester in any conversation, regardless of the subject area.

In the history of AI, several attempts have been made to solve the brittleness problem. The first expert systems were based on the rule-based paradigm, representing associations of the type "if X and Y then Z", "if Z then A and B", etc. For example, in the area of car diagnostics, if the car doesn't start, then the battery may be flat or the starter motor may be broken. In this case, the expert system would ask the user (who has the problem) to check the battery or to check the starter motor.
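The "if X and Y then Z" paradigm described above can be sketched as a tiny forward-chaining rule engine. This is a minimal illustration in Python, not a reconstruction of any historical expert system; the rule texts and the `forward_chain` function are assumptions made for the example.

```python
# Hypothetical car-diagnosis rules: (set of conditions, conclusion).
RULES = [
    ({"car does not start"}, "suspect battery or starter motor"),
    ({"suspect battery or starter motor", "battery is flat"},
     "recharge or replace battery"),
    ({"suspect battery or starter motor", "battery is ok",
      "starter motor is broken"}, "replace starter motor"),
]


def forward_chain(facts):
    """Fire rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"car does not start", "battery is flat"})
print("recharge or replace battery" in derived)  # True

# Brittleness: a symptom outside the coded rules yields no conclusion at all.
print(forward_chain({"engine overheats"}))  # {'engine overheats'}
```

The second call shows the brittleness in miniature: the engine has nothing to say as soon as the input falls outside the rules it was given.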

The computer drives the conversation with the user to confirm observations, and based on the answers, the rule engine leads to the solution of the problem. This type of reasoning was called heuristic or shallow reasoning. However, the program doesn't have any deeper understanding of how a car works; it has only the knowledge embedded in the rules and cannot reflect on that knowledge. Based on the experience of those limitations, researchers started thinking about ways to equip a computer with more profound knowledge so that it could still perform (to some extent) even if the specific knowledge was not fully coded. This capability was called "deep reasoning" or "model-based reasoning", and a new generation of AI systems emerged, called "Knowledge-Based Systems".

In addition to specific association rules about the domain, such systems have an explicit model of the subject domain. If the domain is a car, then the model would consist of a structural model of the parts of a car and their connections, and a functional model of how the different parts work together to produce the behavior of the car. In the case of the medical domain, the model would represent the structure of the part of the body involved and a functional model of how it works. With such models the computer can reason about the domain and come to specific conclusions, or can conclude that it doesn't know the answer.
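As a rough illustration of model-based reasoning, the sketch below encodes a toy structural model of a car and uses it to narrow down possible faults. The parts, the `DEPENDS_ON` table and both functions are hypothetical, and the functional model is reduced to one simplifying assumption: a part works only if everything it depends on works.

```python
# Hypothetical structural model: each part lists the parts it depends on.
DEPENDS_ON = {
    "battery": [],
    "fuel pump": ["battery"],
    "starter motor": ["battery"],
    "headlights": ["battery"],
    "engine": ["starter motor", "fuel pump"],
}


def upstream(part):
    """Return the part itself plus everything it directly or indirectly needs."""
    found = {part}
    for dependency in DEPENDS_ON[part]:
        found |= upstream(dependency)
    return found


def candidate_faults(failing, working=()):
    """Parts that could explain the failing part, given parts observed to work.

    Returns None when the failing part is not in the model at all,
    i.e. the system concludes that it does not know.
    """
    if failing not in DEPENDS_ON:
        return None
    candidates = upstream(failing)
    for part in working:
        if part in DEPENDS_ON:
            # Assumption: a working part implies its dependencies work too.
            candidates -= upstream(part)
    return candidates


print(sorted(candidate_faults("engine", working=["headlights"])))
# ['engine', 'fuel pump', 'starter motor']
print(candidate_faults("air conditioning"))  # None
```

No rule says "if the headlights work, the battery is fine"; that conclusion follows from the model itself, and a symptom outside the model produces an explicit "don't know" rather than a wrong answer.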

The deeper the model a computer can reason about, the less superficial its reasoning becomes and the closer it comes to the notion of general intelligence. There are two additional important aspects of general intelligence where humans excel compared to computers: qualitative reasoning and reflective reasoning.

Figure 4: Both qualitative reasoning and reflective reasoning differentiate us from computers.


Qualitative reasoning

Qualitative reasoning refers to the ability to reason about continuous aspects of the physical world, such as space, time, and quantity, for the purpose of problem solving and planning. Computers usually calculate things in a quantitative manner, while humans often use a more qualitative way of reasoning (if X increases, then Y also increases, thus ...). The qualitative reasoning area of AI is concerned with the formalisms and processes that enable a computer to perform qualitative reasoning steps.
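The "if X increases, then Y also increases" style of inference can be sketched as sign propagation over an influence graph. This is a minimal Python illustration under stated assumptions: the quantities, the `INFLUENCES` table and the `propagate` function are made up for the example, and the graph is assumed to contain no conflicting influences on the same quantity.

```python
# Hypothetical influence graph for a water tank:
# (cause, effect) -> +1 (changes in the same direction) or -1 (opposite).
INFLUENCES = {
    ("inflow", "water level"): +1,
    ("water level", "pressure at drain"): +1,
    ("pressure at drain", "outflow"): +1,
    ("water level", "free space in tank"): -1,
}


def propagate(quantity, direction):
    """Propagate a qualitative change (+1 increase, -1 decrease) through the graph."""
    changes = {quantity: direction}
    frontier = [quantity]
    while frontier:
        cause = frontier.pop()
        for (source, effect), sign in INFLUENCES.items():
            if source == cause and effect not in changes:
                # Only the direction of change is tracked, never a magnitude.
                changes[effect] = changes[cause] * sign
                frontier.append(effect)
    return changes


result = propagate("inflow", +1)
print(result["outflow"])             # 1  (outflow increases)
print(result["free space in tank"])  # -1 (free space decreases)
```

No numbers for flow rates or volumes are needed: the conclusion that outflow rises when inflow rises comes purely from the directions of influence.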

Reflective reasoning

Another important aspect of general intelligence is reflective reasoning. During problem solving, people are able to take a step back and reflect on their own problem-solving process, for instance, if they reach a dead end and need to backtrack to try another approach. Computers usually just execute a fixed sequence of steps that the programmer has coded, with no ability to reflect on the steps they take. To reflect on its own reasoning process, a computer needs to have knowledge about itself: some kind of meta-knowledge. For my PhD research, I built an AI program for diagnostic reasoning that was able to reflect on its own reasoning process and select the optimal method depending on the context of the situation.
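A very reduced sketch of this idea in Python is shown below. It is not the PhD system mentioned above; the two methods, the `METHODS` table (standing in for meta-knowledge) and `solve_reflectively` are hypothetical, and "reflection" is limited to recording a trace of attempts and switching methods on a dead end.

```python
def rule_lookup(problem):
    """Fast object-level method: answers only if a stored rule covers the symptom."""
    rules = {"car does not start": "check the battery"}
    return rules.get(problem["symptom"])  # None signals a dead end


def model_search(problem):
    """Slower object-level method: falls back on a (stubbed) domain model."""
    if problem.get("has_model"):
        return "trace the fault through the domain model"
    return None


# Meta-knowledge: what the system knows about its own methods.
METHODS = [
    {"name": "rule_lookup", "run": rule_lookup, "cost": 1},
    {"name": "model_search", "run": model_search, "cost": 5},
]


def solve_reflectively(problem):
    """Try methods cheapest first and keep a trace of every attempt, so the
    system can see where it hit a dead end and switch to another method."""
    trace = []
    for method in sorted(METHODS, key=lambda m: m["cost"]):
        answer = method["run"](problem)
        trace.append((method["name"], "ok" if answer else "dead end"))
        if answer:
            return answer, trace
    return None, trace


answer, trace = solve_reflectively({"symptom": "engine overheats", "has_model": True})
print(answer)  # trace the fault through the domain model
print(trace)   # [('rule_lookup', 'dead end'), ('model_search', 'ok')]
```

The trace is the important part: it is data about the reasoning itself, separate from the domain knowledge, which is what a meta-level needs in order to choose or change methods.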

Conclusion

With the above concepts explained, it should be somewhat clearer that there is no concrete answer to the question posed in the title of this post: it depends on what one wants to believe and accept. By reading this series, you will have learned some basic concepts that will enable you to feel more comfortable talking about the rapidly growing world of AI. The third and last post will discuss the question of whether machines can think, or whether humans are indeed machines. Stay tuned and visit our blog soon to find out.

Figure 2. The set up of the original Turing Test.


