If you want to change your employees’ security habits, don’t appeal to their willpower; modify their environment instead

- They produce predictable results: they influence towards a predictable direction.

- They fight against irrationality: they intervene when people don’t act rationally in their own self-interest because of their cognitive limits, biases, routines and habits.

- They tap into irrationality: they exploit people’s cognitive limits, heuristics, routines and habits to steer them towards better behavior.

- It produces predictable results: more users turn to the most secure choices.

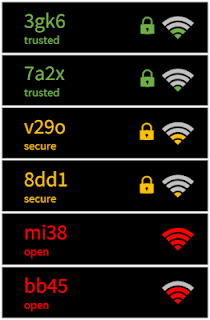

- It fights against irrationality: it counters the unthinking urge to connect, which may be satisfied by the first Wi-Fi network with a good signal that appears in the list, regardless of whether it is open or secured.

- It taps into that same irrationality: green elements are seen as more secure than red ones, we favor the first options in a list over the last ones, we pay more attention to visual cues (locks) than to textual ones, we favor (supposed) speed over security, and so on.

- Well-designed nudges have the power to influence decisions.

- Their capacity to modify behavior grows as the probability that the user behaves insecurely increases.

- The power to alter behavior rises when several types of nudges are combined, engaging both System I and System II.

- If I download and install this app, will it put my security at risk?

- If I plug this USB drive into the laptop, will it be an entry vector for viruses?

- If I create this short, easy-to-remember password, will it be cracked?

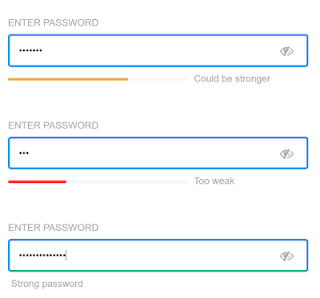

- Codes: they involve manipulating the environment to make the undesirable (insecure) behavior (almost) impossible. For instance, if you want your system’s users to create secure passwords, you may refuse any password that does not follow the password security policy: "at least 12 characters long, including both alphanumeric and special characters as well as upper and lower case, and not repeated among the last 12 passwords". The user then has no choice but to comply, or they will not be able to access the system. In general, every security guideline that leaves no options falls into this category: blocking USB ports to prevent potentially dangerous devices from being connected; restricting the sites that can be visited to a whitelist; limiting the size of e-mail attachments; and many others typically found in organizational security policies. Codes are very effective at modifying behavior, but they neither leave a choice nor exploit limited rationality, so they cannot be considered "nudges". In fact, many of these measures are unpopular with users and may lead them to look for workarounds that completely defeat their purpose, such as writing complex passwords on a sticky note stuck to the monitor: users are then protected against remote attacks, but in-house attacks become easier and are even encouraged.

- Nudges: they exploit cognitive biases and heuristics to influence users towards wiser (more secure) behaviors. For example, going back to passwords, if you want your system’s users to create more secure passwords in line with the security policy guidelines mentioned above, you can add a password strength indicator to signup forms. Users feel the need to get a stronger password, so they are more likely to keep adding characters to make it more complex until the result is a bright green "robust password". Even though the system does not forbid weak passwords, and so respects users’ self-determination, this simple nudge drastically increases the complexity of the passwords created.

- Notices: they are purely informative interventions intended to prompt reflection. For instance, the new-password form may include a message describing the expected characteristics of new passwords and explaining how important strong passwords are for preventing attacks. Unfortunately, informative messages are largely ineffective, since users tend to ignore them and often do not even find them intelligible. These notices cannot be considered "nudges" either, since they exploit neither biases nor cognitive limits. Nevertheless, their efficacy can be notably increased if they are combined with a nudge: for instance, by including the message and the strength indicator on the same password creation page. These hybrid nudges aim to engage System I, quick and automatic, through the nudge itself, and System II, slow and thoughtful, through the informative message.
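The difference between a code and a nudge in the password example can be sketched in a few lines of Python. This is a minimal illustration, not a production validator: the function names, the scoring formula and the colour thresholds are assumptions made for this sketch, and "alphanumeric" is read here as "letters and digits". Only the policy rules themselves come from the example above.

```python
import string

MIN_LENGTH = 12      # from the policy quoted in the text
HISTORY_DEPTH = 12   # "not repeated among the last 12 passwords"


def _character_classes(password: str) -> int:
    """Count how many of the four character classes appear in the password."""
    return sum([
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ])


def policy_violations(password: str, previous: list[str]) -> list[str]:
    """'Code' approach: list every broken rule; an empty list means accepted.

    `previous` holds plain strings only to keep the sketch short; a real
    system would compare against stored hashes instead.
    """
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append("shorter than 12 characters")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no digit")
    if not any(c in string.punctuation for c in password):
        problems.append("no special character")
    if password in previous[-HISTORY_DEPTH:]:
        problems.append("reused from the last 12 passwords")
    return problems


def strength_label(password: str) -> tuple[str, str]:
    """'Nudge' approach: never rejects, only returns (label, colour) feedback.

    The score (0 to 8) is a made-up heuristic for illustration only.
    """
    score = min(len(password), 16) // 4 + _character_classes(password)
    if score >= 7:
        return ("robust", "green")
    if score >= 5:
        return ("fair", "orange")
    return ("weak", "red")
```

A signup form built on `policy_violations` blocks the user until the list is empty, whereas one built on `strength_label` accepts any password and simply shows the coloured label as the user types. Displaying the policy text next to that label would correspond to the hybrid of notice and nudge described above.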

- Default options: provide more than one option, but always make sure that the default option is the most secure one. Even if you allow users to select a different option, most of them will not do so.

- (Subliminal) information: a password creation page induces users to create stronger passwords if it shows images of hackers, or simply of eyes, or even if the wording is changed: “enter your secret” instead of “enter your password”.

- Targets: present a goal to the user, for instance a strength indicator, a percentage indicator or a progress bar. Users will then strive to complete the task. This type of intervention can also be categorized as feedback.

- Feedback: provide users with information that lets them understand, while a task is being carried out, whether each action is achieving the expected result. For example, report the security level reached during the set-up process of an application or service, or the risk level of an action before the user taps “Send”. Mind you, the language must be carefully adapted to the recipient’s skill level. For instance, in one study, the use of metaphors such as "locked doors" and "bandits" helped users understand the information better and consequently make better choices. In another study, researchers found that periodically informing Android users about the permissions used by installed apps led them to review the permissions granted. In a further study, the same researchers informed users about how their location was being used; as a result, users limited app access to their location. In yet another piece of research, telling users how many people could view their post on a social network led a large number of them to delete the post to avoid later regret.

- Conventional behavior: show each user where they stand in relation to the average user. Nobody likes to lag behind; everyone wants to be above average. For instance, after a password has been chosen, the message “87% of your colleagues have created a strong password” makes users who had created a weak password reconsider and create a more secure one.

- Order: present the most secure option at the top of the list. We tend to select the first option we see.

- Standards: use pictographic conventions: green means “secure”, red indicates “danger”. A lock represents security, and so on.

- Prominence: highlighting the most secure options draws people’s attention to them and makes them easier to select. The more visible an option is, the more likely it is to be selected.

- Frames: you can present the result of an action as “making a profit” or “avoiding a loss”. Loss aversion tends to be the more powerful motivator.
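Several of these techniques (order, standards and default options) can be combined in the Wi-Fi list example mentioned earlier. The following Python sketch is only an illustration under stated assumptions: the `Network` class, its fields, the 0-100 signal scale and the text cues standing in for icons and colours are all hypothetical, not taken from any real operating system.

```python
from dataclasses import dataclass


@dataclass
class Network:
    ssid: str
    secured: bool
    signal: int  # hypothetical 0-100 signal quality


def arrange(networks: list[Network]) -> list[Network]:
    """Order nudge: secured networks first, then strongest signal first."""
    return sorted(networks, key=lambda n: (not n.secured, -n.signal))


def render(networks: list[Network]) -> list[str]:
    """Build the list shown to the user; no option is ever removed."""
    lines = []
    for position, net in enumerate(arrange(networks)):
        # Standards nudge: a lock and green for secure, red for open.
        cue = "[lock, green]" if net.secured else "[open, red]"
        # Default nudge: preselect the top entry, but only if it is secured.
        preselected = " <- preselected" if position == 0 and net.secured else ""
        lines.append(f"{cue} {net.ssid}{preselected}")
    return lines


if __name__ == "__main__":
    nearby = [
        Network("CafeFree", secured=False, signal=90),
        Network("Office", secured=True, signal=60),
        Network("Guest", secured=True, signal=75),
    ]
    for line in render(nearby):
        print(line)
```

The open network with the strongest signal still appears and can still be chosen, so the user’s freedom of choice is preserved; it is simply listed last and marked as less safe.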

- Autonomy: the end user must be free to choose any of the options offered, regardless of the direction in which the nudge points. In general terms, no option should be forbidden or removed from the environment. If an option has to be restricted for security reasons, the restriction must be justified.

- Benefit: the nudge must only be deployed when it provides a clear benefit, so that the intervention is fully justified.

- Justice: as many people as possible must benefit from the nudge, and not only its author.

- Social responsibility: both the anticipated and the unanticipated results of the nudge must be considered. Pro-social nudges that advance the common good must always be considered.

- Integrity: nudges must be designed with scientific support, whenever possible.
