The billion dollar mistake

We owe Tony Hoare, or Sir C.A.R. Hoare (born 1934), a great deal for his contributions to computing. His name may not ring a bell, but if you have studied computer science you will almost certainly have come across one or two (or more) of his inventions, for example the Quicksort algorithm.

With that on his business card, I should perhaps add that he also invented a formal language that lets concurrent processes live in harmony: CSP, or Communicating Sequential Processes, which today inspires the concurrency model (goroutines and channels) of the Go (or Golang) programming language.

Suffice it to say that he is one of the "Turings": he received the Turing Award in 1980 for his contributions to the definition and design of programming languages. And it is precisely here, at this stop, that we get off and continue on foot.

ALGOL's cursed inheritance

In 1965, Hoare was working on a successor to ALGOL 60, a programming language that was a modest commercial success at the time and was mainly used in research. This language, called ALGOL W in a display of originality, was designed jointly with another of the greats, Niklaus Wirth, and was in turn based on their proposal for a new version of ALGOL named (surprise) ALGOL X.

ALGOL W failed to win the hearts of ALGOL 60 programmers as its successor. In the end, the honour went to the other pretender to the throne: ALGOL 68 (yes, catchy language names did not become mainstream until decades later).

What ALGOL W did achieve, however, was to lay the foundations for something bigger. Much bigger: Niklaus Wirth used it as the basis for one of the most widely used programming languages of all time, and the one for which he is perhaps best known: Pascal.

However, Pascal and its lineage are the subject of another article. Let's keep our focus on ALGOL W. It carried something evil inside: a diabolus in musica that crept into its design, included deliberately but with consequences nobody foresaw, and which many programming languages still carry to this day like a cursed inheritance: the null reference...

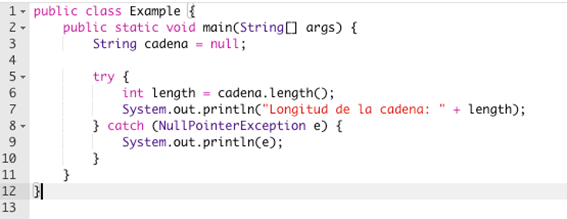

An example of a null reference

Let's look at an example to get the hang of it. We will use Java, which will surely sound familiar to those of you who have programmed a little in this language:

The example is almost self-explanatory:

- First, we declare a variable of type String but initialise it to 'null'. That is, the variable 'string' does not reference any object of the String class; in fact, it does not reference anything at all. 'null' indicates that the variable is still waiting to reference a String object.

- The small catastrophe happens when we go on to use that variable. When we call the "length" method that objects of the String class possess, the Java runtime tries to follow the reference that 'string' should hold, but there is nothing there: zero, null. The result is a NullPointerException.
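The original listing is not reproduced here, so the following is a minimal sketch of the scenario just described; the class name NullReferenceDemo is purely illustrative. Note that it compiles without complaint: the problem only surfaces at runtime.

```java
// Minimal sketch of the scenario described above (not the original listing).
class NullReferenceDemo {
    public static void main(String[] args) {
        // The variable exists, but it does not reference any String object.
        String string = null;

        // Dereferencing the null reference: the JVM throws a NullPointerException here.
        int length = string.length();

        // Never reached: the unhandled exception stops the program before this line.
        System.out.println("Length: " + length);
    }
}
```

Run as-is, the program terminates with a NullPointerException on the call to length(), and the last line is never printed.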

What does this cause?

Well, first of all, the program stops running if we do not handle the exception (a NullPointerException). The problem is not that this trivial but illustrative example stops: can you imagine the same thing happening in a program that calculates a commercial aircraft's approach to the runway in mid-flight?

⚠️ This error, when it manifests itself (and it is not difficult for it to do so), has led to tremendous repair costs. In fact, Tony Hoare himself calls it his "billion dollar mistake", and his talk of the same title, in which he reflects on the consequences of his "invention", is famous.

If we think of a program as a big state machine, we will realise that, at any given moment, a variable may not contain an object even though the variable itself exists.

In addition, that variable can be passed as a parameter to a function or method, or inserted into a collection (a vector, a list, etc.). Wherever it travels, if it was never given an object, or it was set to null once the object it referenced was no longer wanted, it carries a null reference that is ready for disaster the moment it is dereferenced.
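A short sketch of how a null can travel before it explodes, assuming a hypothetical helper totalLength: the list accepts the null without complaint, and the failure only appears later, inside a method that had nothing to do with creating it.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example: a null stored in a collection fails far from where it came from.
class NullPropagation {
    // Hypothetical helper: adds up the lengths of all names in the list.
    static int totalLength(List<String> names) {
        int total = 0;
        for (String name : names) {
            total += name.length(); // throws NullPointerException when name is null
        }
        return total;
    }

    public static void main(String[] args) {
        List<String> names = new ArrayList<>();
        names.add("Ada");
        String missing = null; // e.g. the result of a lookup that found nothing
        names.add(missing);    // ArrayList accepts null silently
        names.add("Alan");
        System.out.println(totalLength(names)); // the exception is raised inside totalLength
    }
}
```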

We have already seen in previous posts how, in languages like C or C++, where memory is managed manually, we can run into pointers that no longer point to a valid object in memory (dangling pointers).

How do we avoid null references?

Let's stay with Java for illustrative purposes. We assume that other programming languages in which a reference can be null have, or are developing, similar defensive mechanisms.
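The most basic defensive mechanism, available in any version of Java, is simply to check for null before dereferencing. A minimal sketch, where safeLength is a hypothetical helper and returning 0 for a missing string is an arbitrary choice made for illustration:

```java
// Sketch of the classic defensive check: test for null before dereferencing.
class DefensiveCheck {
    // Hypothetical helper: length of a possibly-null string, treating null as empty.
    static int safeLength(String text) {
        if (text == null) {
            return 0; // an explicit decision about what "no value" means here
        }
        return text.length();
    }

    public static void main(String[] args) {
        System.out.println(safeLength("hello"));
        System.out.println(safeLength(null));
    }
}
```

The weakness of this approach is that every caller has to remember the check, and a default such as 0 can quietly hide the fact that there was no value at all.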

First of all, the curious thing is: if we have a variable of type String, why does the compiler allow us to initialise it with 'null'? Does 'null' have a generic type? Not really. In Java, 'null' is a literal whose type is a special null type that has no name; the language allows that value to be assigned to any reference type, yet it is not an instance of any of them.

In fact, strictly speaking, since 'null' is not really a String, assigning it could be seen as a type mismatch. That is, the very act of writing "String string = null;" should arguably cause a compilation error, and at runtime a variable holding 'null' should at least trigger a warning before it is ever used.
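The following sketch illustrates the point about null's type: the compiler accepts null for any reference type, yet null is not an instance of any of them. It also uses Objects.requireNonNull from the standard library, which comes close to the early warning described above by failing at the point of the check rather than at the first use; requireText is a hypothetical helper.

```java
import java.util.Objects;

class NullTyping {
    // Hypothetical helper: rejects null at the boundary instead of at first use.
    static String requireText(String text) {
        return Objects.requireNonNull(text, "text must not be null");
    }

    public static void main(String[] args) {
        String text = null;      // compiles: null is assignable to any reference type
        Integer number = null;   // compiles too
        // int primitive = null; // does NOT compile: primitives cannot hold null

        System.out.println(text instanceof String);    // false: null is an instance of no type
        System.out.println(number instanceof Integer); // false

        requireText(text); // throws NullPointerException("text must not be null") right here
    }
}
```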

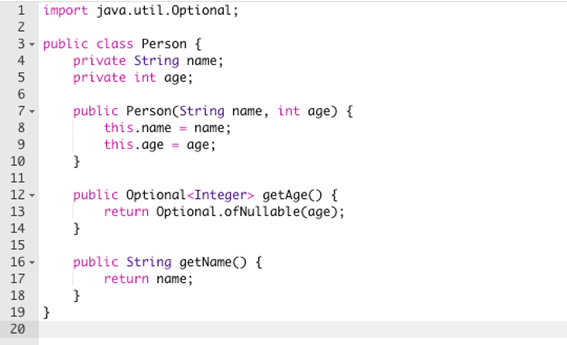

And this is precisely the strategy behind a class designed from the ground up to represent a value that may be absent without relying on null: Optional.

Such a class does not free us from the evils of null, but it does give us a very powerful mechanism to manage its risks. Instead of hiding the possible absence of a value, it does just the opposite: it makes it explicit. An example will make this clearer:

In this case we have a Person class with a getter method for the age. So far, nothing unusual; the interesting part is that, since we do not know whether the 'age' field will hold a value or be null, the getter returns not an integer but an object of the Optional class (an Optional&lt;Integer&gt;).
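A minimal sketch of such a Person class, assuming the age is stored internally as a nullable Integer (the fields and constructor are assumptions; only the idea of a getter returning Optional comes from the text). Optional.ofNullable wraps a possibly-null value and produces an empty Optional when it is null:

```java
import java.util.Optional;

// Sketch of a Person whose age may be unknown (fields and constructor are assumptions).
class Person {
    private final String name;
    private final Integer age; // null when the age is unknown

    Person(String name, Integer age) {
        this.name = name;
        this.age = age;
    }

    String getName() {
        return name;
    }

    // Instead of returning a possibly-null Integer, the getter makes absence explicit.
    Optional<Integer> getAge() {
        return Optional.ofNullable(age);
    }
}
```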

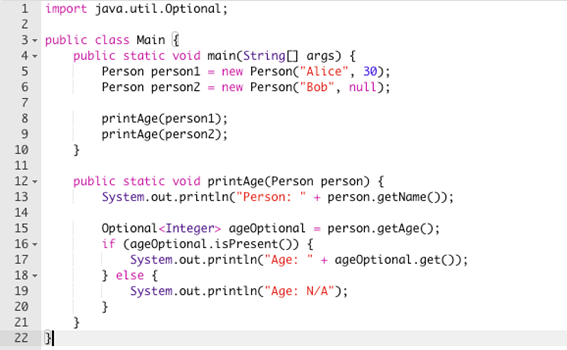

This object is not the value itself but a wrapper we can work with safely. Let's now look at an example that uses the Person class:

As we can see, we do not get the value directly; we are somehow forced to treat it as what it is: something optional. In this case, we simply ask whether it holds a well-defined value and use it, or otherwise handle its absence appropriately (with no exceptions along the way).
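A sketch of client code along those lines. To keep it self-contained it includes a minimal stand-in Person class; describeAge is a hypothetical helper, and the messages are illustrative.

```java
import java.util.Optional;

// Sketch of client code working with an Optional age (names are hypothetical).
class PersonAgeExample {
    // Minimal stand-in for the Person class described above, so this sketch compiles alone.
    static class Person {
        private final Integer age; // null means "age unknown"

        Person(Integer age) {
            this.age = age;
        }

        Optional<Integer> getAge() {
            return Optional.ofNullable(age);
        }
    }

    // Hypothetical helper: describes the age, handling its absence explicitly.
    static String describeAge(Person person) {
        Optional<Integer> age = person.getAge(); // not an int: a wrapper we have to open
        if (age.isPresent()) {
            return "Age: " + age.get();
        } else {
            return "Age unknown";
        }
    }

    public static void main(String[] args) {
        System.out.println(describeAge(new Person(42)));   // Age: 42
        System.out.println(describeAge(new Person(null))); // Age unknown
    }
}
```

The same logic can be written more compactly as person.getAge().map(a -> "Age: " + a).orElse("Age unknown"), which avoids calling get() altogether.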

Are there languages without null types?

In almost every language in which a reference (or pointer, etc.) can be null, eliminating null errors through good programming practices, defensive programming or plain bug fixing is a headache.

The possibility of a value being absent or invalid (null) exists in practically all languages; what changes is how the language treats it and, above all, the tools it gives programmers.

Rust, Haskell, Kotlin and Swift, among many others, have had native mechanisms for this from day one: Option in Rust, Maybe in Haskell, and nullable (optional) types such as String? in Kotlin and Swift.

The principle is: if a value can be absent, its type says so, and you must handle it accordingly. Almost all of them work in a similar way to Optional (present in Java since version 8).

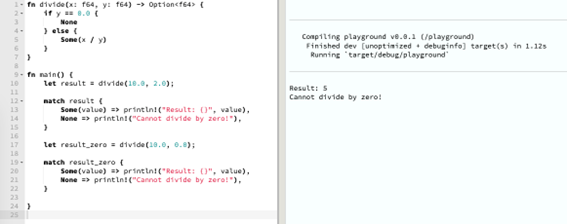

An example in Rust (the code repetition is for teaching purposes):

Dividing by zero is handled much like a missing reference or a null value: since we cannot divide by zero, the function is designed to check in advance whether the divisor is zero.

The function does not return the result of the division directly but an Option (Some with the result, or None), on which we must use pattern matching (a match expression, for example) to determine whether the operation succeeded.
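To stay in one language with the earlier examples, here is the same design translated to Java as a sketch (not the original Rust listing), using the standard OptionalDouble class; divide and describe are hypothetical names. The isPresent check plays the role that matching on Some and None plays in Rust.

```java
import java.util.OptionalDouble;

// Java translation of the idea: the result of the division may be absent.
class SafeDivision {
    // Returns an empty OptionalDouble when the divisor is zero instead of a misleading number.
    static OptionalDouble divide(double dividend, double divisor) {
        if (divisor == 0.0) {
            return OptionalDouble.empty();
        }
        return OptionalDouble.of(dividend / divisor);
    }

    // Hypothetical helper: the caller must deal with both cases, like Some/None in Rust.
    static String describe(OptionalDouble result) {
        return result.isPresent() ? "Result: " + result.getAsDouble() : "Cannot divide by zero";
    }

    public static void main(String[] args) {
        System.out.println(describe(divide(10.0, 4.0))); // Result: 2.5
        System.out.println(describe(divide(10.0, 0.0))); // Cannot divide by zero
    }
}
```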

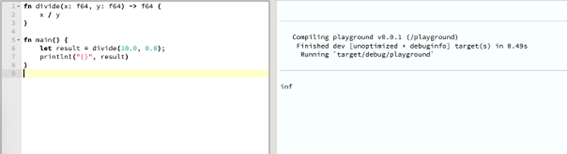

You may ask yourself... is it possible to write the function the WRONG way, so that it returns an f64 directly instead of an Option? Of course it is:

But that is precisely how not to write a function. If you design and implement it to be fail-safe, you do not hand back a bare f64 that might be invalid (in Rust, floating-point division by zero silently yields infinity or NaN rather than an error); instead, you force the caller to check whether the value is present and to deal appropriately with the case where it is not.
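For comparison, a sketch of the unsafe design in Java. Like Rust's f64, Java's double follows IEEE 754 floating-point rules, so dividing by zero raises no error: it quietly returns Infinity or NaN, which the caller may never notice. The class and method names are illustrative.

```java
// Sketch of the "wrong" design in Java: returning a bare double hides the failure case.
class UnsafeDivision {
    // No signal for a zero divisor: floating-point rules decide what comes back.
    static double divide(double dividend, double divisor) {
        return dividend / divisor;
    }

    public static void main(String[] args) {
        System.out.println(divide(10.0, 4.0)); // 2.5
        System.out.println(divide(10.0, 0.0)); // Infinity: no error, just a strange value
        System.out.println(divide(0.0, 0.0));  // NaN: "not a number", silently propagated
    }
}
```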

Conclusions

A software bug can cost us a lot of money, both to repair it and to mitigate the damage it has caused, not to mention the cases that have made headlines in the general media because of the disasters they caused.

Null references are among the most common bugs and have been with us since the infancy of programming languages. They were invented, no less, by one of the most decorated scientists in the field.

Modern languages have mechanisms that make it easier for us to deal with these errors and harder for us to commit them in the first place.

Finally, a simile from real life: imagine a letter that has no sender. Where does the letter go back to if the address is wrong? If it has a sender, there is a fallback for when the destination address is not valid: return it to the sender.

As we see, we often deal with old problems and we don't see that the solution was already there centuries ago...

Image: Freepik.
