How AI enhances accessibility and breaks down language barriers

Imagine the frustration of encountering digital content you cannot consume. Millions of people remain excluded from digital content due to disabilities, learning differences, or language barriers, limiting their educational, professional, and social opportunities.

AI and machine learning are opening up a world of possibilities to make content and knowledge accessible to everyone. These technologies have the potential to personalize and adapt the user experience, reducing the gaps faced by individuals with disabilities, learning differences, or varying abilities.

The transformative role of AI in accessibility

AI has enabled the development of tools and resources that facilitate access to information in different formats and languages. Translating text, images, audio, and video makes it easier to access technical information, courses, entertainment, books, and more. It also expands the possibilities for consuming internet and social media content, navigating unfamiliar places, and interacting in physical spaces.

Accessibility technologies benefit everyone: they expand access to content, enhance social interaction and inclusion, and enrich user experiences.

Some of the most impactful technologies breaking down these barriers include:

- Natural Language Processing (NLP): Allows machines to comprehend and generate human language, including sentiment analysis, information extraction, and contextual understanding.

- Language models: Ranging from basic statistical models to large language models (LLMs), these systems handle complex contexts and generate coherent responses.

- Computer vision: Analyzes and interprets visual content, supporting tasks such as object recognition, scene analysis, text recognition in images, and assisted navigation.

- Text-to-Speech (TTS) with neural synthesis: Converts written text into natural, expressive audio, delivering appropriate intonation and rhythm.

- Neural machine translation: Enables real-time adaptation and interpretation of content between languages.

- Automatic speech recognition (ASR): Accurately converts spoken audio into text, accommodating diverse accents and contexts.

In addition to these, emerging technologies like haptic interfaces, eye-tracking systems, gesture-based controls, and even neural interfaces are enhancing accessibility in unprecedented ways.

Multilingual video dubbing

One significant breakthrough is the automatic dubbing of videos into multiple languages. Advanced AI technologies can now recreate the speaker's original voice in different languages, preserving its tonal characteristics and authenticity.

Lip synchronization algorithms further enhance this experience by matching the audio with the speaker’s lip movements, creating a viewing experience as seamless as watching the original version.

This not only makes content more accessible to global audiences but also preserves the original emotional and performative nuances, allowing viewers to connect more intimately with the characters.

Success Story: The Prado Museum

The Prado Babel initiative by the Museo Nacional del Prado is a shining example of this technology. Using AI, the museum has translated and dubbed its video presentation into multiple languages. In the video, museum director Miguel Falomir retains his voice and image while speaking in over a dozen languages.

With the support of Telefónica, its technological partner, the museum offers visitors the opportunity to listen to explanations about its identity and collections in their own language. This fosters a more personal, inclusive, and accessible experience.

For this project, we at Telefónica Tech evaluated various tools to achieve the required dubbing and lip-sync quality. ElevenLabs ensured expressivity and emotional fidelity in voice reproduction, while HeyGen preserved the natural synchronization of lip movements with AI-generated audio.

✅ By using these technologies, the museum ensured that dubbed versions in diverse languages, such as German, Chinese, or Greek, maintained the authenticity of the original, creating a seamless audiovisual experience that reflected Falomir’s personality.

Automatic subtitles and Speech-to-Text transcription

Automatic video subtitles, widely available on platforms like YouTube and social media, significantly enhance accessibility. These systems contextualize translations and expand the number of supported languages, generating subtitles in real time.

By analyzing video audio, these technologies transcribe speech and synchronize it with visual content, providing written captions. This feature is invaluable for individuals with hearing impairments and also benefits those consuming content in another language or in silent environments.

— Automatically generated YouTube subtitles.

Speech-to-text transcription enables the quick capture of conversations or speeches, converting them into written text. This tool is helpful for people with hearing disabilities and in contexts like courses, conferences, or meetings. It is commonly integrated into virtual assistants, voice memo apps, language models, translators, and word processors, improving accessibility and productivity.
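The synchronization step described above, in which transcribed speech is aligned with the video, can be illustrated with a minimal sketch. It assumes a speech recognizer has already produced timed segments; the `Segment` class and the function names are hypothetical and chosen only for this example. The output follows the widely used SubRip (.srt) caption layout.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One recognized utterance (hypothetical shape of an ASR result)."""
    start: float  # start time in seconds
    end: float    # end time in seconds
    text: str     # transcribed text


def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS,mmm, the SubRip timestamp style."""
    ms_total = round(seconds * 1000)
    hours, rem = divmod(ms_total, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[Segment]) -> str:
    """Turn timed segments into numbered SubRip caption blocks."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        timing = f"{srt_timestamp(seg.start)} --> {srt_timestamp(seg.end)}"
        blocks.append(f"{index}\n{timing}\n{seg.text.strip()}")
    # Caption blocks are separated by a blank line.
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    demo = [
        Segment(0.0, 2.5, "Welcome to the museum."),
        Segment(2.5, 6.0, "This tour is available in several languages."),
    ]
    print(to_srt(demo))
```

A video player that supports SubRip files can load the resulting text alongside the video. Translating each segment's `text` before formatting would yield captions in another language with the same timing.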

■ VideoLAN recently showcased a demo of its VLC video player, which, using open-source AI models, generates subtitles automatically and translates them into over 100 languages in real time as the video plays.

'Universal' web page translation

Real-time website translation is becoming increasingly common. Tools like Google Translate and Microsoft Translator, integrated into browsers, translate content into multiple languages. Furthermore, these technologies are now native to web development, enabling browsers to interpret and translate text within images.

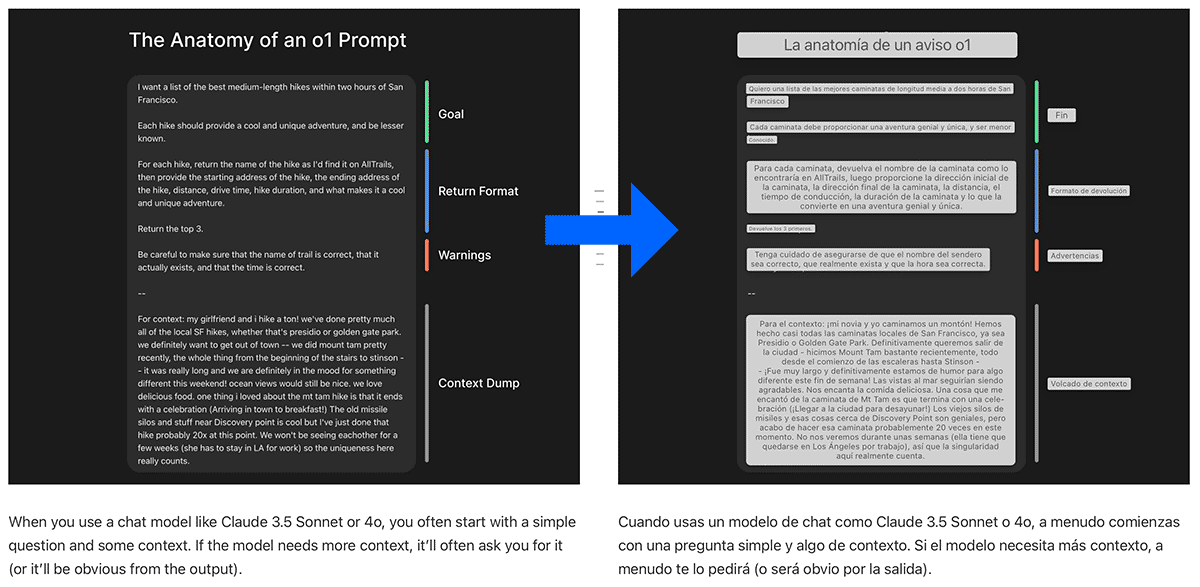

—Current web browsers can identify, interpret, and translate text in images on a web page (original page in English translated into Spanish).

This capability has the potential to eliminate language barriers and 'universalize' access to information and knowledge, offering seamless, accurate translations that make content navigation and understanding effortless for users.

Text-to-Speech (TTS)

TTS technology automatically converts text into speech and has been significantly enhanced by AI. Modern TTS systems leverage NLP to analyze text and generate speech with appropriate intonation, rhythm, and emotion, handling pauses, accents, and pronunciation nuances specific to each language.
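As a rough illustration of how pauses and language can be signalled to a speech engine, the sketch below wraps plain text in SSML (Speech Synthesis Markup Language, a W3C standard that many TTS engines accept). It is not how any specific product mentioned here works. The `to_ssml` function is hypothetical, and the sentence splitting is deliberately naive, whereas a production system would rely on proper NLP for this step.

```python
import re
from xml.sax.saxutils import escape, quoteattr


def to_ssml(text: str, lang: str = "en-US", pause_ms: int = 300) -> str:
    """Wrap text in SSML: one <s> element per sentence, with a pause between."""
    # Naive split after '.', '!' or '?' followed by whitespace (an assumption
    # made for brevity; abbreviations such as "Dr." would be split wrongly).
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    sentences = [p.strip() for p in parts if p.strip()]

    pause = f'<break time="{pause_ms}ms"/>'
    # escape() protects characters such as '&' and '<' inside the markup.
    body = pause.join(f"<s>{escape(s)}</s>" for s in sentences)

    return (
        '<speak version="1.1" '
        'xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>{body}</speak>"
    )


if __name__ == "__main__":
    print(to_ssml("Hello. Tom & Jerry are here!", lang="en-GB"))
```

Whether a given engine honours every SSML element varies, so the output should be checked against the documentation of the engine in use.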

TTS applications span virtual assistants, GPS systems, educational tools, language learning apps, and audiobook narration. TTS is also critical for automatic video dubbing.

For many, this technology transforms their interaction with the world. It empowers individuals with disabilities—especially those with visual impairments—to use mobile devices, computers, and other tools, enabling communication and access to previously inaccessible content.

■ Coqui TTS is an open-source voice synthesis engine that supports multiple languages and allows voice cloning with minimal audio samples. It benefits individuals with visual impairments or reading difficulties, as well as speakers of minority languages, since it can be trained to support new languages.

Computer Vision for environmental understanding

Computer vision has unlocked new possibilities for accessibility: its algorithms analyze and interpret images captured or viewed through devices such as mobile cameras.

These systems analyze and describe the content of an image or video in real time, providing descriptions in text or speech to individuals with visual impairments. This enables users to understand the context and meaning of visual media that was previously inaccessible, such as what is happening in a movie scene.

Additionally, this technology identifies and describes text within images, which benefits individuals with dyslexia or other reading challenges, including those related to language differences.

With applications like Google Lens, users can point their cameras at signage, restaurant menus, documents, or other text, and the app overlays the translation directly onto the original image. This allows anyone—travelers, students, or people living in multilingual environments—to gain an instant, accessible understanding of their surroundings.

■ Tools like Cloud Vision or Ollama OCR, which can be integrated into a variety of projects, are capable of precisely extracting text from photographs, images, and complex documents.

Computer vision enhances accessibility for people with visual impairments or reading difficulties while also enabling interactive tools useful for anyone.

Challenges and opportunities

While progress is undeniable, several challenges remain:

- Digital divide: Many lack access to the technology or the knowledge required to use these tools.

Example: A person with a hearing impairment may benefit from this technology but cannot access it due to the lack of a suitable device or the necessary digital skills.

- Bias in AI models: Insufficient linguistic diversity in training datasets can lead to errors in understanding regional accents, idiomatic expressions, or cultural nuances.

Example: A poorly trained system might translate (from Spanish) “Me tomas el pelo” as “You take my hair” instead of the correct idiomatic equivalent, “You’re pulling my leg,” losing its intended meaning.

- Cost and technological dependence: While many AI tools are technically accessible, they may require significant investments in infrastructure, training, and maintenance.

Example: Accessing AI-based translation tools might require owning and knowing how to use a smartphone or computer, which isn’t feasible for everyone.

Overcoming these challenges requires multidisciplinary collaboration among developers, educators, end-users, and public institutions. Continuous evaluation of AI systems is essential—not only in terms of technical performance but also of their social and ethical impact.

■ Ethical guidelines are essential to respecting user privacy and digital rights. These tools must operate efficiently and transparently while minimizing risks such as unauthorized data collection.

Conclusion

AI presents an unprecedented opportunity to build a more accessible and inclusive digital world. However, implementation is not without its challenges. Addressing ethical, technical, and social issues proactively is essential to ensuring these tools benefit everyone, regardless of their abilities or circumstances.

The journey toward digital accessibility is a continuous and collective effort, demanding commitment, innovation, and sensitivity. These technological advancements close gaps, improve lives, and open the door to a future accessible to all.
