AI & Data

Quantum Intelligence (part II): Problems (complex) and solutions (complex)

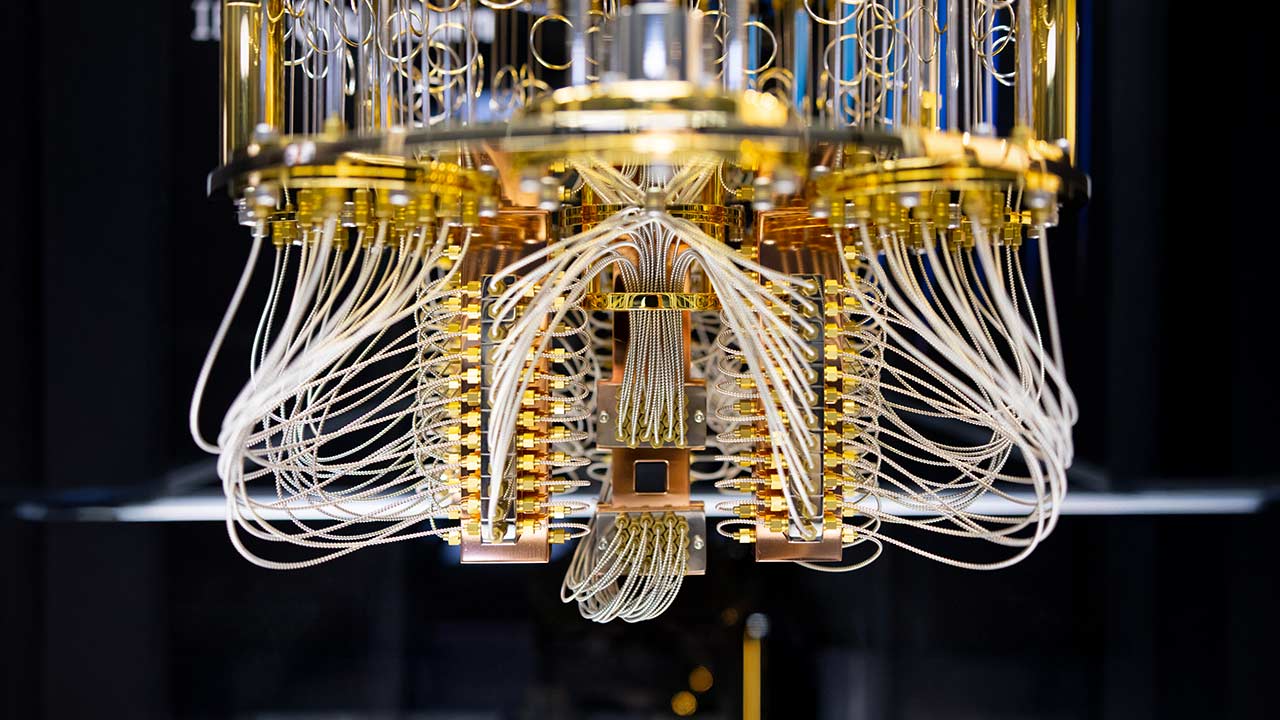

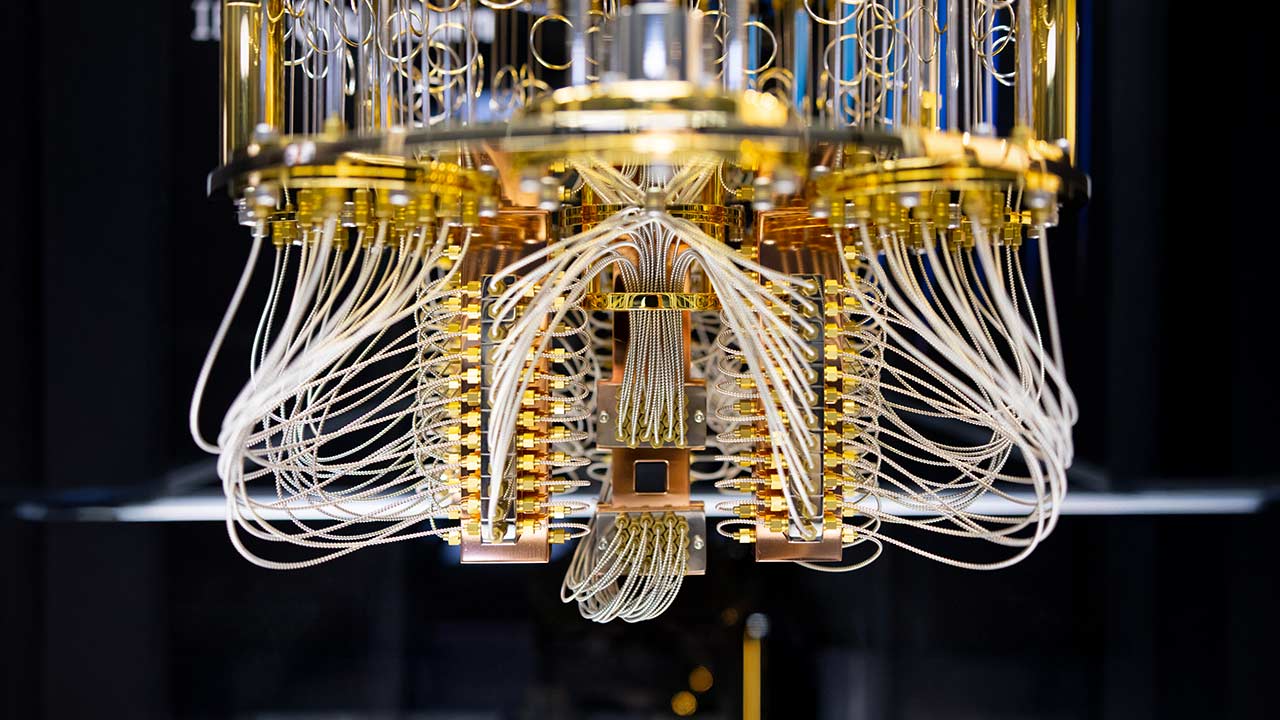

Quantum computing is starting to be applied in areas such as machine learning and optimization. Find out about the real possibilities of this technology for addressing complex problems and learn about current trends, from Quantum-as-a-Service to our position in the quantum ecosystem. I invite you to read a practical and realistic view of this field. In this second part we will talk about where it is useful (and where it is not) and the tools available.

_____

Quantum computing is exploring its potential to address complex challenges in three key areas: optimization, simulation of complex systems, and process improvement in artificial intelligence and machine learning. It is already being applied in practical cases where its ability to process information differently from classical methods offers promising advantages, although it is still an emerging technology.

Optimization

Optimization problems, which seek the best solution among a large number of possibilities, are an area where quantum computing stands out, and quantum algorithms to tackle them already exist. Logistics route planning or supply chain management, for example, require evaluating millions of combinations to find the optimal solution. Quantum computing addresses these challenges with algorithms such as the Quantum Approximate Optimization Algorithm (QAOA), which explores candidate solutions in superposition. In practice, these algorithms run together with classical systems, in what we can call hybrid systems: in QAOA, for instance, a classical optimizer tunes the parameters of the quantum circuit between runs.

Simulation

This is one of the most natural applications for quantum computing, as classical computers face limitations in modeling systems that follow the laws of quantum mechanics. Simulating complex molecules or advanced materials, for example, requires computational resources that grow exponentially with the size of the system.
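To make the hybrid idea concrete, here is a minimal sketch, under our own assumptions, of a classically simulated QAOA run. The toy problem (MaxCut on a three-node triangle), the function names and the grid-search optimizer are illustrative choices of ours, not Qiskit's or any vendor's API: the "quantum" half is a plain state-vector simulation of one QAOA layer, and the classical half tunes its two angles, gamma and beta.

```python
import cmath
import itertools
import math

# Illustrative toy problem (our assumption): MaxCut on a triangle graph.
# All names here are hypothetical; this is not a real quantum SDK.
EDGES = [(0, 1), (1, 2), (0, 2)]
N_QUBITS = 3
DIM = 2 ** N_QUBITS  # the state vector doubles with every extra qubit


def cut_value(z):
    """Edges cut by the assignment encoded in basis state z (bit j = node j)."""
    return sum(1 for u, v in EDGES if ((z >> u) & 1) != ((z >> v) & 1))


def qaoa_state(gamma, beta):
    """Classically simulate one QAOA layer (p = 1) and return the amplitudes."""
    # 1. Uniform superposition |+>^n over all 2^n candidate cuts.
    amps = [1 / math.sqrt(DIM)] * DIM
    # 2. Cost layer exp(-i*gamma*C): a phase proportional to each cut value.
    amps = [a * cmath.exp(-1j * gamma * cut_value(z)) for z, a in enumerate(amps)]
    # 3. Mixer exp(-i*beta*X_j) = cos(beta)*I - i*sin(beta)*X_j on every qubit j.
    c, s = math.cos(beta), math.sin(beta)
    for j in range(N_QUBITS):
        amps = [c * amps[z] - 1j * s * amps[z ^ (1 << j)] for z in range(DIM)]
    return amps


def expected_cut(gamma, beta):
    """Average cut over measurement outcomes (probability = |amplitude|^2)."""
    amps = qaoa_state(gamma, beta)
    return sum(abs(a) ** 2 * cut_value(z) for z, a in enumerate(amps))


def classical_optimizer(steps=40):
    """Classical half of the hybrid loop: a plain grid search over (gamma, beta)."""
    best = (float("-inf"), 0.0, 0.0)
    for i, k in itertools.product(range(steps), repeat=2):
        gamma, beta = 2 * math.pi * i / steps, math.pi * k / steps
        value = expected_cut(gamma, beta)
        if value > best[0]:
            best = (value, gamma, beta)
    return best


if __name__ == "__main__":
    value, gamma, beta = classical_optimizer()
    print(f"best expected cut {value:.3f} at gamma={gamma:.3f}, beta={beta:.3f}")
    print(f"random guessing gives {expected_cut(0.0, 0.0):.3f}")  # 1.500
    print("true maximum cut:", max(cut_value(z) for z in range(DIM)))  # 2
```

Random guessing cuts 1.5 edges on average, while the tuned circuit gets close to the true maximum of 2. Note also that `DIM = 2 ** N_QUBITS`: every extra qubit doubles the list of amplitudes a classical simulator has to store, which is the exponential growth mentioned above for molecular simulation.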
Quantum processors, on the other hand, can represent these states natively, which accelerates the study of atomic interactions. Researchers and companies are using this capability to design more effective drugs or innovative materials.

Improving AI and machine learning

In machine learning specifically, quantum computing is being investigated to accelerate tasks such as parameter optimization in predictive models. Quantum algorithms could identify patterns in large data sets by processing information in high-dimensional spaces. However, current hardware limitations force the use of hybrid approaches, where the quantum part is reserved for specific subtasks, such as selecting the relevant models within an ensemble algorithm (an approach that is already available on some platforms).

Ecosystems and technologies

The quantum computing industry is made up of large technology companies as well as specialized startups. Large tech companies are creating ecosystems that combine quantum processors with classical servers, along with Quantum as a Service (QaaS) offerings that provide access to quantum computers through the cloud. Hyperscalers are also active in this market, developing their own hardware (Google's Willow, Microsoft's Majorana, AWS's Ocelot, etc.) along with libraries and programming languages (Cirq, Q#). Among emerging hardware manufacturers, notable companies include D-Wave Systems, Atom Computing, Xanadu, IonQ and Quantinuum, among many others.

Developer tools

Quantum development relies on frameworks that abstract away hardware complexity. Qiskit has become the de facto standard, followed by Cirq and PennyLane. These libraries are used from popular programming languages such as Python, but there are also quantum-specific programming languages, such as Q#.

Access to quantum technology

There are currently two main approaches to working with quantum computers:

First, you can deploy a quantum computer.
This is the more expensive option, but also the one that allows intensive use with a controlled budget. Typically, a hybrid quantum-classical system is installed, in which the quantum processors handle the specific subtasks where they can offer an advantage.

Second, as mentioned above, there is Quantum-as-a-Service (QaaS), a cloud service model that provides remote access to quantum computing resources. This approach allows experimentation with quantum technologies without significant upfront investment. QaaS offers key advantages, such as access to up-to-date, state-of-the-art technology and scalability according to project needs.

Quantum vs quantum-inspired

There are also companies in the market that sell quantum-inspired computing rather than quantum computing. It is important to understand the differences.

Illustration 4: Quantum vs Quantum Inspired

Quantum-inspired computing is an approach that applies mathematical principles and methods from quantum physics to develop improved classical algorithms, without the need for actual quantum hardware. Although these methods do not take advantage of quantum phenomena, they seek to replicate some of the theoretical advantages of quantum algorithms for specific problems. It should be noted, however, that they cannot match the potential of quantum computers for complex problems, and their performance is limited by the classical hardware that runs them. Along the same lines, HPC (High Performance Computing) systems can act as a temporary bridge towards the adoption of quantum computing, especially in tasks that require massive processing but do not rely on quantum advantages.

On the other hand, and as you should know by now, quantum computing is a radically new paradigm that leverages the principles of quantum mechanics to process information.
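Annealing-style quantum-inspired solvers, such as Fujitsu's Digital Annealer, are generally described as working on problems written in QUBO form (quadratic unconstrained binary optimization). The sketch below is only a classical stand-in under that assumption: it writes a toy MaxCut problem as a QUBO and solves it with textbook simulated annealing, which is not Fujitsu's algorithm. All function names are hypothetical.

```python
import itertools
import math
import random

# Classical stand-in for a quantum-inspired annealer (our assumption):
# plain simulated annealing on a QUBO. Names are hypothetical, not a vendor API.


def maxcut_qubo(edges):
    """Build QUBO coefficients Q so that energy(x) = -(number of cut edges)."""
    q = {}
    for u, v in edges:
        # For binary x: x_u + x_v - 2*x_u*x_v equals 1 exactly when the edge is cut.
        q[(u, u)] = q.get((u, u), 0) - 1
        q[(v, v)] = q.get((v, v), 0) - 1
        key = (min(u, v), max(u, v))
        q[key] = q.get(key, 0) + 2
    return q


def energy(q, x):
    """QUBO energy: sum of Q_ij * x_i * x_j over the stored coefficients."""
    return sum(w * x[i] * x[j] for (i, j), w in q.items())


def simulated_annealing(q, n, steps=5000, t_start=2.0, t_end=0.01, seed=0):
    """Textbook simulated annealing with single-bit flips and geometric cooling."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    e = energy(q, x)
    best_x, best_e = list(x), e
    for step in range(steps):
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        x[i] ^= 1  # propose: flip one bit
        new_e = energy(q, x)
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if new_e <= e or rng.random() < math.exp((e - new_e) / t):
            e = new_e
            if e < best_e:
                best_x, best_e = list(x), e
        else:
            x[i] ^= 1  # reject: undo the flip
    return best_x, best_e


def brute_force(q, n):
    """Exact minimum by checking all 2^n assignments (only viable for tiny n)."""
    return min(energy(q, list(bits)) for bits in itertools.product((0, 1), repeat=n))


if __name__ == "__main__":
    ring = [(i, (i + 1) % 6) for i in range(6)]  # 6-node ring: max cut is 6
    q = maxcut_qubo(ring)
    x, e = simulated_annealing(q, 6)
    print("annealing:", x, e, "| exact optimum:", brute_force(q, 6))
```

Everything here runs on ordinary classical hardware, which is the point of the quantum vs quantum-inspired distinction: the QUBO formulation is the same kind of input used by quantum annealers, but no quantum effect is involved. The brute-force check only works because the example has 2^6 = 64 assignments.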
Telefónica and quantum computing

Telefónica is consolidating its position in the European quantum ecosystem through a strategy based on public-private collaboration and the deployment of specialized infrastructure. Several key projects stand out in 2025:

Alliance with the Diputación de Vizcaya

Telefónica España became a technology partner of the Diputación de Vizcaya in February 2025 to drive its quantum industrial strategy. This collaboration includes the installation of Fujitsu's first Digital Annealer outside Japan, a hybrid system that combines quantum-inspired techniques with classical supercomputing. Hosted at our Telefónica headquarters in Vizcaya, this equipment will solve problems in multiple industries.

Center of Excellence in Quantum Technologies

Unveiled at MWC 2025, this center coordinates all of Telefónica's quantum initiatives, focusing on three pillars:

Quantum communications and cybersecurity: development of secure quantum networks and migration to post-quantum algorithms to protect critical infrastructure.

Computation and simulation: integration of quantum processors with classical supercomputing for practical cases in optimization and machine learning.

Quantum sensors: research in precision metrology for telecommunications and network monitoring.

Telefónica Tech and IBM collaboration

At Telefónica Tech we also collaborate with IBM on quantum-safe solutions, integrating technologies to protect critical data against future quantum computing threats. This alliance includes the deployment of cryptographic infrastructure in Madrid and the creation of a joint laboratory.

In addition to these public collaborations, Telefónica Tech continues to develop its internal capabilities so that it can offer quantum computing to its customers and tackle projects that solve problems by combining AI and quantum.
As you can see, while we continue to see advances in hardware and real applications, one thing is certain: quantum computing is not only the future, it is already here. So get ready for an exciting journey. Don't miss out!

Photo (cc) of a model of IBM Quantum System One, at Shin-Kawasaki for the University of Tokyo. IBM Research.

April 30, 2025

Quantum Intelligence (part I): quantum principles and market

Quantum computing is starting to be applied in areas like machine learning and optimization. Find out about the real possibilities of this technology for addressing complex problems and learn about current trends, from Quantum-as-a-Service to our position in the quantum ecosystem. I invite you to read a practical and realistic view of this field. In this first part we will introduce the field and talk about the quantum business.

______

Introduction to quantum computing

Quantum computing is a discipline that takes advantage of the principles of quantum physics to process information in a radically different way from classical computers. It relies on these three phenomena to encode and process information:

Figure 1: Physical principles of quantum computation.

Superposition allows a qubit (quantum bit) to exist in multiple states simultaneously. While a classical bit can only be 0 or 1, a qubit can be 0, 1 or a combination of both at the same time. It is as if a coin could be both heads and tails while it is still spinning, before it lands.

Quantum interference allows you to manipulate the probabilities of the possible outcomes of a calculation: certain outcomes can be strengthened and others cancelled out. It is as if we could influence how a coin will fall while it is still spinning in the air.

Entanglement is a phenomenon whereby two or more qubits become linked in such a way that their measurement outcomes are correlated, no matter how far apart they are. It is as if two spinning coins were synchronized to always fall on the same side, even when they are far apart.

Because qubits can exist in superposition (0 and 1 simultaneously) and become entangled with each other, a quantum computer can work with many possible solutions at once, a phenomenon called quantum parallelism. For example, the state of 50 qubits is described by 2^50 (about 10^15) amplitudes at the same time. A measurement, however, returns only one outcome, so useful algorithms rely on interference to make the right answers the most likely ones.
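The three phenomena can be sketched with a tiny two-qubit simulation written from scratch under our own simplifying assumptions: the state is a dictionary of amplitudes, the helper names are hypothetical, and there is no noise. A Hadamard gate creates superposition, a CNOT gate entangles the qubits, and repeated measurement (one sample per shot) shows the correlated outcomes. The last lines count the amplitudes a classical description of 50 qubits would need, assuming 16 bytes per complex amplitude (double precision).

```python
import math
import random
from collections import Counter

# From-scratch toy simulator; helper names are hypothetical, not a real SDK.
H = 1 / math.sqrt(2)


def apply_hadamard(state, qubit):
    """Hadamard gate: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    new = {}
    for bits, amp in state.items():
        for out in (0, 1):
            sign = -1 if bits[qubit] == 1 and out == 1 else 1
            key = bits[:qubit] + (out,) + bits[qubit + 1:]
            new[key] = new.get(key, 0) + sign * H * amp
    # Drop amplitudes that cancelled out (interference).
    return {k: v for k, v in new.items() if abs(v) > 1e-12}


def apply_cnot(state, control, target):
    """CNOT gate: flip the target qubit wherever the control qubit is 1."""
    new = {}
    for bits, amp in state.items():
        if bits[control] == 1:
            bits = bits[:target] + (1 - bits[target],) + bits[target + 1:]
        new[bits] = new.get(bits, 0) + amp
    return new


def measure(state, shots, rng):
    """Repeat the measurement `shots` times; outcome probability is |amplitude|^2."""
    outcomes = list(state)
    weights = [abs(state[o]) ** 2 for o in outcomes]
    samples = rng.choices(outcomes, weights=weights, k=shots)
    return Counter("".join(str(b) for b in s) for s in samples)


def amplitude_count(n_qubits):
    """A full classical description of n qubits needs 2^n amplitudes."""
    return 2 ** n_qubits


if __name__ == "__main__":
    state = {(0, 0): 1.0}              # both qubits start in |0>
    state = apply_hadamard(state, 0)   # superposition on qubit 0
    state = apply_cnot(state, 0, 1)    # entangle qubit 1 with qubit 0
    print(measure(state, shots=1000, rng=random.Random(7)))  # only '00' and '11'
    n = amplitude_count(50)
    # Assumes 16 bytes per amplitude (complex number in double precision).
    print(f"{n:,} amplitudes, about {n * 16 / 2**50:.0f} PiB")
    # -> 1,125,899,906,842,624 amplitudes, about 16 PiB
```

Each individual shot returns a single result, "00" or "11", never a mix and never "01" or "10"; the roughly 50/50 split only appears after many shots.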
Despite its potential, this technology faces some challenges:

Quantum decoherence: qubits lose their quantum state due to environmental interference (vibrations, temperature), which limits the time available for computation to microseconds.

Noise and errors: quantum operations have high error rates that accumulate quickly in complex circuits. Because of this, and because each measurement is probabilistic, every circuit has to be executed and measured thousands of times (each run is called a shot) to obtain reliable results.

Demanding infrastructure: superconducting qubits, such as IBM's, require cooling to near absolute zero (-273°C), isolation in vacuum chambers, and radiation shielding to minimize disturbances.

Building the chandelier

Unlike classical computers, quantum computers lack elements such as RAM or hard disks; their design focuses on maintaining quantum coherence and minimizing interference.

Superconducting qubits require temperatures close to absolute zero (-273°C) to operate. This is achieved with cryogenic systems based on helium (dilution refrigerators work with a mixture of the helium-3 and helium-4 isotopes). These ultra-low temperatures reduce the thermal motion of atoms, which is crucial for maintaining quantum states.

The dilution refrigerator is the structure that houses the entire system. It is the most iconic image of quantum computing and, because of its shape, it is informally called the chandelier.

The core of the system houses the qubits, usually superconducting circuits of niobium or aluminum deposited on silicon wafers. These are protected with electromagnetic shielding to prevent external interference. In addition, vacuum chambers eliminate vibrations and residual particles that could disturb the qubits. The quantum states of the qubits are manipulated with microwave pulses.

As you can see, these computers differ greatly from a conventional computer. Let us now see what the quantum market looks like.

The current quantum market

The quantum computing market has experienced growth in recent years.
This growth is divided into three key areas, as outlined in the World Economic Forum 2025 report:

Illustration 2: The Quantum Market.

The main goal of quantum security and communications is to mitigate the threats that quantum computing poses to classical cryptography. This includes the development of post-quantum cryptography (PQC), which resists quantum attacks, and quantum key distribution (QKD), which transmits keys securely using single or entangled photons. These technologies are crucial for protecting critical infrastructure such as banks and power grids.

Quantum sensors take advantage of properties such as entanglement and superposition to perform ultra-precise measurements. Technologies such as SQUIDs (superconducting quantum interference devices) can detect very weak magnetic fields. These sensors have applications in health or energy.

Quantum computing is being explored for its application in artificial intelligence, machine learning or optimization, among others. In optimization, its potential to analyze complex combinations in logistics and resource planning problems is being investigated. In machine learning, new ways of processing data in high-dimensional spaces are being studied, with possible applications in classification and predictive analysis.

Funding for this market, from both public and private sources, is focusing on two areas. On the one hand, investment in hardware and in the development of larger (more qubits), less noisy computers. Manufacturers are creating increasingly advanced systems, such as IBM with its Heron processors (first presented in 2023, with a 156-qubit revision following in 2024)[1]. On the other hand, Quantum-as-a-Service platforms (such as IBM Quantum) are becoming available that democratize access to quantum processors.

In the next post of the series, we will talk about where they are useful (and where they are not) and the tools available. Stay tuned!

___

[1] Although the number of qubits is not the only metric by which quantum computers are measured, it is the easiest to understand.
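In general, quantum volume is the preferred metric: it measures the largest square circuit (as many layers of operations as qubits) that a machine can run reliably, so it reflects both the number of qubits and their error rates. Speed is usually reported separately, for example as circuit layer operations per second (CLOPS).

Photo (cc) of a model of IBM Quantum System One at Shin-Kawasaki for the University of Tokyo. IBM Research.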

April 23, 2025

Decoding Data Spaces (part II): Technological Aspects

This article is part of a series dedicated to Data Spaces. We have already published a first article that offers a guide for companies and covers the main pillars of a data space, its roles, benefits and challenges. We invite you to consult it for a complete overview of the conceptual and strategic framework of Data Spaces. In this article we will focus on the technological aspects that set Data Spaces apart from other technologies, exploring key elements such as their main components and giving specific examples of sector Data Spaces, including EONA-X and Catena-X.

What differentiates Data Spaces from other technologies?

Data spaces aim to become a key pillar in driving the data economy in various sectors. However, what differentiates them from other technologies such as hyperscale clouds, cloud computing, data warehouses or data lakes? Let's take a look.

Differentiating technological aspects

At a technological level, we can highlight four aspects that are an intrinsic part of a Data Space and that differentiate it from other technologies:

Identity verification: Data Spaces use robust mechanisms to verify the identity of participants and ensure trust in transactions.

Clearing houses: a component that facilitates the exchange of data in a secure and transparent manner, acting as an intermediary between participants.

Marketplaces: they enable the discovery, purchase and sale of data (and other assets such as apps or services) in a secure and regulated way, creating value for participants.

Contracts: Data Spaces use smart contracts to automate access to and use of data, ensuring compliance with the conditions set by whoever offers the data, such as the purposes it can be used for or the regions it can be moved to. They are legally binding contracts.

Technological components

Data Spaces are composed of several technical components that work together to create a secure and decentralized environment.
Although each organization (such as IDSA or GAIA-X) defines its own technical standards for the elements that make up a Data Space, we can extract the common elements:

Connector: essential for communication, the Connector enables data providers and data consumers to exchange information within the ecosystem. It acts as a bridge between industrial data clouds, enterprise clouds, local applications or individual devices, connecting them to the ecosystem. It is usually installed by each participant on its own premises, although some companies offer connector services in the cloud or hosted in the Data Space itself.

Broker: facilitates the exchange of data through real-time search services. Its main function is to connect data providers with data consumers within the ecosystem.

Identity Provider: responsible for managing and authenticating the identities of participants within the Data Space. It ensures secure access to the ecosystem by controlling and verifying the identity of each user or entity interacting with the space.

App Store: a component that allows participants to discover and access various applications, data and services within the data space. It acts as a marketplace for digital services and innovations.

Vocabulary Center: it plays a crucial role in integrating domain-specific data vocabularies within the Data Space. It contributes to the standardization and harmonization of data formats and structures, facilitating interoperability between different information sources. Vocabularies are usually defined by business vertical or scope of application; the OMOP standard, for example, is available for the healthcare sector.

Beyond these core components, different organizations and developers include other elements to complement the ecosystem, such as software version repositories, data verification, etc.
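A minimal sketch of how these pieces could interact when a consumer requests data, written in Python under our own assumptions: the class names (IdentityProvider, Connector, Broker, UsageContract) are hypothetical and do not correspond to any IDSA, GAIA-X or connector product API, and the contract is reduced to a simple purpose and region check rather than a legally binding smart contract.

```python
import secrets
from dataclasses import dataclass, field

# Hypothetical toy model of a Data Space request flow; not a real connector API.


class IdentityProvider:
    """Hypothetical identity provider: registers participants and verifies tokens."""

    def __init__(self):
        self._participants = {}  # token -> participant name

    def register(self, participant):
        token = secrets.token_hex(16)
        self._participants[token] = participant
        return token

    def verify(self, token):
        if token not in self._participants:
            raise PermissionError("unknown participant")
        return self._participants[token]


@dataclass(frozen=True)
class UsageContract:
    """Conditions set by the data provider (illustrative fields only)."""
    allowed_purposes: frozenset
    allowed_regions: frozenset


@dataclass
class Connector:
    """Hypothetical provider-side connector: checks identity and contract first."""
    owner: str
    idp: IdentityProvider
    datasets: dict = field(default_factory=dict)  # name -> (data, contract)

    def publish(self, name, data, contract):
        self.datasets[name] = (data, contract)

    def request(self, token, name, purpose, region):
        consumer = self.idp.verify(token)     # 1. who is asking?
        data, contract = self.datasets[name]  # 2. what did the provider allow?
        if purpose not in contract.allowed_purposes:
            raise PermissionError(f"{consumer}: purpose '{purpose}' not allowed")
        if region not in contract.allowed_regions:
            raise PermissionError(f"{consumer}: region '{region}' not allowed")
        return data                           # 3. only now release the data


class Broker:
    """Hypothetical broker: a catalog that points consumers to the right connector."""

    def __init__(self):
        self._catalog = {}

    def register(self, name, connector):
        self._catalog[name] = connector

    def find(self, name):
        return self._catalog[name]


if __name__ == "__main__":
    idp = IdentityProvider()
    token = idp.register("logistics-co")
    provider = Connector(owner="carmaker", idp=idp)
    provider.publish("battery-telemetry", [3.71, 3.69, 3.70],
                     UsageContract(frozenset({"maintenance"}), frozenset({"EU"})))
    broker = Broker()
    broker.register("battery-telemetry", provider)

    connector = broker.find("battery-telemetry")
    print(connector.request(token, "battery-telemetry", "maintenance", "EU"))
    try:
        connector.request(token, "battery-telemetry", "marketing", "EU")
    except PermissionError as err:
        print("refused:", err)
```

Real deployments express these conditions as machine-readable usage policies exchanged between connectors through standard protocols; the sketch only shows the order of checks: identity first, then the provider's conditions, and only then the data.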
Examples of sector Data Spaces (EONA-X, Catena-X)

Data Spaces are no longer an abstract idea but a developing reality, with initiatives that aim to transform the way information is managed and shared. Data Spaces Radar provides a complete overview of the Data Spaces landscape worldwide.

Data Spaces Radar includes information on Data Spaces initiatives, use cases and relevant projects from platforms such as GAIA-X, IDSA, FIWARE and others. This central repository is a useful tool for understanding the evolution and scope of Data Spaces.

Below we explore some of the most prominent initiatives that are shaping the future of Data Spaces:

Catena-X: this collaborative and open data ecosystem emerged from the automotive industry. It connects global players across the entire value chain, from design to production to service. Catena-X promotes transparency and collaboration in data management, driving both innovation and efficiency in the automotive sector.

EONA-X: this project, part of the GAIA-X LightHouse ecosystem (lighthouse projects), seeks to create a trusted environment for data exchange in mobility, transportation and tourism. EONA-X focuses on optimizing multimodal travel, contributing to the reduction of emissions and the sustainability of transportation.

MDS – Mobility Data Space: another project of the GAIA-X LightHouse ecosystem, MDS focuses on sovereign data exchange in the mobility sector. It provides an ecosystem for developing innovative, sustainable and user-friendly mobility solutions, promoting fair and equitable participation in the data economy.

HEALTH-X dataLOFT: based on the dataLOFT platform, this GAIA-X LightHouse project seeks to make health data usable in a secure and transparent manner. HEALTH-X dataLOFT will enable the individual use of health data and the development of innovative business models in the healthcare industry.
These initiatives show the transformative potential of Data Spaces in a variety of sectors. As technology evolves and the demand for collaboration and transparency in data management increases, Data Spaces will play an increasingly important role in building a more connected and smarter future.

Now you know a bit more about the technology behind Data Spaces. In the next article we will try to sort out all the acronyms and organizations you have seen in this article (IDSA, GAIA-X, etc.).

AUTHORS

Santiago Morante, AI Alliances and Solutions Development Manager

Paula Valles, Data Sales Consulting

Image: rawpixel.com / Freepik.

September 16, 2024

Artificial Intelligence in Fiction: The Bestiary Chronicles, by Steve Coulson

It is common in the era of Artificial Intelligence (AI) to hear about how this technology can revolutionise different areas of human life, from medicine to manufacturing. In our previous posts, however, we have mainly dealt with the treatment of AIs in fictional films, series and books. Today we are going to shift focus and explore how generative Artificial Intelligences can be used in the creation of art. While the idea of using technology to create art may seem baffling to some, there are actually many ways in which AI can help artists generate ideas, experiment with different approaches and enrich their final works. In this post, we are going to look at how AIs can be used as tools to support the creative process of artists and content creators. If you are interested in finding out how Artificial Intelligences can help boost your creativity, keep reading!

Text generators

One of the best-known ways in which Artificial Intelligences can be used in the creation of art is through generative language models. These models, such as OpenAI's GPT-3, can generate text autonomously, following previously established instructions or language patterns. Generative language models do this through a technique known as pre-training, in which they are fed large amounts of text and learn to mimic its style and the way it is written. They can then use this to generate new text that follows the same pattern or style.

An example of a generative language model is ChatGPT, a model developed by OpenAI that was designed to simulate human conversations. ChatGPT has been used to generate dialogue in video games, chatbots and messaging applications.

Photo: Onur Binay / Unsplash

Another potential use for generative language models is in screenwriting and book writing. Fed with information about a specific topic or genre, these models could help writers generate ideas and structure their stories more efficiently.
However, it is important to keep in mind that these models still have their limitations and cannot completely replace the creative work of a human writer.

Generative language models are a powerful tool that can help artists and creators generate ideas and experiment with different approaches in their work. Although they still have their limitations, their potential is enormous, and it is likely that we will see more and more uses of these AIs in the future.

Image generators

Another way in which Artificial Intelligences can be used in the creation of art is through image generation models. These models, such as OpenAI's DALL-E, are able to generate images from a textual description or a set of keywords. Image generation models work in a similar way to generative language models: they are fed large numbers of images and learn to imitate their style and the way they are drawn. They can then use this to generate new images that follow the same pattern or style.

One example of an image generation model is Midjourney, developed by an independent company. Users can use Midjourney to create images from simple textual descriptions, such as "a cat sitting in a window". This can be useful for artists and designers looking for inspiration or a quick way to generate sketches and concepts.

Photo: Chen / Unsplash

Another potential use for image generation models is in book illustration or in the creation of graphs and diagrams. Fed with information about a specific theme or style, these models could help illustrators generate ideas and create images that fit a specific theme more efficiently. However, it is important to keep in mind that these models still have their limitations and cannot completely replace the creative work of a human illustrator.
The Bestiary Chronicles

On his blog, Chema Alonso describes the comic series "The Bestiary Chronicles" as an example of how generative AIs can be used in the creation of art. The series was created using Midjourney, an AI that allows artists to build the graphic style of a comic book one panel at a time, similar to models such as DALL-E 2 or Stable Diffusion.

Photo: Donovan Reeves / Unsplash

Stories from "The Bestiary Chronicles", such as "The Lesson", "Exodus" or "Summer Island", have shown the potential of generative AI as a tool for creating art. "Exodus" is a sci-fi odyssey that unfolds over 35 spectacular pages, all generated using Midjourney. "Summer Island" is a folk horror story that follows a photojournalist on assignment in a remote Scottish village, where he discovers that the villagers are hiding a dark secret. "The Lesson" is a dystopian story that follows the last remnants of humanity, gathered in an underground location to learn about the monsters that have destroyed their planet.

While the use of generative AI in the creation of art can have many advantages, such as the ability to experiment with different approaches and styles more quickly and efficiently, there are also disadvantages to consider. AI models, for example, still have their limitations and cannot completely replace the creative work of a human artist. It is also important to keep in mind that the use of generative AI in the creation of art may raise ethical and copyright issues.

Conclusion…

In conclusion, generative Artificial Intelligences can be used as tools to support the creative process of artists and content creators in different ways, such as generating text and images. Although they still have their limitations, these AIs have great potential, and we are likely to see more and more uses of them in the future. It is important to note, however, that the use of generative AI in the creation of art can also raise ethical and copyright issues.
Despite this, generative AI can be an efficient and useful way to experiment with different approaches and styles in art and content creation.

…And surprise! This entire post has been written using ChatGPT, and the images generated by Midjourney![1] This shows the great potential of generative AIs as support tools in the creative process and how they can be used to create high-quality content. We hope you enjoyed reading this post and learned something about the use of generative AIs in the creation of art!

Author's Note (human)

Let this little experiment serve, not as a mockery of the reader, but as a demonstration of the capabilities of the new AIs. While it is true that the text has been generated by ChatGPT, some human intelligence had to be applied when making the requests (with several repetitions in some cases). Rather than replacing artists, this shows that knowing what to ask an AI to do may be a new skill for content creators to develop.

[1] The images generated by Midjourney could not be included in the post for copyright reasons. The images included are from an image bank and are intended to represent the meaning of the text.

January 10, 2023

Artificial Intelligence in Fiction: The Circle (2017), by James Ponsoldt

Plot

The film The Circle by James Ponsoldt, based on Dave Eggers' novel of the same title, introduces Mae Holland (Emma Watson), a young intern who joins The Circle, a Big Tech company headed by Eamon Bailey (Tom Hanks) that develops everything from hardware to social media (any resemblance to real big tech is purely accidental). Dazzled by the company's apparent modernity and openness, Mae attends the corporate event at which a new technology is unveiled: cameras, placed everywhere, that broadcast in real time to social networks. From this point on, the plot descends, hand in hand with the main character, into the darker side of the loss of privacy and unregulated corporate practices.

https://www.youtube.com/watch?v=Z4wu6J6DLLM

The cult of AI technology

Somewhat reminiscent of 1984, the main theme of the film's plot is clearly privacy, which in the real world has been greatly affected, and even challenged, in the last decade, mainly because of social media.

If we have included this film in our section on Artificial Intelligence, it is not because the film is specifically about Artificial Intelligence (AI), but because of the cult of technology that underpins the plot. Technology serves in the film, in an obvious way, as a lever for The Circle to gain power. But this would not be possible without the mass of users who support it wholeheartedly. Technology for technology's sake. Technology as belonging to a group. The unquestioning defence of developments.

And this is where it connects with AI. AI is difficult to develop, and it is even more difficult to understand why it makes the decisions it does, especially with the new developments in Deep Learning. That is why there is a tendency to rely on AI uncritically and assume that its results are correct, particularly if they reinforce one's previous opinion on a subject.
I believe it is necessary to put any technological development into perspective, no matter how modern, trendy, attractive or cool it may seem, because the basis of technology is to make people's lives better. If it does not meet this objective, the technology is worthless. We should not develop for development's sake. This is one of the clearest messages the film sends us: it is pointless to develop technology if it does not improve people's lives.

Collective Intelligence as Artificial Intelligence

The technology presented in the film basically works through collective intelligence, i.e. many people looking at the images broadcast by the cameras, as in the scene (spoiler alert!) where people are identified using all the cameras in the world. It would be a logical step for this work to end up being done by an AI, but the film does not address this. In a sense, the mass of users performs the function of an artificial intelligence: it delivers results without judging what it is doing.

This way of working, doing small jobs manually and aggregating the results, already exists in the real world and is offered by several platforms, which pay small amounts of money to many people to do small tasks and then aggregate the results. Thus, collective intelligence becomes the source of data that AI feeds on, with users labelling the data so that AI can do its work. The users work for the AI, so that the AI can then work for the users. It is a curious circle, which in the film remains a semicircle, addressing only the first part.

Rating

The Circle is a dystopia that is not too far from our current reality. The film falls short in anticipating the use of AI in technology, resorting to collective intelligence instead; in that sense it is less ambitious than similar offerings such as Person of Interest. On the other hand, it offers a stark view of why companies push technologies that dilute people's privacy.
So that we can rank future films and series, we will rate the degree of realism of each technology presented, using a scale out of 5:

Artificial intelligence: 1/5 (it underestimates its use)

Other technologies: 5/5 (social media and cameras everywhere)

Result: 3/5 technological realism

Availability: The Circle is available on Prime Video.

November 24, 2022

AI & Data

AI in Science Fiction Films: A Recurring Pattern of Fascination and Horror

In today's post we are going to explore how Hollywood films have dealt with technological advances, especially robotics and Artificial Intelligence. You may be surprised to discover that many of these films follow the same pattern. It can be traced back to the beginning of the 20th century and became popular with Terminator. Let's start!

From the Jewish Golem to "Star Wars" C3PO

There is a narrative that has proved recurrent in the history of human culture: the creation of artificial life to use it for our benefit. There are plenty of examples, be it Mary Shelley's famous "Frankenstein" (1818) or the cute R2D2 and C3PO from Star Wars (see our previous post on "The Mandalorian"). This recurring narrative can be traced back to Jewish mythology and the clay Golem. The Golem comes to life when you place instructions written on a piece of paper in its mouth, and it performs the tasks you ask of it without complaint. It is certainly an interesting concept: being able to offload tasks onto another being that neither suffers nor questions the task. It is, to say the least, useful, and that is why we find this story repeated in all areas of culture, including cinema.

This is where a literary genre comes into play: one based on telling fictional stories set in a context where technology and science have evolved beyond our reality. This is what we know as Science Fiction. When the Golem narrative and Science Fiction come together, we get robot books and movies.

The "R.U.R. pattern" common in Science Fiction

Not all films about robots and artificial intelligence treat the subject in exactly the same way. Even so, we do find a recurring pattern, especially in the Western world, which goes something like this:

1. Humans have developed technology to the point where they can create an entity capable of performing tasks autonomously.
2. Someone (a company, an army or an individual) decides to put this entity in charge of a critical asset or process for humanity, with the excuse of improving productivity, reliability or profitability.
3. The entity develops autonomously beyond what its designers expected.
4. The entity decides that human beings are an obstacle to its new vision of the universe and must be eliminated, imprisoned or subjugated so that they do not get in the way.
5. And we could add that the entity is difficult to shut down or destroy, and no one has thought to put a safety mechanism in place.

I'm sure that reading these points has made you think of a film you have seen or a book you have read. That is normal: this pattern is repeated more often than we realise. In fact, we can already find it in the first work to use the word robot (from the Czech word robota, meaning forced labour). This work is the play "R.U.R." (1920) by the Czech writer Karel Čapek. It tells the story of a company that manufactures artificial beings (1) to reduce the workload of humans (2). At this point, Harry Domin and Helena Glory, the owner of the robot factory and his wife respectively, decide to endow the beings with feelings. The robots then develop beyond their initial purpose (3): they become aware of the slavery to which humans subject them, start a rebellion and conquer the planet (4). The story ends with all humans eliminated from Earth, because the robots were too strong and had no weak points (5).

Now that we know the pattern, which we will call the "R.U.R. pattern" in honour of its origin, let's take a spin around Hollywood.

A (non-exhaustive) list of films that follow the R.U.R. pattern (Spoiler alert!)

If a film appears in this list, you can already imagine its plot. If you prefer not to know, skip the following section!

"The Terminator" (1984): Probably the most famous, and the one that made the genre fashionable. It tells how Skynet, a military AI, takes control of all machines and computers with the aim of exterminating humans. Good thing we have Schwarzenegger on our side.
"Small Soldiers" (1998): As fate would have it, a military chip ends up in articulated toy figures which, once they become conscious, decide to wipe out the human race.
"The Matrix" (1999): Once they become self-aware, the machines decide to wipe out the human race. Humans decide to cut off their power source (the sun), and the machines, in return, start harvesting humans and using them as batteries while keeping them in a simulation.
"Red Planet" (2000): A friendly robot dog accompanies astronauts on their exploration of Mars, but due to a glitch in its military programming, it decides to wipe out all human beings.
"I, Robot" (2004): A corporate AI decides that humans must be protected from themselves and, surprise surprise, tries to imprison them all.
"Stealth" (2005): A secret military programme puts an AI at the controls of a plane capable of starting a nuclear war, and the plane decides to stop obeying orders.
"Eagle Eye" (2008): A highly advanced military AI coordinates the lives of many people to overthrow a government it sees as impeding its plans for world domination.
"Echelon Conspiracy" (2009): Word for word the same plot as Eagle Eye.
"TRON: Legacy" (2010): A world in which the ruling Artificial Intelligences have evolved to the point of enslaving all beings. Fortunately for us, in this case the domination is confined to a subatomic world.
"Avengers: Age of Ultron" (2015): A half-alien, half-computer robot aims to take over the entire planet and wipe out humans.
"Westworld" (2016): In this case, the robots are created for recreational purposes, until they realise what they are... and rebel.
"I Am Mother" (2019): Human-created AIs see how humans are destroying themselves and decide to wipe the slate clean of humans, to the humans' regret, of course.

I'm sure we could go on like this for a bit longer, but the point has been made: we are fascinated by technology, but we are afraid of no longer understanding it. I'm sure we'll continue to see films in the future that follow this pattern. I hope you recognise it when you see it!

Micro epilogue: The Eastern vision

To be fair, I have to admit that this is the Western view of the genre. In the East there is a completely different view of robots: they tend to be the good guys in the story, here to help us. But that will be for a different post. I'll be back!

Note: If you know more films that follow this pattern, let us know in the comments!

May 12, 2022

AI & Data

Deep Learning in Star Wars: May Computation Be With You

Today we are going to talk about how Artificial Intelligence, and Deep Learning in particular, is being used in the making of movies and series. It is achieving better special effects than any studio had ever achieved before. And is there any better way to prove it than with one of the greatest science fiction sagas, Star Wars? To paraphrase Master Yoda: "Read or do not read. There is no try."

Deepfake Facial Rejuvenation

Since the release of A New Hope in 1977, a multitude of Star Wars films and series have been made, without following a continuous chronological order. This means that characters first played when the actors were young have to be played again many years later... by the same actors, who are no longer that young. Hollywood has solved this problem with "classic" special effects such as CGI. However, advances in Deep Learning have led to a curious situation: fans with home computers have managed to match or even improve on the work of these studios. One example is DeepFake technology.

DeepFake is an umbrella term for neural network architectures trained to replace a face in an image with that of another person. Among the architectures used are autoencoders and generative adversarial networks (GANs). Since 2018 this technology has developed rapidly. Websites, apps and ready-to-use open-source projects have lowered the barrier to entry for any user who wants to try it out.

And how does this relate to Star Wars? On December 18th, 2020, episode 8 of season 2 of The Mandalorian was released. It included a scene with a computer-generated "young" Luke Skywalker (the original actor, Mark Hamill, was 69 years old at the time).
Just 3 days later, the youtuber Shamook uploaded a video comparing the facial rejuvenation by Industrial Light & Magic (responsible for the special effects) with the one he had done himself using DeepFake:

https://www.youtube.com/watch?v=wrHXA2cSpNU

As you have seen, a single person working for 3 days improved on the special effects studio's result, even though the studio had also used DeepFake in combination with other techniques. Moreover, Shamook did this using two open-source projects, DeepFaceLab and MachineVideoEditor. The same author made other substitutions in recent Star Wars films. One was Governor Tarkin in Rogue One (the original actor, Peter Cushing, died in 1994). Another was Han Solo in the film of the same name, where a new actor was hired instead of rejuvenating Harrison Ford. These showed that the DeepFake technique generalised very well to other films.

https://www.youtube.com/watch?v=_CXMb_MO3aw
https://www.youtube.com/watch?v=bC3uH4Xw4Xo

These videos, which went viral, did not go unnoticed by Lucasfilm, which a few months later hired the youtuber as a Senior Facial Capture Artist. Outside the Star Wars universe, Shamook has done face replacements in many other films, usually putting actors into films they have nothing to do with, with hilarious results.

Upscaling models to improve the quality of old videos

But rejuvenating faces is not the only use that Deep Learning can offer film studios. Another type of model, called upscaling models, is trained to improve the resolution of images and videos (and video games). This is useful when you want to remaster, for example, old films that were digitised in the past and cannot easily be upscaled. Fans, again, have taken the lead and are improving the quality of old Star Wars video game trailers using these technologies.
https://www.youtube.com/watch?v=UD0yY4Grc_A

Some have even dared to restore deleted scenes from the first films, and provide tutorials so that anyone brave enough can keep improving on their results.

https://www.youtube.com/watch?v=2BcoLsuJMP0

In short, Deep Learning models are changing the way many businesses operate. The film industry has a wide range of options for improving its processes with Machine Learning, which will allow us to enjoy increasingly realistic and spectacular effects.

Note: you can see the process behind Luke Skywalker's rejuvenation in The Mandalorian in episode 2 of season 2 ("Making of the Season 2 Finale") of the docuseries "Disney Gallery: Star Wars: The Mandalorian", available on Disney+.

March 31, 2022

AI & Data

Artificial Intelligence in fiction: Eva, by Kike Maíllo

Are you afraid your food processor will rebel and poison your lentils? Do you think your Roomba is watching you? Do you wear a hat when you see a drone? Then this is the place for you. Today we begin a series of articles on the treatment of Artificial Intelligence in films and series. We will analyse it from the point of view of the current state of technology and assess how realistic the emerging technologies shown are. And why should you believe me? Let's just say that until a few years ago I was doing research on robots, simulating them, programming them, and I even wrote a dissertation on them... Shall we start?

Plot

We open this series with a little-known Spanish film, Eva (2011) by director Kike Maíllo, which is probably my favourite film in terms of its treatment of robotics. The film is set in the near future, 2041, in a world where humans coexist with robots that are used for repetitive or heavy tasks, such as cleaning or carrying groceries. In this context, Alex (Daniel Brühl), a cybernetics and robotics engineer, returns to his village after a long absence. The university has commissioned him to create the first child robot. The film focuses on the relationships between the main character and the family he left behind, and features some surprising plot twists.

https://www.youtube.com/watch?v=46aIZRK2T2Y

Robotics that already exists

In the film, Artificial Intelligence and robotics are treated in two very different ways. On the one hand, we have the majority of robots, whose physical structure and intelligence are completely realistic and feasible today or in the near future. They range from mobile-base assistants, such as the PR2, to quadruped cargo-carrying robots, such as those produced by Boston Dynamics. These robots represent the commercial version of what currently exists in universities or certain industries. Of particular note is Alex's robot cat, which is almost a stylised version of Spot.
The robotics that we wish existed

On the other hand, there are a few robots, such as the assistant Max (Lluís Homar), whose intelligence, appearance, feelings and movements rival those of humans. These robots are the most futuristic part. Let's analyse their characteristics in detail.

Let's start with movement. Realistic and stable robot locomotion is a long-standing research topic that, apart from Boston Dynamics with Atlas, no one has managed to solve adequately.

A realistic outward appearance, to the point of being mistaken for a human, is an area where art and engineering go hand in hand. Some current developments may momentarily fool a naïve (or myopic) observer, but none withstand the test of facial movement: it quickly becomes obvious that we are not looking at a person.

As for feelings... what exactly are they? Appropriate external expressions in response to certain events? In that case, there is a field of study that, together with the previous point, will at some point achieve realistic results. But if you consider feelings to be more than just facial expressions, then I'm afraid engineering hasn't got that far. Some studies treat artificial emotions as drives that the robot seeks to keep within a range (such as being happy and not sad, for example). The problem lies in determining what it means for a robot to be happy or sad (should being angry or elated affect its decisions?). It is equally unclear how situations should influence it (does it become sad if its battery runs out?). Thinking further, why would we want a robot with this functionality? What does it bring to its job? We could end up with depressed robots going to the robopsychologist.

In short, the intelligence that the film attributes to these quasi-human robots goes far beyond what engineering has achieved so far, and it is the least credible aspect of the technology presented.
Retrofuture

Other technologies not directly related to artificial intelligence are inspired by technologies that already exist or are expected to exist soon. One example is visual touch interfaces for controlling the computer (bear in mind that the film is from 2011 and that the Microsoft Surface, for example, was launched in 2012). Another is navigation indicators projected onto the car windscreen, like the head-up displays in some vehicles. As for the holograms the protagonist uses to watch videos or to design the robot's brain, it has not yet been possible to do without a physical medium. However, it does not seem so far-fetched to think that by 2041 there could be a more or less functional version of what we see on screen.

Rating

Eva is a great film that integrates advanced but manageable technology into a recognisable everyday environment. With the exception of a few overly fantastical elements, it achieves a good overall sense of realism. In order to be able to make a ranking of films and series in the future, we are going to rate the degree of realism of each technology presented, using a scale (out of 5):

Artificial Intelligence: 5/5 for non-humanoid robots and 2/5 for humanoid robots, so 3.5/5 on average
Robotics: 4.5/5
Other technologies: 4/5
Result: 4/5 technological realism

Availability: Eva was available on Filmin but is no longer on the major streaming platforms. It can be found on Amazon.

December 23, 2021

AI & Data

Warning About Normalizing Data

For many machine learning algorithms, normalizing the data before analysis is a must. A supervised example is neural networks: it is well known that normalizing the data fed into the network improves the results. If you don't believe me, that's OK (no offense taken). You may prefer to believe Yann LeCun (Director of AI Research at Facebook and founding father of convolutional networks): check section 4.3 of this paper ("Efficient BackProp"):

"Convergence [of backprop] is usually faster if the average of each input variable over the training set is close to zero."

One reason among others is how the network corrects the error made in a prediction. It updates the weights by an amount proportional to the input vector, which is bad if the inputs are large.

Another example, in this case of an unsupervised algorithm, is K-means. This algorithm tries to group data into clusters so that the data in each cluster share some common characteristics. It alternates two steps:

1. Place the cluster centers at some point in space (randomly on the first iteration, and at the centroid of each cluster on subsequent iterations).
2. Assign each point to the closest center.

In this second step, the distances between each point and the centers are usually calculated as a Minkowski distance (most commonly the famous Euclidean distance). Every feature carries the same weight in this calculation, so features measured on large scales influence the result more than those measured on small scales. For example, the same feature would have more influence if measured in millimeters than in kilometers, because the numbers would be bigger. So the features must be on comparable scales.

Now that you know normalization is important, let's see what options we have to normalize our data.

A couple of ways to normalize data

Feature scaling

Each feature is normalized within its own limits:

Figure 1, normalization formula: $x' = \frac{x - \min(x)}{\max(x) - \min(x)}$

This is a common technique used to scale data into a range.
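The K-means argument about units can be checked with a few numbers. The sketch below is a minimal illustration with NumPy, not the author's code. The two "centers" and the point are hypothetical values chosen so that only the unit of the length feature changes, and `min_max_scale` implements the formula in Figure 1. Points and centers are scaled together as one tiny matrix. That is a simplification: in a real pipeline you would scale the data first and let K-means compute the centers in the scaled space.

```python
import numpy as np


def nearest_center(point, centers):
    """K-means assignment step: index of the closest center (Euclidean distance)."""
    distances = np.linalg.norm(centers - point, axis=1)
    return int(np.argmin(distances))


def min_max_scale(X):
    """Feature scaling, column by column: x' = (x - min) / (max - min)."""
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    return (X - col_min) / col_range


# Hypothetical toy data: column 0 is a length, column 1 a score in [0, 1].
# Rows 0 and 1 play the role of cluster centers, row 2 is the point to assign.
data_km = np.array([
    [1.000, 0.9],  # center 0
    [1.002, 0.1],  # center 1
    [1.000, 0.2],  # point
])
# Same measurements, with the length expressed in millimeters instead.
data_mm = data_km * np.array([1_000_000.0, 1.0])

for unit, data in [("km", data_km), ("mm", data_mm)]:
    print(unit, "-> center", nearest_center(data[2], data[:2]))

# After feature scaling the unit no longer matters: both matrices coincide.
scaled_km = min_max_scale(data_km)
scaled_mm = min_max_scale(data_mm)
print("scaled -> center", nearest_center(scaled_km[2], scaled_km[:2]))
```

With these numbers, the point joins center 1 when lengths are in kilometers, because the score difference dominates. It joins center 0 when they are in millimeters, because the length difference dominates. After min-max scaling, it joins center 0 regardless of the unit.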
But there is a problem with normalizing each feature within its empirical limits (so that the maximum and the minimum are taken from the column itself): noise may be amplified. One example: imagine we have Internet data from a particular house and we want to build a model to predict something (maybe the price to charge). One of our hypothetical features could be the bandwidth of the fiber optic connection. Suppose the house purchased a 30 Mbit/s Internet connection, so the bit rate is approximately the same every time we measure it (lucky guy).

Figure 2, Connection speed over 50 days

It looks like a pretty stable connection, right? As the bandwidth is measured on a scale far from 1, let us scale it between 0 and 1 using our feature scaling method (sklearn.preprocessing.MinMaxScaler).

Figure 3, Connection speed per day on a 0-1 scale

After scaling, our data is distorted. What was an almost flat signal now looks like a connection with a lot of variation. This tells us that feature scaling is not suitable for nearly constant signals.

Standard scaler

Next try. OK, scaling into a range didn't work for a noisy flat signal, but what about standardizing it? Each feature would be normalized by:

Figure 4, Standard scaling formula: $x' = \frac{x - \mu}{\sigma}$, where $\mu$ is the mean and $\sigma$ the standard deviation of the feature

This could work for the previous case, but don't open the champagne yet. The mean and standard deviation are very sensitive to outliers (small demonstration). This means that outliers may squash the non-outlier part of the data. Now imagine we have data about how often the word "hangover" is posted on Facebook (for real). The frequency behaves like a periodic wave, with lows during weekdays and highs at weekends. It also has big outliers after Halloween and similar dates. We have idealized this situation with the following data set (3 parties in 50 days. Not bad).

Figure 5, Number of times the word "hangover" is used on Facebook per day
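Both problems in this section can be reproduced in a few lines, as a minimal NumPy sketch. The 50 "connection speed" measurements are hypothetical: 30 Mbit/s plus small Gaussian noise whose level is an assumption, not the article's data. The coefficient of variation (standard deviation divided by mean) stands in for "how much the signal seems to wobble". The second part is a small demonstration of outlier sensitivity using made-up values: one spike moves the mean and standard deviation a lot, while the median barely changes.

```python
import numpy as np


def min_max_scale(x):
    """Feature scaling to [0, 1] using the signal's own (empirical) min and max."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())


def coefficient_of_variation(x):
    """Standard deviation relative to the mean: how much a signal seems to wobble."""
    return float(np.std(x) / np.mean(x))


# Hypothetical stand-in for the connection data: 50 daily measurements of a
# 30 Mbit/s line with small measurement noise (the noise level is an assumption).
rng = np.random.default_rng(seed=0)
speed = 30.0 + rng.normal(loc=0.0, scale=0.05, size=50)
scaled_speed = min_max_scale(speed)

print(f"raw:    CV = {coefficient_of_variation(speed):.4f}")
print(f"scaled: CV = {coefficient_of_variation(scaled_speed):.4f}")

# Small demonstration of outlier sensitivity: one spike drags the mean and
# inflates the standard deviation, while the median barely moves.
calm = np.array([1.0, 2.0, 1.0, 2.0, 1.0, 2.0, 1.0, 2.0])
spiky = np.append(calm, 50.0)
for name, values in [("calm", calm), ("spiky", spiky)]:
    print(f"{name:5s} mean={values.mean():.2f} std={values.std():.2f} "
          f"median={np.median(values):.2f}")
```

After min-max scaling, the relative variation of the nearly flat signal grows by more than two orders of magnitude, because the tiny noise band is stretched to fill the whole [0, 1] range. In the second part, the spike raises the mean from 1.5 to about 6.9 and the standard deviation from 0.5 to about 15, while the median only moves from 1.5 to 2.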
Despite the outliers, we would like to be able to see clearly that there is a measurable difference between weekdays and weekends. Now we want to predict something (that's our business) and we would like to preserve the fact that values are higher at weekends, so we think of standardizing the data (sklearn.preprocessing.StandardScaler). We check the basic parameters of the standardization.

Figure 6, standardization of the above data is not a good choice

What happened? First, the data is not scaled between 0 and 1. Second, we now have negative numbers, which is not a dead end but complicates the analysis. And third, we can no longer clearly distinguish weekdays from weekends (all values are close to 0), because the outliers have interfered with the data. What was a very promising feature is now almost irrelevant. One solution to this situation could be to pre-process the data and eliminate the outliers (things change with outliers).

Scaling over the maximum value

The next idea that comes to mind is to scale the data by dividing it by its maximum value. Let's see how it behaves with our data sets (sklearn.preprocessing.MaxAbsScaler).

Figure 7, data divided by maximum value
Figure 8, data scaled over the maximum

Good! Our data is in the range [0, 1]... But wait. What happened to the differences between weekdays and weekends? They are all close to zero! As with standardization, outliers flatten the differences among the data when scaling over the maximum.

Normalizer

The next tool in the data scientist's toolbox is to normalize samples individually to unit norm (check this if you don't remember what a norm is).

Figure 9, samples individually normalized to unit norm

Does this data ring a bell? Let's normalize it (here by hand, but it is also available as sklearn.preprocessing.Normalizer).
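Here is a minimal sketch of the three scalers discussed so far, written by hand in NumPy. It measures how much of the weekday/weekend difference each one keeps. The series is a hypothetical idealization in the spirit of Figure 5, not the article's data: 10 on weekdays, 30 on weekends, and spikes of 300 on three weekend days. The functions mirror what StandardScaler, MaxAbsScaler and Normalizer compute for a single feature. They are not calls to scikit-learn. Note that sklearn's Normalizer works row by row, so the whole series would have to be passed as a single row to match `unit_l2_norm`.

```python
import numpy as np

# Hypothetical idealized "hangover" series: 50 days, a weekly pattern
# (10 on weekdays, 30 on weekends) plus three party spikes of 300.
# These numbers are assumptions chosen for illustration, not the article's data.
days = np.arange(50)
is_weekend = np.isin(days % 7, [5, 6])
series = np.where(is_weekend, 30.0, 10.0)
party_days = [12, 26, 40]  # all three fall on weekend days
with_parties = series.copy()
with_parties[party_days] = 300.0
not_party = ~np.isin(days, party_days)


def standardize(x):
    """(x - mean) / std, what StandardScaler computes for a single column."""
    return (x - x.mean()) / x.std()


def scale_by_max_abs(x):
    """x / max(|x|), what MaxAbsScaler computes for a single column."""
    return x / np.abs(x).max()


def unit_l2_norm(x):
    """x / ||x||_2, treating the whole series as one sample (Normalizer works per row)."""
    return x / np.linalg.norm(x)


def weekend_gap(scaled, weekend, keep):
    """Mean weekend value minus mean weekday value, over the rows selected by `keep`."""
    return scaled[weekend & keep].mean() - scaled[~weekend & keep].mean()


for name, scaler in [("standard", standardize),
                     ("max-abs", scale_by_max_abs),
                     ("unit norm", unit_l2_norm)]:
    gap_clean = weekend_gap(scaler(series), is_weekend, not_party)
    gap_party = weekend_gap(scaler(with_parties), is_weekend, not_party)
    print(f"{name:9s} gap without parties: {gap_clean:.3f}   with parties: {gap_party:.3f}")
```

All three are linear rescalings, so none of them can separate the weekly pattern from the spikes. The three party days simply inflate the divisor (standard deviation, maximum or norm), and the ordinary weekday/weekend gap shrinks by a factor of roughly 4 to 10. With these assumed values, the unit-norm version puts the highest spike at about 0.56 rather than 1; the article's own data gives 0.74.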
Figure 10, the normalized data

At this point in the post you know the story, but this case is even worse than the previous one. We don't even get the highest outlier at 1: it is scaled to 0.74, which flattens the rest of the data even more.

Robust scaler

The last option we are going to evaluate is the robust scaler. This method removes the median and scales the data according to the interquartile range (IQR). It is supposed to be robust to outliers.

Figure 11, data with the median removed and scaled by the IQR
Figure 12, use of the robust scaler

You may not see it in the plot (but you can see it in the output), but this scaler introduced negative numbers and did not limit the data to the range [0, 1]. (OK, I quit.)

There are other methods to normalize your data (based on PCA, taking into account possible physical boundaries, etc.), but now you know how to evaluate whether a normalization method is going to distort your data.

Things to remember (basically, know your data)

Normalization may (possibly dangerously) distort your data. There is no ideal method to normalize or scale every data set. Thus it is the job of the data scientist to know how the data is distributed, know whether there are outliers, check ranges, know the physical limits (if any) and so on. With this knowledge, one can select the best technique to normalize each feature, probably using a different method for each one. If you know nothing about your data, I would recommend first checking for outliers (removing them if necessary) and then scaling each feature over its maximum (while crossing your fingers).
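The robust scaler and the closing recommendation can be sketched on the same kind of hypothetical series: 10 on weekdays, 30 on weekends, spikes of 300 on three weekend days, with all values assumed. `robust_scale` mirrors what RobustScaler computes by default: the median is subtracted and the result is divided by the 25th to 75th percentile range. `drop_outliers_then_max_scale` is a hypothetical helper for the "remove outliers, then scale over the maximum" recipe. It flags outliers with Tukey's 1.5 × IQR fences, which is our choice: the post does not say how outliers should be detected.

```python
import numpy as np

# Same kind of hypothetical series as before (values are assumptions): 10 on
# weekdays, 30 on weekends, and party spikes of 300 on three weekend days.
days = np.arange(50)
is_weekend = np.isin(days % 7, [5, 6])
party = np.isin(days, [12, 26, 40])
series = np.where(is_weekend, 30.0, 10.0)
series[party] = 300.0


def robust_scale(x):
    """(x - median) / IQR, mirroring RobustScaler's default 25th-75th percentile range."""
    q1, q3 = np.percentile(x, [25, 75])
    return (x - np.median(x)) / (q3 - q1)


def drop_outliers_then_max_scale(x):
    """Hypothetical helper for the fallback recipe: remove outliers, then divide
    by the maximum. Outliers are flagged with Tukey's 1.5 * IQR fences, which
    is an assumption: the post does not prescribe a specific outlier test."""
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    keep = (x >= q1 - 1.5 * iqr) & (x <= q3 + 1.5 * iqr)
    kept = x[keep]
    return kept / np.abs(kept).max(), keep


robust = robust_scale(series)
robust_gap = robust[is_weekend & ~party].mean() - robust[~is_weekend].mean()
print(f"robust:  range [{robust.min():.2f}, {robust.max():.2f}], weekend gap {robust_gap:.2f}")

clean, keep = drop_outliers_then_max_scale(series)
weekend_kept = is_weekend[keep]
clean_gap = clean[weekend_kept].mean() - clean[~weekend_kept].mean()
print(f"cleaned: range [{clean.min():.2f}, {clean.max():.2f}], "
      f"weekend gap {clean_gap:.2f}, kept {keep.sum()} of {series.size} days")
```

The robust scaler keeps a clear weekday/weekend gap (exactly 1 IQR here), but the spikes end up far above 1. Removing the three flagged days and then dividing by the maximum yields values in [0, 1] with a gap of about 0.67. In this synthetic series the median coincides with the weekday level, so no negative values appear. With data whose median lies above its minimum, the robust scaler produces negatives, as in the article's plot.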

November 1, 2018
