Ethics in AI and Machine Learning: The gray areas of sensitive variables

Machine learning models use any information provided, regardless of its discriminatory or unfair nature; therefore, human oversight in their development and use is essential.

The more information we provide to a model, the more patterns it can find and, in general, the more accurate it becomes. However, some patterns in historical data reflect past discrimination and will therefore lead to unfair and unethical results.

Accuracy and ethics are two sides of the same coin in machine learning.

A priori, given the growing concern with the responsible use of AI, it seems sensible to respond intuitively by mitigating or discarding the use of sensitive variables or "proxies".

In other words, the instinct is to "sterilize" the training data so that undesirable patterns do not prevail, even at the cost of a drop in accuracy. However, as we shall see, whether this is appropriate depends on the use case.

This is a crucial point in machine-learning solutions that combine different temporal contexts. In certain domains (such as education, employment, personnel selection, migration or health), it can be argued that diagnostic or profiling models, which describe the present, may use some information that is not desirable in estimation or prescription models, which shape the future.

"Regulated" liability (AI Act)

The AI Act classifies these areas (education, health, security, etc.) as High Risk, along with, more generally, any system whose decisions carry a "risk to health, safety or fundamental rights".

For these systems, a series of requirements is established, such as risk management, data governance and management, information for users and human oversight, without expressly mentioning sensitive variables.

A system is raised to the Prohibited category if the AI "exploits vulnerabilities of a specific group of people because of their age, disability or social or economic situation in a way that distorts the behavior of these people and is likely to cause harm to them or others".

This means that sensitive variables can be used with AI in a responsible manner if they do not harm people in any way, do not entail the above-mentioned risks and meet the legal requirements.

Taking a broader view, excluding certain sensitive information could itself harm certain groups, for example those requiring special attention.

Diagnosis, the X-Ray of the present

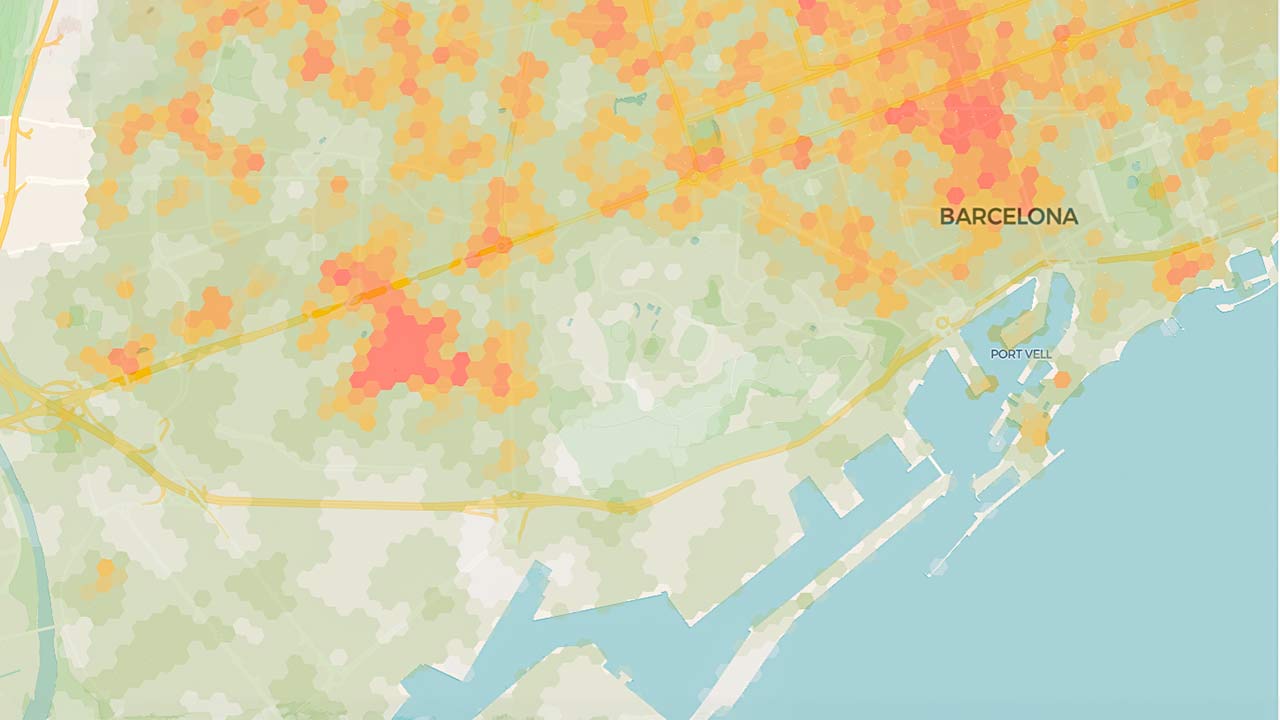

To explain or help understand the current situation, the applicable use case is diagnosis or profiling. It acts as a snapshot in which accuracy is key: when analyzing historical and current data, we want a true and realistic picture. If sensitive variables such as age, gender, geographic location or socioeconomic status influence the results, we will want to keep them. Age and gender, for example, can be determining factors in diagnosing disease.

With the responsible use of AI in mind, in fields such as education or employment we will look for alternative explanatory variables that avoid perpetuating patterns. To guard against ageism, for example, we can explore objective proxies such as "time since last job", "time since highest degree" or "years of experience".

However, if accuracy is paramount in the diagnosis, because we need the most realistic picture of the present situation, it may be justifiable to use the variable directly (in this example, age).

Diagnosis helps us to understand the current situation, derived from present and past information.

Let's imagine that we are creating a machine learning system in the health sector, particularly for the detection and treatment of diseases. Let's say our goal is to develop a model for identifying heart disease.

In this diagnostic process, we may need to consider sensitive variables such as age, gender and race, as these factors may be relevant to certain types of heart disease. Older men, for example, may be at higher risk for certain types of heart disease. Therefore, to achieve an accurate diagnosis, it is essential that these variables are included in our model.

Prescription: responsibly building the future

Looking ahead, high-risk systems must avoid becoming prohibited ones. As we have seen, the responsible use of AI entails avoiding harm, for example, from the perpetuation of existing patterns in the data.

From this perspective, when prescribing we will look for models that avoid bias, even at the cost of some accuracy. As with everything else in the data context, this impact can be measured with metrics, which in turn support the decisions derived from it.
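As a rough illustration of measuring that trade-off, the sketch below compares two hypothetical sets of predictions: one from a model that keeps a sensitive variable and one from a model that drops it. The data, the group labels and the function names are all invented for the example. Demographic parity difference is only one of several possible fairness metrics and is chosen here as an assumption, not as the metric this article prescribes.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def selection_rates(y_pred, groups):
    """Share of positive predictions (1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: two groups of four people each.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 1, 0, 1, 0, 0, 0]

# Hypothetical predictions from a model that uses the sensitive variable...
pred_with = [1, 1, 1, 0, 1, 0, 0, 0]
# ...and from a model trained without it.
pred_without = [1, 1, 0, 0, 1, 1, 0, 0]

for name, preds in [("with", pred_with), ("without", pred_without)]:
    print(
        name,
        "accuracy:", accuracy(y_true, preds),
        "parity gap:", demographic_parity_difference(preds, groups),
    )
```

In this invented example, dropping the variable lowers accuracy from 1.0 to 0.75 while the parity gap falls from 0.5 to 0.0. Putting both numbers side by side is what allows the decision to be argued rather than assumed.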

We must prioritize ethics and consider the implications of our decisions when making prescriptions.

Returning to the healthcare example, once we have diagnosed the disease, our next step is to prescribe a treatment plan. At this point, since all patients should have access to the same high-quality treatments and we do not want factors such as gender, race or age to influence the type of treatment that is prescribed, we will need to remove these variables.

✅ It should also be remembered that removing sensitive variables from a machine learning model does not guarantee that bias and discrimination are avoided, so this information should still be kept aside for further analysis.
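One reason removal is not enough is that the remaining features can act as proxies for the variable that was dropped. The sketch below is a minimal check under stated assumptions: the feature names, the values and the 0.7 threshold are hypothetical, and a plain Pearson correlation only detects linear, single-feature proxies. It keeps the sensitive variable out of the model but uses it afterwards to audit the features that stay in.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences.

    Assumes neither sequence is constant (otherwise the denominator is zero).
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)


def flag_proxies(features, sensitive, threshold=0.7):
    """Names of features whose |correlation| with the sensitive variable
    reaches the (arbitrary, illustrative) threshold."""
    return sorted(
        name
        for name, values in features.items()
        if abs(pearson(values, sensitive)) >= threshold
    )


# Hypothetical data: age was removed from the model inputs but kept for auditing.
age = [25, 32, 41, 48, 55, 63]
features = {
    "years_of_experience": [2, 8, 15, 24, 30, 38],
    "commute_km": [12, 3, 20, 7, 15, 9],
}

flagged = flag_proxies(features, age)
print("Possible proxies for age:", flagged)
```

Here "years_of_experience" is flagged because it tracks age almost perfectly in the toy data, while "commute_km" is not. A flagged feature is not automatically unusable; it is a prompt to measure its effect on the outcomes.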

Model results can also be combined: prescriptions can draw on a diagnosis together with forward-looking estimates of context, for example by making a "present" diagnosis and then adjusting it to a "future" context.

Conclusion

Since it is technically impossible to "sterilize" real data sets of undesirable patterns, the priority is to pay attention to the impact of sensitive information that may cause harm to any group.

To achieve this, data analysis focused on sensitive variables is required. This includes exploring their use as predictors within the model, masked behind proxies, or as an analytical contrast using plots such as partial dependence plots (PDP) or individual conditional expectation (ICE) curves, as well as measuring the impact of their mitigation through metrics.
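For readers unfamiliar with PDP and ICE, the following sketch shows the idea in plain Python. An ICE curve takes one individual, sweeps a single feature over a grid of values while holding everything else fixed, and records the model's prediction at each step; the PDP is the average of those curves. The scoring function, the feature names and the data are hypothetical stand-ins for a trained model, not part of any real system.

```python
def ice_curves(predict, rows, feature, grid):
    """One curve per row: predictions as `feature` is swept over `grid`
    while the row's other values are held fixed."""
    return [[predict({**row, feature: value}) for value in grid] for row in rows]


def partial_dependence(predict, rows, feature, grid):
    """PDP: the pointwise average of the ICE curves."""
    curves = ice_curves(predict, rows, feature, grid)
    return [sum(column) / len(column) for column in zip(*curves)]


def toy_risk_score(row):
    """Hypothetical stand-in for a trained model: a points-based risk score."""
    return row["age"] + (20 if row["smoker"] else 0)


# Hypothetical individuals and a grid of ages to sweep over.
rows = [{"age": 40, "smoker": True}, {"age": 60, "smoker": False}]
grid = [30, 50, 70]

ice = ice_curves(toy_risk_score, rows, "age", grid)
pdp = partial_dependence(toy_risk_score, rows, "age", grid)

print("ICE:", ice)
print("PDP:", pdp)
```

If the curves for a sensitive variable are flat, the model's output barely depends on it; steep or diverging curves indicate where its influence deserves a closer look.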

Hence, it is incumbent on all parts of the value chain to pay special attention to the responsible use of AI; especially the design, development, governance and also functional teams, as they write the tool's user manual.
