Cyber Security

Cryptography, a tool for protecting data shared on the network

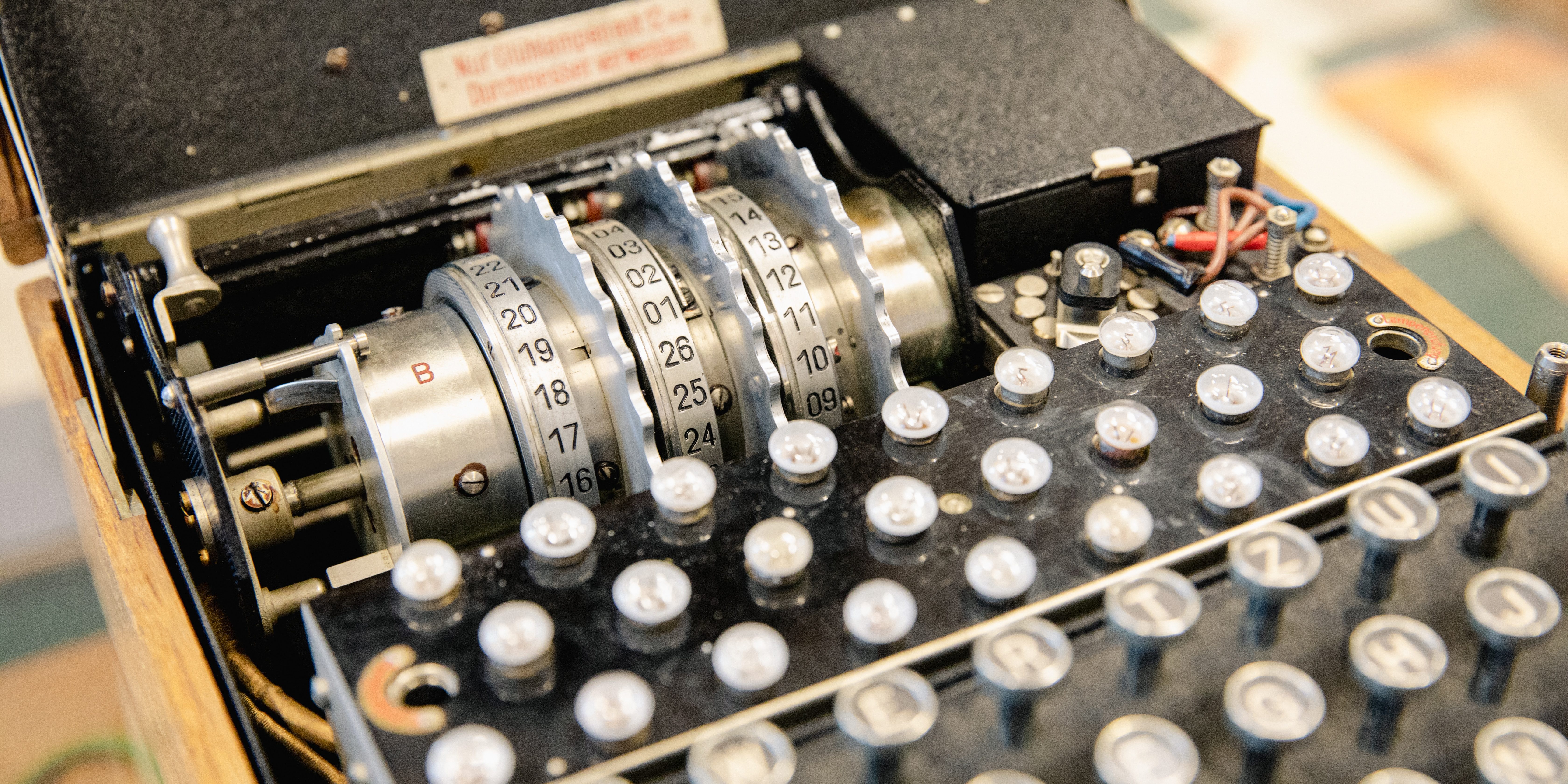

Cyber Security is nowadays an essential element in companies. However, new ways of undermining it are emerging every day. Many have asked themselves: how can companies securely store their data, and how can credit card information be protected when making online purchases? The answer to these questions is cryptography.

Cryptography becomes vital when there are so many computer security risks, such as spyware or social engineering. That is why the vast majority of websites use it to protect the privacy of their users. In fact, according to projections by Grand View Research (2019), the global encryption software market is expected to grow at an annual rate of 16.8% from 2019 to 2025.

How can cryptography be defined? It is the art of converting data into a format that cannot be read by anyone who lacks the means to decode it. In other words, messages containing confidential information are encrypted, which prevents unscrupulous people from accessing data that could otherwise be breached. The encrypted data can only be read again with a key (Onwutalobi, 2011).

To encrypt a message, the sender transforms its content using a systematic method known as an algorithm. The original message, called plaintext, can be encoded by rearranging its letters into an unintelligible order or by replacing each letter with another. The resulting encoded message is known as ciphertext.

Why should cryptographic systems be used?

1. They can be used on different technological devices

Both an iPhone and an Android device, for example, can have their own encryption methods depending on the needs of the companies. Likewise, it is possible to encrypt content on an SD card or USB memory stick. There are several possibilities; you just have to find the most appropriate method.

2. Working remotely is more secure

Organizations today often need to work securely when their employees communicate remotely.
A 2018 North American report published by Shred-It revealed that vulnerability risks are higher when working in this way. Collaborating through encrypted channels therefore helps prevent information from falling into the wrong hands.

3. Cryptography protects privacy

It is frightening to think how exposed company data can be. According to an article by Hern (2019) in The Guardian, more than 770 million email addresses and passwords were exposed in 2018. All of this resulted from vulnerabilities in access to individual data. Encrypting data can therefore prevent sensitive details from being unknowingly published on the Internet.

4. Provides a competitive advantage

Providing cryptographic protection for a company's various sources of information and data gives customers greater peace of mind, and organizations are aware of how essential this is. A study by the Ponemon Institute (2019) revealed that 45% of businesses have an overall cryptography strategy, while another 42% have limited encryption strategies that apply only to certain applications or types of data.

Types of encryption

The two most popular ways to apply cryptography are shared secret key encryption and public key encryption, also known as symmetric and asymmetric encryption, respectively (Vasquez, 2015).

Shared-key encryption

Also known as symmetric encryption, it uses the same key for both encryption and decryption, so any user who has the key can decrypt the message. This method is fast and suitable for large volumes of data. It is often used in cipher block chaining (CBC) mode, which uses a key and an initialization vector to encrypt a series of data blocks, each linked to the one before it. Anyone who wishes to decrypt the message must have both the key and the initialization vector. Because the same key must be held by both parties, exchanging it securely is the method's main vulnerability, which is why it is often combined with public key encryption.
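To make the idea of chaining with a key and an initialization vector more concrete, here is a minimal sketch of cipher block chaining in Python. It is a toy: the "block cipher" is just an XOR with the key, standing in for a real algorithm such as AES, so it offers no real security. The function names, the 8-byte block size and the padding scheme are illustrative assumptions. What it does show is that each plaintext block is mixed with the previous ciphertext block (the first one with the IV) before being encrypted, and that decryption needs both the same key and the same IV.

```python
import secrets

BLOCK_SIZE = 8  # toy block size in bytes (assumption; AES uses 16-byte blocks)


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def toy_block_encrypt(block: bytes, key: bytes) -> bytes:
    # Stand-in for a real block cipher such as AES. NOT secure.
    return xor_bytes(block, key)


def toy_block_decrypt(block: bytes, key: bytes) -> bytes:
    # XOR is its own inverse, so decryption is the same operation.
    return xor_bytes(block, key)


def pad(data: bytes) -> bytes:
    # PKCS#7-style padding: append n bytes of value n to fill the last block.
    n = BLOCK_SIZE - len(data) % BLOCK_SIZE
    return data + bytes([n]) * n


def unpad(data: bytes) -> bytes:
    return data[:-data[-1]]


def cbc_encrypt(plaintext: bytes, key: bytes, iv: bytes) -> bytes:
    data = pad(plaintext)
    previous = iv  # the first block is chained with the IV
    out = bytearray()
    for i in range(0, len(data), BLOCK_SIZE):
        mixed = xor_bytes(data[i:i + BLOCK_SIZE], previous)
        previous = toy_block_encrypt(mixed, key)
        out += previous
    return bytes(out)


def cbc_decrypt(ciphertext: bytes, key: bytes, iv: bytes) -> bytes:
    previous = iv
    out = bytearray()
    for i in range(0, len(ciphertext), BLOCK_SIZE):
        block = ciphertext[i:i + BLOCK_SIZE]
        out += xor_bytes(toy_block_decrypt(block, key), previous)
        previous = block  # the next block is unchained with this ciphertext block
    return unpad(bytes(out))


key = secrets.token_bytes(BLOCK_SIZE)  # the shared secret key
iv = secrets.token_bytes(BLOCK_SIZE)   # initialization vector, sent with the ciphertext
message = b"Confidential company data"
ciphertext = cbc_encrypt(message, key, iv)
assert cbc_decrypt(ciphertext, key, iv) == message
```

Changing the IV changes the whole ciphertext even when the key and message stay the same. A real system would rely on a vetted cryptographic library rather than hand-written code like this.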
Public key encryption

Also called asymmetric encryption, it uses two different keys that are mathematically linked. One key, the public key, is used to encrypt, and the other, the private key, is used to decrypt. The public key can be shared freely with anyone who wants to send an encrypted message, while the private key is kept secret by its owner, who is the only one able to decrypt those messages. Its computational cost is high.

Finally, cryptography gives companies the opportunity to strengthen the security of their customers. This will consolidate their reputation and reinforce their commitment to innovation with the latest technologies.

Featured photo: Christian Lendl / Unsplash
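The public and private key pair described in the public key encryption section can be illustrated with textbook RSA, used here purely as one example of a public-key algorithm. This sketch uses deliberately tiny primes and no padding, so it is not secure; real keys are thousands of bits long and are generated by a cryptographic library. The function names are illustrative, and `pow(e, -1, phi)` requires Python 3.8 or later. The public key (n, e) encrypts, and only the matching private key (n, d) decrypts.

```python
from math import gcd


def make_keypair(p: int, q: int, e: int = 17):
    """Textbook RSA key generation from two primes (toy sizes only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime with (p - 1) * (q - 1)")
    d = pow(e, -1, phi)  # private exponent: modular inverse of e
    return (n, e), (n, d)  # (public key, private key)


def encrypt(m: int, public_key) -> int:
    n, e = public_key
    return pow(m, e, n)  # anyone holding the public key can do this


def decrypt(c: int, private_key) -> int:
    n, d = private_key
    return pow(c, d, n)  # only the private key holder can undo it


public, private = make_keypair(61, 53)
c = encrypt(65, public)       # the "message" is a number smaller than n
assert decrypt(c, private) == 65
```

Because operations like these are far more expensive than symmetric encryption, in practice public key cryptography is typically used to exchange or agree on a symmetric key, which then encrypts the bulk of the data.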

May 31, 2023


Cloud

Edge Computing Made Simple

Edge computing is one of the technologies that will define and revolutionise the way in which humans and devices connect to the internet. It will affect industries and sectors such as connected cars, video games, Industry 4.0, Artificial Intelligence and Machine Learning, and it will make other technologies such as Cloud and the Internet of Things even better than they are now. As you are likely to hear the term quite often in the coming years, let's take a closer look at what Edge Computing is, explained in simple terms.

To understand Edge Computing, it is first necessary to understand how technologies such as Cloud Computing work. What happens every time our PC, smartphone or any other device connects to the Internet to store or retrieve information from a remote data centre?

What Is Cloud Computing

The cloud is so present in our lives that you probably use it without even realising it. Every time you upload a file to a service like Dropbox, check your account in your bank's app, access your email or use your favourite social network, you are using the cloud.

To simplify it a lot, using the cloud consists of interacting with data that is stored on a remote server and that we access through the internet. When we do this, the procedure is more or less as follows: your device connects to the Internet, either through a fixed-line or a wireless network. From there, your internet provider, usually an operator such as Telefónica, carries the data from your device to the destination server, using an IP address or a web address (e.g., dropbox.com or gmail.com) to identify the site to which the information should be sent.

The Journey of The Data Until It Is Processed in The Cloud

The server in question processes your data (processing is a key term here, as we will see), operates on the information and returns a response.
For example, when you open Gmail on your device, you ask the Google server to show you the current status of your inbox; it processes your request, checks whether you have new mail, and returns the response you see on your screen. As the data is in the cloud, it doesn't matter which device you use to send it.

[Figure: the journey of data from Devices to the Operator, the Internet and Cloud Services]

This is, in a very simplified way, the journey that data makes from devices to servers in the cloud, and it is the same for every device that connects to the server. Although it seems simple, this "journey" of information is a marvel of technology that requires a whole series of protocols and elements arranged in the right place.

However, it also has some disadvantages. Let's say, for example, that you live in Spain and the cloud server in question is in San Francisco. Each time you connect, your data has to make the outward journey through the network of your ISP and other operators, wait for data processing at the destination processor(s), and then make the return journey. It is not usual for servers to be so far away, and for many of the things we use the cloud for today this is perfectly acceptable: the times are so short (we are talking about milliseconds) that we don't even notice them.

The problem comes in certain use cases where every millisecond counts and we need latency, the response time of the server, to be as low as possible. Some of these use cases have to do with the Internet of Things.

Why IoT Matters

The Internet of Things, or IoT, is the system made up of thousands and thousands of devices, machines and objects interconnected to each other and to the Internet.
With such a large number, it is logical to assume that both the volume of data generated by each of them and the number of connections to the servers will increase exponentially.

[Figure: Movistar Home]

Some of the objects that are already regularly connected to the Internet of Things include light bulbs, thermostats, industrial sensors that control production in factories, smart plugs, smart speakers with voice assistants such as Movistar Home, Alexa and Google Home, and even cars such as those from Tesla.

Every time one of these devices connects to the cloud, it makes a journey similar to the one explained above. For the moment, and in most cases, that is enough, but in some cases that journey is too long compared with the speed and immediacy we could get if the cloud were simply closer to us. In other words, there is still a lot of room for improvement, and the possibilities opened up by bringing the cloud closer to where the data is generated are enormous. This is precisely where Edge Computing comes into play.

The Advantages of Edge Computing

The best definition of Edge Computing is the following: it is about bringing processing power as close as possible to where the data is being generated. In other words, it is about bringing the cloud as close as possible to the user, to the very edge of the network.

[Figure: Devices, Operator, Internet, Cloud Services]

What matters when we talk about the edge of the network is that we bring the ability to process and store data closer to the users. Capabilities that were previously "far away" on a server in the cloud can be moved much closer to the devices. It's a paradigm shift that changes everything: the functions are similar, but because processing happens much closer, speed shoots up, latency is reduced and the possibilities multiply.
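To get a feel for why distance matters, here is a back-of-the-envelope sketch of the minimum round-trip time imposed by distance alone. It assumes light travels through optical fibre at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), and the distances are illustrative assumptions: about 9,300 km for a direct route from Spain to San Francisco and 50 km to a hypothetical nearby edge node. It ignores routing detours, queuing and server processing time, so real latencies are higher.

```python
FIBRE_SPEED_KM_PER_MS = 200.0  # ~200,000 km/s in optical fibre (approximation)


def round_trip_propagation_ms(distance_km: float) -> float:
    """Minimum time for a signal to reach a server and come back, ignoring processing."""
    return 2 * distance_km / FIBRE_SPEED_KM_PER_MS


cloud_ms = round_trip_propagation_ms(9_300)  # Spain -> San Francisco (assumed distance)
edge_ms = round_trip_propagation_ms(50)      # hypothetical edge node 50 km away
print(f"cloud: {cloud_ms:.1f} ms, edge: {edge_ms:.1f} ms")
# prints "cloud: 93.0 ms, edge: 0.5 ms"
```

No amount of server power can remove the first figure, because it comes from the physical distance; moving the processing closer is the only way to shrink it.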
Edge Computing therefore offers the best of both worlds: the quality, security and low latency of processing on your own PC, along with the flexibility, availability, scalability and efficiency offered by the Cloud.

Edge Computing and next generation networks (5G and Optical fibre)

This is where the second part of the equation comes into play when it comes to understanding Edge Computing: 5G and optical fibre. Among their many advantages, 5G and optical fibre offer very large reductions in latency. Latency is the time it takes for information to travel to the server and back to you, that is, the sum of the outward and return journeys explained above. 4G currently offers an average latency of 50 milliseconds; with 5G and fibre, that figure can go down to 1 millisecond. In other words, not only do we bring the server as close as possible to where it is needed, at the edge, but we also reduce the time it takes for information to travel to and from the server.

To better understand the implications of this, let's consider three different scenarios: a connected car, a machine learning algorithm in a factory and a video game system in the cloud.

Edge Computing and Connected Cars

The connected car of the future will include a series of cameras and sensors that capture information from the environment in real time. This information can be used in a variety of ways. It could be connected to a smart city's traffic network, for example, to anticipate a red light. It can also identify vehicles or hazardous situations in real time, or know the relative position of other cars around it at all times.

This approach will transform the way we travel by car and improve road safety, but the road to it is not without its pitfalls. One of the most important is that the information collected by the different cameras and sensors adds up to a considerable volume. It is estimated that a connected car will generate about 300 TB of data per year (about 25 GB per hour).
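A quick calculation shows why shipping all of that data to a distant server is a problem. This sketch converts the 25 GB per hour figure into the average upload rate it would require, assuming decimal gigabytes and, as a simplification, that every byte is uploaded evenly over the hour with no compression or filtering. It also scales the result to a hypothetical road with 50 such cars, the number the article uses for its autonomous-driving scenario. The function name is illustrative.

```python
def sustained_bitrate_mbps(gigabytes_per_hour: float) -> float:
    """Average upload rate (Mbit/s) needed to move a given data volume every hour."""
    bits_per_hour = gigabytes_per_hour * 1e9 * 8  # decimal GB -> bits
    return bits_per_hour / 3600 / 1e6             # bits per hour -> Mbit/s


per_car = sustained_bitrate_mbps(25)  # about 25 GB per hour per connected car
road_of_50 = 50 * per_car             # a hypothetical road with 50 such cars
print(f"{per_car:.1f} Mbit/s per car, {road_of_50 / 1000:.2f} Gbit/s for 50 cars")
# prints "55.6 Mbit/s per car, 2.78 Gbit/s for 50 cars"
```

Processing the data at the edge means only results or exceptions need to travel further into the network, rather than this continuous stream.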
All of the data generated by the car's sensors needs to be processed, but moving that amount of data quickly between the servers and the car is unmanageable. We need processing to happen much closer to where the data is generated: at the edge of the network.

[Figure: A connected car receiving information from nearby sensors. (Telefónica)]

Let's imagine, for example, a road of the future with 50 connected cars that are also fully autonomous. That means sensors that measure the speed of surrounding cars, cameras that identify traffic signs or obstacles on the road, and a whole host of other data. The delay in communication between the cars and the server that controls that information has to be minimal. It is a scenario where we simply cannot afford for the information to travel to a remote server in the cloud, be processed, and come back to us: in the meantime, an accident, a sudden change in traffic conditions (an animal crossing the road, for example) or any other unforeseen event may have occurred.

We need the processor that works with the information produced by the car's sensors to be as close as possible to the car. With the cloud, the data would have to go to the antenna (the operator), travel from there over the Internet to the server, and then come back again, adding latency. With Edge Computing, since part of the server's capabilities sit at the edge of the network, everything happens right there.

Edge Computing and Machine Learning

Thanks to Machine Learning models, many factories and industrial facilities are implementing quality control with Artificial Intelligence and computer vision. This often consists of a series of machines and sensors that evaluate each item produced on an assembly line, for example, and determine whether it is well made or has a defect. Machine learning algorithms often work by "training" the Artificial Intelligence with thousands and thousands of images.
Continuing with our example, for each image of a product, the algorithm is told whether it shows an item that has been manufactured correctly or not. Through repetition and gigantic databases, the Artificial Intelligence eventually learns which features a flawless item has and, if a particular item lacks them, determines that it has not passed quality control.

Once the model has been generated, it is usually uploaded to a server in the cloud, against which the different sensors on the assembly line check the information they collect. The scheme we mentioned earlier is repeated: the sensors collect the information, which has to travel to the server, be processed and checked against the machine learning model, and then return to the factory with the result.

Edge computing significantly improves this process. Instead of having to go to the Cloud server in each case, we can deploy a copy (virtualised or scaled down) of the machine learning model at the edge of the network, next to each sensor; in other words, practically in the same place where the data is generated. The sensors then do not have to send the information to the distant Cloud for each item: they check it directly against the model at the edge and only send a request to the server if it does not match because the product is faulty.

In this way, performance is improved without the need to increase the complexity of the sensors, and the devices can even be simplified, since they can rely on the processing capabilities deployed at the edge of the network for some of their functions. Manufacturing faults are detected much faster, and the traffic and bandwidth required are greatly reduced.

Edge Computing and Videogames

Ever since Nintendo's first Game Boy took the games industry by storm back in 1989, one of the biggest challenges for the video game industry has been to offer ways to play games on the go.
Companies such as Xbox, Google, Nvidia and PlayStation have come a long way, offering cloud-based gaming solutions that allow next-generation games to run on any screen.

[Figure: Stadia makes it possible to play video games anywhere thanks to fibre connectivity and the power of the Cloud.]

How do they do it? Again, by using the power of the Cloud. Instead of processing the game's graphics on the processor of a PC or games console, this is done on big, powerful servers in the cloud that simply stream the resulting image to the user's device. Every time the user presses a button (for example, to make Super Mario jump), the information from that press travels to the server, is processed, and the result comes back. The user receives a continuous flow of images, as if it were a video streamed from a service like Netflix. As a result, all you need to play is a screen.

For the time between pressing the jump button and seeing Super Mario jump on your screen to feel instantaneous, latency must be extremely low. Otherwise, there would be an uncomfortable delay (also known as lag) that would ruin the whole experience. Edge computing allows us to bring the power of the Cloud (the servers that process game graphics) to the very edge of the network, greatly reducing the lag that occurs every time the user presses a button and delivering an experience that is virtually identical to having the console right next to you.

Edge Computing: Why Now and Why This Will Change The Future Of Connectivity

Although we have explained the whole process in a very simplified way, the reality is that Edge Computing requires a number of the latest technologies and protocols to work properly. You may have wondered why all this has not been done until now, i.e., why the Cloud was not designed from the outset to be as close as possible to where the data is generated. The answer is that it was impossible.
For Edge Computing to work properly we need, among other things, the latest generation of connectivity based on optical fibre and 5G. The better the network deployment, the better the Edge Computing. Without the speed and low latency offered by the combination of both, all efforts to bring the power of the Cloud to the edge, where data is generated, would be wasted: the network would simply not be ready.

https://www.youtube.com/watch?v=2h7Ctt1TU6A

Thanks to their extensive fibre deployments (in countries like Spain there is more fibre coverage than in Germany, the UK, France and Italy combined), companies like Telefónica are especially well prepared to deploy Edge Computing use cases.

Edge Computing will change the world in the coming years and take the Cloud services we currently enjoy to the next level. Only time and the vast potential of the internet will tell what new technologies and applications await us beyond Edge Computing.

Photo: Alina Grubnyak

April 14, 2022
