Edge Computing and the future of Distributed AI

Our experts in infrastructure and digital technologies recently shared a comprehensive vision on Edge Computing and its role in transforming AI during the presentation 'Beyond the cloud: edge computing and the future of distributed AI'. Their knowledge and practical experience deploying these technologies enable us to explore this paradigm shift in computing.

The speakers highlighted that Edge Computing and distributed AI are redefining not only how businesses and end-users access advanced AI capabilities but also how this technology affects privacy, equity, and access to innovation. This shift promises increased efficiency and data control, yet also raises technical, ethical, and social considerations to address.

AI evolution has moved data processing from the cloud toward decentralized infrastructures.

■ Edge Computing enables data processing closer to the point of generation instead of relying on a centralized data center, using distributed computing devices and nodes at the network's edge, known as 'edge nodes,' which may include routers, gateways, local servers, or IoT devices. This reduces latency and enhances system efficiency. Edge Computing Made Simple →

Edge Computing infrastructure as a fundamental pillar

Deploying distributed nodes within a telecommunications infrastructure brings computational capability closer to users. In Spain, the development of 5G and fiber optic networks, with coverage exceeding 92% of the population, has been essential in ensuring the required bandwidth and latency for these solutions. Initiatives like the European project IPCEI-CIS facilitate the deployment of these distributed nodes and their interoperability at the European level.

In addition to connectivity, decentralized data center development provides the necessary resources for local storage, computation, and execution of AI models. This reduces dependency on large centralized data centers and improves businesses' operational autonomy.

Artificial Intelligence at the Edge (Edge AI): real-time inference

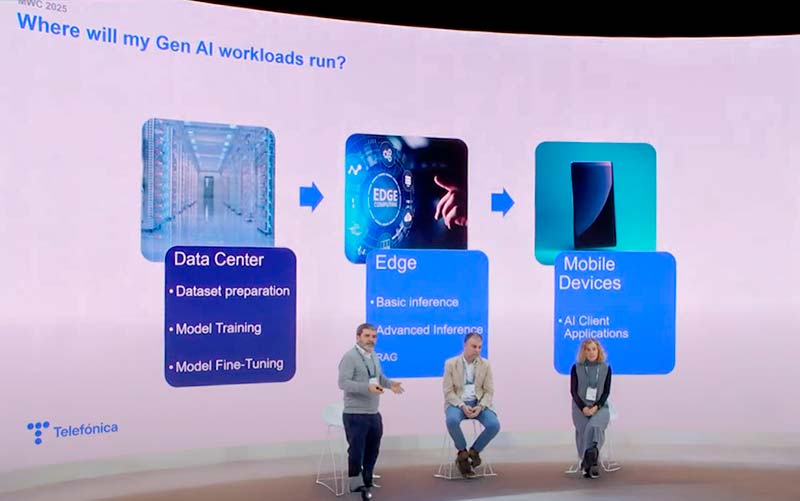

AI has evolved through several stages, from traditional machine learning to deep learning and today's Generative AI. At this juncture, it is essential to distinguish between training workloads, which require specialized infrastructure in large data centers, and inference tasks, which can run efficiently on Edge AI nodes.

During the presentation 'Beyond the Cloud: Edge Computing and the Future of Distributed AI' at MWC 2025.

With Edge Computing, executing AI models on distributed nodes allows critical applications to process data in real-time without sending it to the cloud.

A key aspect of this approach is efficient resource management through the Operator Platform, an orchestration system enabling three core functionalities:

- Smart Federation enables networks from different operators or segments within a single operator to federate and interconnect. Nodes can thus be shared and used by different owners or operators, expanding the Edge network's reach and capabilities beyond traditional boundaries.

- Smart Allocation facilitates deploying applications based on specific criteria such as latency, computational resources, or data sovereignty requirements. Applications can be dynamically distributed according to customer demand or statically deployed depending on use-case needs, optimizing available resource usage.

- Smart Discovery allows customers to request and connect to the most suitable Edge node based on their specific requirements. For example, a mobile client can automatically connect to the nearest Edge node meeting their service requirements, ensuring the best experience.

These capabilities, centrally managed through the Operator Platform, turn a network of independent nodes into an intelligent, cohesive system that adapts dynamically to user and application demands.
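To make the selection logic concrete, the following is a minimal sketch of how a Smart Discovery style lookup could pick a node using the criteria named above (latency, computational resources, data sovereignty). It is not the Operator Platform API: the `EdgeNode` and `Requirements` types, the `discover_node` function, and the node names and figures are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class EdgeNode:
    """Hypothetical description of an edge node as seen by one client."""
    name: str
    country: str         # jurisdiction where the node runs
    latency_ms: float    # round-trip time measured from the client
    free_cpu_cores: int  # spare compute currently available


@dataclass(frozen=True)
class Requirements:
    """Hypothetical service requirements sent by the client."""
    max_latency_ms: float
    min_cpu_cores: int
    allowed_countries: FrozenSet[str]  # data sovereignty constraint


def discover_node(nodes: List[EdgeNode], req: Requirements) -> Optional[EdgeNode]:
    """Return the lowest-latency node that meets every requirement, or None."""
    candidates = [
        node for node in nodes
        if node.latency_ms <= req.max_latency_ms
        and node.free_cpu_cores >= req.min_cpu_cores
        and node.country in req.allowed_countries
    ]
    return min(candidates, key=lambda node: node.latency_ms, default=None)


# Illustrative inventory; the values are invented for the example.
NODES = [
    EdgeNode("madrid-1", "ES", 8.0, 2),
    EdgeNode("madrid-2", "ES", 12.0, 16),
    EdgeNode("paris-1", "FR", 25.0, 32),
    EdgeNode("virginia-1", "US", 95.0, 64),
]

if __name__ == "__main__":
    req = Requirements(max_latency_ms=30.0, min_cpu_cores=8,
                       allowed_countries=frozenset({"ES", "FR"}))
    print(discover_node(NODES, req))
```

In this sketch the closest node is skipped because it lacks spare capacity, and the node outside the allowed jurisdictions is never considered, however much compute it offers. A real orchestrator would presumably weigh further factors such as cost or current load.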

■ The concept of Cloud Continuum complements these functionalities by seamlessly integrating cloud and Edge nodes, so developers can deploy applications without concern for the physical location of resources.

Data sovereignty and security in distributed AI

A driving factor behind decentralized AI's rise is the necessity to guarantee data sovereignty and protect user privacy. Companies and governments seek solutions to ensure sensitive information isn't processed outside their jurisdiction, mitigating legal and regulatory risks.

The concept of Sovereign AI is particularly relevant in Europe, where data storage location and operating jurisdiction matter significantly. Deploying AI at the edge enables organizations to control data flow, avoiding exposure to external infrastructures. This ensures data remains within the desired jurisdiction and complies with regulations like GDPR.

Applications of Edge AI

The impact of distributed AI is materializing in three major application areas:

- Computer Vision: In industrial environments, computer vision is used for quality control and process optimization in factories, leveraging Edge Computing capabilities to process images in real-time. Digital twins and industrial process monitoring represent prominent use cases requiring local processing and low latency.

Local computer vision for inventory monitoring in retail, protecting customer privacy, is an Edge AI use case. Photo: Sony.

- Conversational Agents: Implementation of chatbots and virtual assistants based on Retrieval-Augmented Generation (RAG) provides internal technical support within companies by leveraging existing corporate information. These agents access local databases and internal documentation to deliver accurate, contextual responses while preserving sensitive information privacy.

- Big Data and Deep Learning: Advanced applications such as autonomous driving and drone control require real-time data processing and instantaneous decision-making. Edge Computing processes large data volumes near the source of data, reducing latency and enhancing operational safety.
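As an illustration of the Retrieval-Augmented Generation pattern mentioned for conversational agents, the sketch below shows only the retrieval and prompt-assembly steps, run entirely on local data. It assumes a toy word-overlap score in place of the embedding search a production system would typically use, and it stops before any model call. The `DOCS` contents and the function names are hypothetical.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: Dict[str, str], top_k: int = 2) -> List[str]:
    """Rank documents by shared words with the question; drop zero-score ones."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(body)), doc_id)
        for doc_id, body in documents.items()
    ]
    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in ranked if score > 0][:top_k]


def build_prompt(question: str, documents: Dict[str, str], top_k: int = 2) -> str:
    """Assemble the text a locally hosted model would receive."""
    doc_ids = retrieve(question, documents, top_k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Invented internal documentation, kept on the local node.
DOCS = {
    "vpn-guide": "To reset the VPN token, open the IT portal and choose reset token.",
    "printer-faq": "The office printer needs the driver from the IT portal.",
    "holiday-policy": "Holiday requests are approved by your line manager.",
}

if __name__ == "__main__":
    print(build_prompt("How do I reset my VPN token?", DOCS))
```

Because both the documents and the retrieval step stay on the local node, only the assembled prompt reaches the model, which can itself run at the edge.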

Moreover, solutions are being developed that let companies deploy generative AI agents with one-click integration, connecting to corporate data sources like Salesforce or SharePoint while maintaining data privacy and sovereignty.

Technical challenges of Edge AI

While Edge Computing offers significant advantages for distributed AI, implementing it presents technical challenges that require effective solutions.

- Resource limitations are common in Edge devices, which have less computing power, memory, and energy than cloud servers. Developing more efficient and lightweight AI models is crucial for Edge deployment without compromising accuracy and performance. Techniques like quantization, pruning, and knowledge distillation can reduce AI model size and complexity for Edge deployment.

- Model consistency is essential to maintain coherence across Edge devices. It involves version management, update synchronization, and conflict resolution when model versions diverge. Techniques such as federated learning and transfer learning can help maintain model consistency and uniform performance across the Edge network.

- Security and privacy are critical, as data processing and storage occur on distributed devices vulnerable to attacks. Robust security measures, including data encryption, device authentication, and network segmentation, must protect data confidentiality and integrity. Privacy-preserving techniques, such as federated learning and differential privacy, are also essential to protect user privacy and comply with data protection regulations.

- Managing and orchestrating AI applications in distributed Edge environments can be complex. Centralized tools and platforms like the Operator Platform are needed to monitor Edge devices, deploy and update AI models, and optimize performance. Workload orchestration for AI on Edge must consider resources, latency, and security requirements.

- Interoperability among Edge platforms and devices is essential to ensure AI application portability and flexibility. Adopting open standards and common communication protocols facilitates component integration and collaboration among providers and developers.
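Two of the techniques named in the list above can be sketched in a few lines: quantization, which shrinks a model for constrained devices, and the weighted averaging step at the core of federated learning, which combines locally trained weights without moving raw data. This is a simplified illustration in plain Python, assuming symmetric per-tensor int8 quantization and a flat list of weights per client. Real deployments would rely on a framework's tooling, and the function names here are hypothetical.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * size for w, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25]
    quantized, scale = quantize_int8(weights)
    print(quantized, dequantize(quantized, scale))

    # Two edge nodes trained locally on 1 and 3 samples respectively.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

Each quantized weight fits in one byte instead of the four used by a 32-bit float, at the cost of a rounding error of at most half the scale per weight. In the averaging step, only model weights leave each node, not the underlying data.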

Future perspectives and social responsibility

The future of Edge Computing and distributed AI depends on technological advancements and addressing social and ethical challenges. AI-driven automation at the Edge can impact employment, necessitating reskilling and training programs for workers.

We must ensure this technology is accessible to everyone, regardless of geographical location or socioeconomic level, bridging the digital divide.

Transparency and accountability in automated decision-making will be crucial. Auditing and tracking mechanisms for decisions made by AI models at the Edge must evolve with technology, establishing clear responsibilities and ensuring progress benefits society as a whole.

Conclusion

Edge Computing and AI are reshaping how businesses implement and utilize technology. Supported by robust telecommunications infrastructures and intelligent distributed resource management, this evolution promises increased efficiency and technical capabilities while ensuring data sovereignty and user privacy.

The success of this transition depends on overcoming technical challenges and addressing ethical and social implications. Collaboration among developers, businesses, and regulators is essential to ensure technology evolves responsibly, protecting privacy, promoting equity, and facilitating responsible innovation.

Edge Computing and distributed AI aren't merely technological trends; they signify a fundamental shift in our relationship with digital technology, bringing AI closer to data generation and decision-making points, promising to transform industries, services, and communities with accessible, secure, and privacy-respecting AI capabilities.

■ You can access the presentation 'Beyond the cloud: edge computing and the future of distributed AI' here →
