How to assess AI maturity to maximize investment and ensure responsible adoption

In 2024, a global logistics company faced a dilemma. After investing in AI for predictive maintenance, automated route planning and customer analytics, its executives still could not answer a key question: “Are we ready to trust AI for critical decision-making?”

Although its AI models worked in most cases, it was unclear whether the organization had the infrastructure, governance, talent and capabilities in ethics, privacy, sustainability and cybersecurity needed to meet new regulations and withstand potential cyberattacks.

What executives were really questioning was not the technology itself but their company’s AI maturity. Without clarity on this point, strategic decisions become uncertain in an environment marked by volatility, regulation, competition and connectivity.

■ AI maturity cannot be validated solely through documents or statements: it requires tangible demonstrations that practices, controls and governance mechanisms are operational and effective.

This uncertainty is common. Companies increasingly recognize that assessing their level of AI maturity is a strategic necessity rather than a luxury. That assessment determines whether AI adoption remains limited to pilot projects or becomes a resilient, scalable and reliable part of the organization’s DNA.

Why is it necessary to assess AI maturity?

A rigorous assessment of AI maturity gives organizations a roadmap for investment by highlighting the gaps between their current capabilities and their strategic ambitions.

Without it, AI adoption risks becoming an expensive collection of disconnected experiments with unclear returns and, in the worst cases, a liability in terms of security, privacy, ethics, sustainability and compliance.

Several frameworks from organizations such as NIST, OWASP and MITRE can support AI maturity assessment, helping companies evaluate, implement, guide and improve AI adoption from a practical perspective.

■ Objective maturity emerges when assessments are tested in real-world contexts, through performance, resilience under pressure and adaptability, rather than in theoretical frameworks disconnected from practice.

Frameworks and models for AI maturity assessment

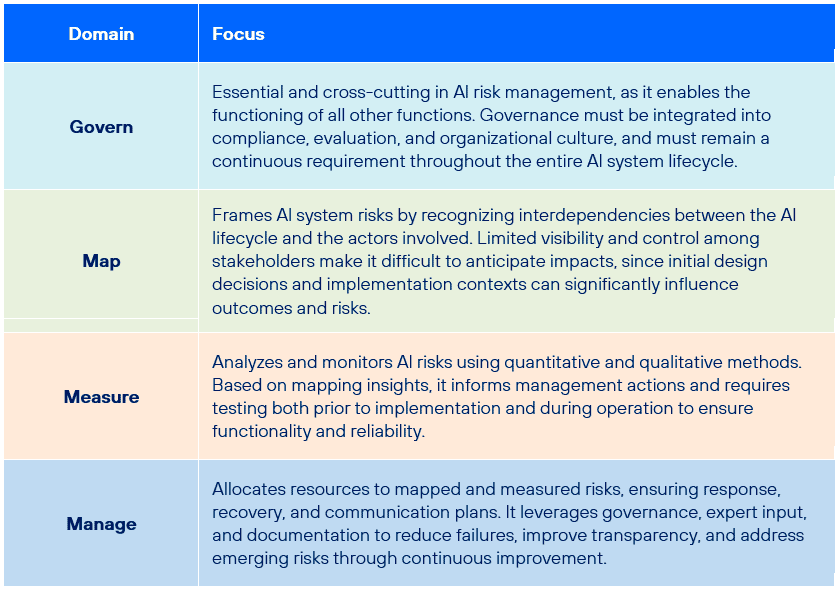

NIST AI Risk Management Framework

Although it is a risk management framework rather than a maturity model, the NIST AI Risk Management Framework can be adapted to assess AI maturity from a risk-based perspective across its four core functions: Govern, Map, Measure and Manage.

By framing AI maturity in terms of governance, reliability and socio-technical integration, this framework enables organizations to critically assess not only whether AI systems perform as expected, but also whether they are trustworthy, explainable, secure and aligned with ethical and regulatory expectations.

This approach strengthens decision-making by linking maturity assessment to risk considerations, helping organizations identify gaps in resilience, accountability and transparency throughout the AI lifecycle.

From a critical and analytical perspective, this approach transforms maturity assessment from a static compliance exercise into a dynamic process of continuous improvement, embedding risk awareness as a cornerstone of responsible AI adoption.
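As a minimal sketch of how the four functions could anchor a maturity self-assessment, the Python below records a current and a target level for each function and reports the gaps, largest first. The NIST AI RMF does not define numeric maturity levels, so the 0 to 4 scale, the `FunctionScore` type, the `gap_report` helper and the example values are all hypothetical. The sketch also assumes a claimed level only counts when it is backed by operational evidence, reflecting the point that maturity must be demonstrated rather than merely documented.

```python
from dataclasses import dataclass

# The four core functions of the NIST AI RMF.
NIST_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")


@dataclass
class FunctionScore:
    function: str
    current: int         # self-assessed level on a hypothetical 0-4 scale
    target: int          # level the strategy requires (same assumed scale)
    evidence: list[str]  # artefacts showing the practice operates (tests, logs, reviews)

    def effective_level(self) -> int:
        # Assumption: a claimed level without evidence counts as 0,
        # because maturity must be demonstrated, not just declared.
        return self.current if self.evidence else 0

    def gap(self) -> int:
        return max(self.target - self.effective_level(), 0)


def gap_report(scores: list[FunctionScore]) -> list[tuple[str, int]]:
    """Return (function, gap) pairs, largest gap first (ties keep input order)."""
    unknown = {s.function for s in scores} - set(NIST_FUNCTIONS)
    if unknown:
        raise ValueError(f"Not an AI RMF function: {unknown}")
    return sorted(((s.function, s.gap()) for s in scores),
                  key=lambda pair: pair[1], reverse=True)


# Illustrative values only.
scores = [
    FunctionScore("Govern", current=3, target=4, evidence=["approved AI policy"]),
    FunctionScore("Map", current=2, target=3, evidence=["use-case inventory"]),
    FunctionScore("Measure", current=2, target=4, evidence=[]),
    FunctionScore("Manage", current=1, target=3, evidence=["incident runbook"]),
]
for function, gap in gap_report(scores):
    print(f"{function}: gap {gap}")
```

With these inputs, Measure tops the list: its self-assessed level of 2 is discounted to 0 because no evidence supports it, which is exactly the kind of gap a document-only review would miss.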

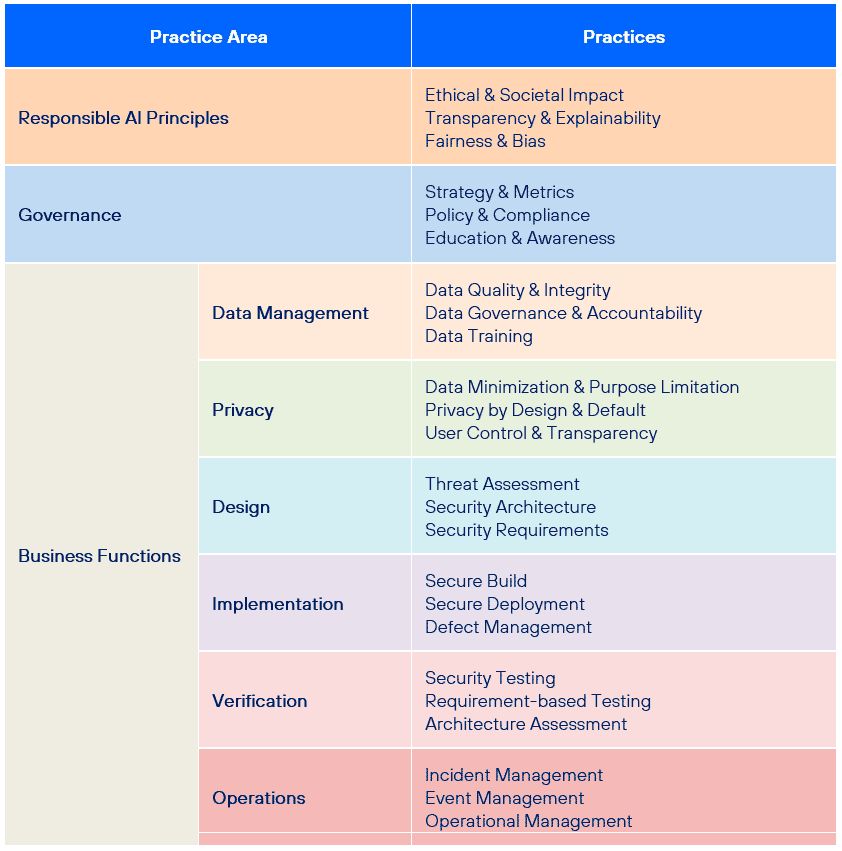

OWASP AI Maturity Assessment (AIMA)

The OWASP AI Maturity Assessment (AIMA) considers domains such as Accountability, Governance, Data Management, Privacy, Implementation, Verification and Operation, while evaluating whether AI systems align with strategic objectives, ethical principles and operational needs. It is based on the OWASP Software Assurance Maturity Model (SAMM).

This model defines three maturity levels:

- Level 1: comprehensive AI strategy with metrics.

- Level 2: continuous improvement.

- Level 3: optimization.
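Because AIMA builds on SAMM, one natural way to record results is per domain and per level. The sketch below assumes a cumulative rule, in which a domain only reaches a level when that level and every level below it are satisfied, and then lists the domains that fall short of a target. The rule, the yes/no answers and the `achieved_level` and `domains_below` helpers are hypothetical illustrations; the official AIMA material may score responses differently.

```python
# Level descriptions as summarized in this article for OWASP AIMA.
LEVELS = {
    1: "comprehensive AI strategy with metrics",
    2: "continuous improvement",
    3: "optimization",
}

AIMA_DOMAINS = ("Accountability", "Governance", "Data Management", "Privacy",
                "Implementation", "Verification", "Operation")


def achieved_level(criteria_met: dict[int, bool]) -> int:
    """Highest level L such that levels 1..L are all met (assumed cumulative rule)."""
    level = 0
    for lvl in sorted(LEVELS):
        if not criteria_met.get(lvl, False):
            break
        level = lvl
    return level


def domains_below(assessment: dict[str, dict[int, bool]], target: int) -> list[str]:
    """Domains whose achieved level is under the target, in AIMA_DOMAINS order."""
    return [d for d in AIMA_DOMAINS
            if achieved_level(assessment.get(d, {})) < target]


# Illustrative answers. Privacy marks level 3 as met but not level 2,
# so the cumulative rule caps it at level 1. Unanswered domains count as 0.
assessment = {
    "Accountability": {1: True, 2: True, 3: False},
    "Governance": {1: True, 2: True, 3: True},
    "Privacy": {1: True, 2: False, 3: True},
    "Verification": {1: False},
}
for domain in AIMA_DOMAINS:
    level = achieved_level(assessment.get(domain, {}))
    print(domain, level, LEVELS.get(level, "not yet at level 1"))
print("Below level 2:", domains_below(assessment, target=2))
```

The cumulative rule is a deliberate design choice: it prevents an organization from claiming optimization in a domain whose basic practices are not yet in place.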

Applying AIMA allows organizations not only to evaluate AI readiness but also to identify gaps that could lead to ethical, operational or cybersecurity risks.

It also supports more evidence-based decision-making, helping ensure that AI adoption aligns with resilience goals, regulatory expectations and long-term sustainability. Maturity assessment thus becomes a tool to prioritize investments, foster continuous improvement and build trustworthy AI ecosystems that balance innovation with security and accountability.

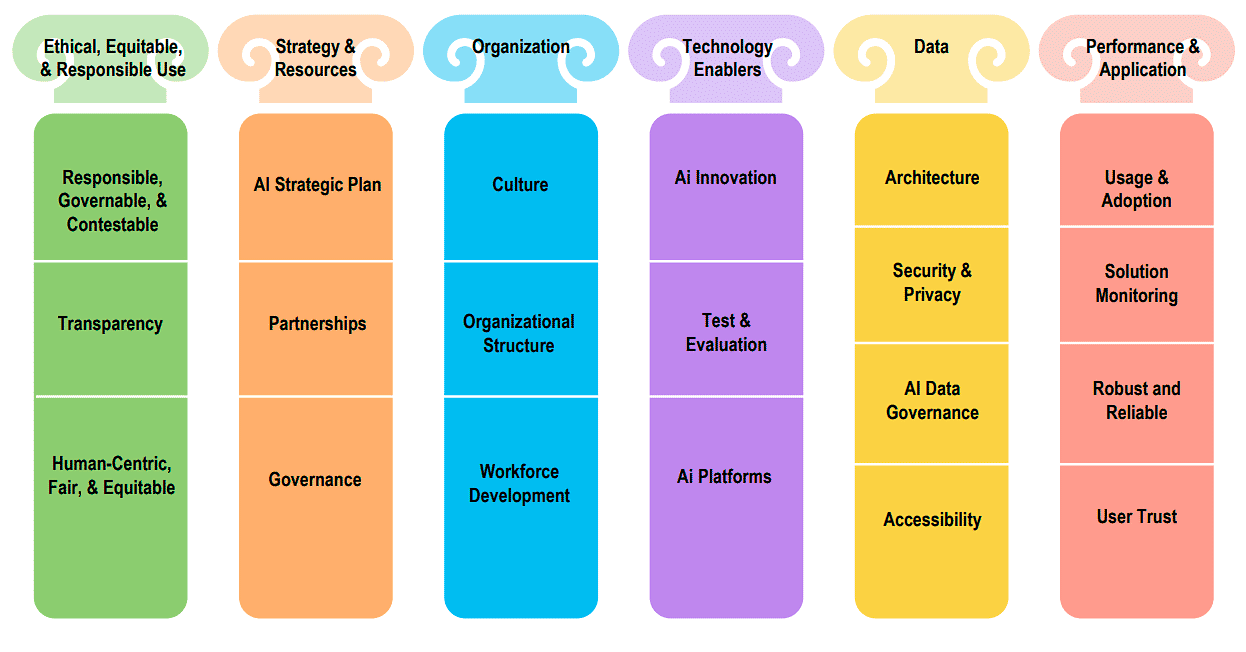

MITRE AI Maturity Model

In addition, the MITRE AI Maturity Model, together with its Assessment Tool (AT), is structured around the following domains, recognized as critical for successful AI adoption: Ethical, Equitable and Responsible Use; Strategy and Resources; Organization; Technology Enablers; Data; and Performance and Application.

Source: MITRE.

These pillars (the domains listed above) and their underlying dimensions are evaluated across five readiness levels adapted from the Capability Maturity Model Integration (CMMI), using qualitative and quantitative methods to describe hierarchical, scalable progress throughout AI adoption.
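The pillar structure also shows why a single headline number can mislead. The sketch below builds a per-pillar profile by averaging dimension ratings on the five-level scale and flags the weakest pillar. The averaging rule, the `pillar_profile` and `weakest_pillar` helpers and the ratings are hypothetical; MITRE's actual scoring method and dimension names are not reproduced here, only the six pillar names and the five-level range.

```python
from statistics import mean

# The six pillars named in the MITRE AI Maturity Model.
PILLARS = (
    "Ethical, Equitable and Responsible Use",
    "Strategy and Resources",
    "Organization",
    "Technology Enablers",
    "Data",
    "Performance and Application",
)
MIN_LEVEL, MAX_LEVEL = 1, 5  # five readiness levels, adapted from CMMI


def pillar_profile(dimension_scores: dict[str, list[int]]) -> dict[str, float]:
    """Average the dimension levels within each pillar (aggregation rule assumed)."""
    profile = {}
    for pillar in PILLARS:
        levels = dimension_scores.get(pillar, [])
        if not levels or any(not MIN_LEVEL <= lvl <= MAX_LEVEL for lvl in levels):
            raise ValueError(
                f"{pillar}: expected at least one level between {MIN_LEVEL} and {MAX_LEVEL}")
        profile[pillar] = mean(levels)
    return profile


def weakest_pillar(profile: dict[str, float]) -> str:
    """Pillar with the lowest average level."""
    return min(profile, key=profile.get)


# Illustrative ratings: strong technology, weak ethical oversight.
scores = {
    "Ethical, Equitable and Responsible Use": [1, 2],
    "Strategy and Resources": [3, 3, 4],
    "Organization": [3, 2],
    "Technology Enablers": [5, 4, 4],
    "Data": [4, 3],
    "Performance and Application": [4, 5],
}
profile = pillar_profile(scores)
print(f"Overall average: {mean(profile.values()):.2f}")
print("Weakest pillar:", weakest_pillar(profile))
```

With these illustrative inputs the overall average is about 3.28, while the ethical pillar sits at 1.5: a moderate overall score that hides exactly the kind of ethical-oversight gap the model is designed to surface.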

Unlike ad hoc or narrowly focused assessments, this framework emphasizes maturity in governance, risk management, workforce readiness, operability and alignment with strategic objectives.

Fundamentally, this approach enables organizations not only to benchmark their current state but also to identify gaps in areas such as ethical oversight, resilience and adaptability, which are often overlooked when AI is assessed solely through performance metrics.

The model drives evidence-based decisions, linking AI maturity levels with organizational outcomes and presenting maturity development as a progressive process that requires aligning people, processes and technology, rather than a static goal. This elevates maturity assessment from a compliance checklist to a strategic tool for sustainable and responsible AI adoption.

Assessing AI maturity is key to resilience and responsible innovation

Evaluating AI maturity is no longer a theoretical exercise but a strategic necessity for organizations operating in an increasingly digital and interconnected environment. These assessments help companies understand not only where their AI initiatives stand today, but also how to align investments, governance and skills to deliver sustainable value in the future.

By examining AI capabilities across dimensions such as data readiness, technology infrastructure, governance, security, talent and ethical adoption, organizations can transform maturity assessments into viable roadmaps for innovation and resilience.

■ In this sense, assessing AI maturity is not just about meeting requirements on paper, but about demonstrating through measurable actions that an organization is prepared to leverage AI responsibly, securely and sustainably.

Ultimately, assessing AI maturity is less about reaching a final destination and more about enabling a continuous journey of adaptation, accountability and trust. As AI systems advance and associated risks, opportunities and regulatory frameworks evolve, organizations that integrate maturity assessment into their strategic decision-making will be better positioned to deploy AI responsibly, strengthen resilience and achieve sustainable competitive advantage in the long term.
