Improving LLM performance using the 'Method Actor' technique

In the world of Generative AI, LLM-based tools like Copilot, Perplexity, or ChatGPT have proven capable and highly useful for a wide variety of tasks. However, when it comes to more complex tasks, these models often fall short. This is largely because they struggle to contextualize a problem and break it down into manageable parts, the way a person facing the same task would.

This is where the 'Method Actor' approach comes into play: a technique that, according to a recent study, significantly improves LLM performance on tasks that require more complex processes.

What is the 'Method Actor' approach applied to LLMs?

The 'method actor' approach is a mental model for guiding prompt engineering and prompt architecture for LLMs. It treats LLMs as actors, prompts as scripts, and responses as performances.

The central idea is that, just as method actors in film or theater immerse themselves deeply in the character they portray to achieve a more authentic and convincing performance, LLMs can 'get into the role' assigned in the prompt to produce more accurate and coherent responses.

⚠️ Assigning a role (roleplay) to an LLM is good practice for holding effective conversations with Generative AI models. However, it can also be used to attack LLMs, bypassing security restrictions or inducing the model to provide restricted information, as we saw in Attacks to Artificial Intelligence (I): Jailbreak.

Key principles of the 'Method Actor' approach

- Prompt engineering is like writing scripts and directing: Prompts should provide context, motivation, and 'stage direction' to guide the LLM.

- Performance requires preparation: Just as an actor prepares for a role, the LLM needs 'preparation' in the form of additional information and intermediate steps.

- Decomposition of complex tasks: Tasks should be divided into simpler subtasks, down to the point where imitation and authenticity produce equivalent results.

- Compensation for limitations: When imitation fails, methods that do not depend on LLMs should be used to compensate.
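The principles above can be sketched as a small pipeline. This is a minimal illustration, not Doyle's actual architecture: it assumes a hypothetical `llm` callable (any function that maps a prompt string to a reply string) and a toy word-grouping task. Each model call is a narrow "scene" with its own script, and a plain-Python validator compensates for the model by rejecting malformed answers before they are accepted. All function names here are hypothetical.

```python
from typing import Callable, List, Optional

# Hypothetical model interface: any function that maps a prompt to a reply.
LLM = Callable[[str], str]


def scene_prompt(words: List[str]) -> str:
    """Script for one narrow subtask: a role plus explicit stage directions."""
    return (
        "You are a seasoned word-puzzle solver. "
        "From the words below, pick exactly four that share a hidden connection. "
        "Reply with the four words only, comma-separated.\n"
        "Words: " + ", ".join(words)
    )


def parse_group(reply: str) -> List[str]:
    """Turn the model's reply into a normalized (upper-case) list of words."""
    return [w.strip().upper() for w in reply.split(",") if w.strip()]


def is_valid_group(group: List[str], words: List[str]) -> bool:
    """Non-LLM compensation: deterministic checks the model may get wrong."""
    return (
        len(group) == 4
        and len(set(group)) == 4
        and all(w in words for w in group)
    )


def find_group(llm: LLM, words: List[str], max_attempts: int = 3) -> Optional[List[str]]:
    """Ask for one group at a time; reject invalid performances and retry."""
    for _ in range(max_attempts):
        group = parse_group(llm(scene_prompt(words)))
        if is_valid_group(group, words):
            return group
    return None  # give up; the caller decides what to do next


def solve(llm: LLM, words: List[str]) -> List[List[str]]:
    """Decompose the whole puzzle into repeated single-group subtasks."""
    remaining = list(words)
    groups: List[List[str]] = []
    while remaining:
        group = find_group(llm, remaining)
        if group is None:
            break
        groups.append(group)
        remaining = [w for w in remaining if w not in group]
    return groups


# Offline stub standing in for a real model; the first reply is malformed on purpose.
replies = iter([
    "bass, pike, sole",
    "bass, pike, sole, cod",
    "red, blue, green, gold",
])


def stub_llm(prompt: str) -> str:
    return next(replies)


words = ["BASS", "RED", "PIKE", "BLUE", "SOLE", "GREEN", "COD", "GOLD"]
result = solve(stub_llm, words)
print(result)
```

The stub lets the example run without any API; its first, three-word reply fails validation and triggers a retry, which is the "compensate when imitation fails" step in miniature.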

Examples

Solving a complex mathematical problem

- Simple prompt:

> Solve the differential equation: dy/dx = 3x² + 2x - 5

- 'Method actor' prompt:

> You are a mathematician specialized in differential calculus. Break down the differential equation dy/dx = 3x² + 2x - 5 into its simplest parts, explain each step of the solution, and provide the final solution.
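For reference, the step-by-step solution the role prompt asks for comes down to a single integration, since the right-hand side depends only on x:

```latex
\frac{dy}{dx} = 3x^2 + 2x - 5
\quad\Longrightarrow\quad
y = \int \left(3x^2 + 2x - 5\right)\,dx = x^3 + x^2 - 5x + C
```

Differentiating x³ + x² - 5x + C gives back 3x² + 2x - 5, which confirms the result.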

Writing a literary analysis

- Simple prompt:

> Write an analysis of the use of symbolism in 'The Great Gatsby'

- 'Method Actor' prompt:

> You are a literature professor specialized in the work of F. Scott Fitzgerald. Analyze the use of symbolism in 'The Great Gatsby,' focusing on the main symbols such as Dr. T. J. Eckleburg's eyes, the color green, and the valley of ashes. Explain how each symbol contributes to the overall theme of the novel.
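One way to treat a prompt as a script is to keep its parts separate (the role, the task, and numbered stage directions) and assemble them only at the end. The sketch below does this for the literary-analysis prompt; the `Script` class and its `render` method are hypothetical names, not part of any library.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Script:
    """Hypothetical container for a 'method actor' prompt."""
    role: str                                              # who the model should "become"
    task: str                                              # what the scene is about
    directions: List[str] = field(default_factory=list)    # stage directions, in order

    def render(self) -> str:
        lines = [f"You are {self.role}.", self.task]
        # Number the stage directions so each step is explicit to the model.
        lines += [f"{i}. {d}" for i, d in enumerate(self.directions, start=1)]
        return "\n".join(lines)


gatsby = Script(
    role="a literature professor specialized in the work of F. Scott Fitzgerald",
    task="Analyze the use of symbolism in 'The Great Gatsby'.",
    directions=[
        "Focus on the main symbols: Dr. T. J. Eckleburg's eyes, the color green, "
        "and the valley of ashes.",
        "Explain how each symbol contributes to the overall theme of the novel.",
    ],
)

prompt = gatsby.render()
print(prompt)
```

Keeping the directions as a list makes it easy to add or drop steps for each task, and it also makes it easier to keep the final prompt from growing longer than it needs to be.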

⚠️ Overly long prompts can backfire. They can cause the problem known as lost in the middle: when the prompt contains too much text (for example, a very long instruction), the LLM may 'forget' part of what you asked, especially content buried in the middle, and lose coherence in its response.

Specific improvements introduced by the 'Method Actor' model

In a recent study, researcher Colin Doyle describes several key improvements that the 'method actor' technique brings to LLM performance. According to him, it achieves:

- Greater accuracy in complex reasoning tasks: By breaking down tasks and providing richer context, LLMs can tackle complex problems more effectively.

- Better handling of context: The method allows for more efficient management of the LLM's context window, resulting in more coherent and relevant responses.

- Reduction of hallucinations: By focusing on 'performance' rather than the generation of thoughts, it reduces LLMs' tendency to produce false or irrelevant information.

- Enhanced flexibility: The approach allows adapting prompts and even the system architecture to the specific needs of each task.

■ When working with LLMs through API calls, messages are often tagged with role labels: system, assistant, and user. Sometimes, especially in N-shot prompting exercises for training or performance evaluation, a scripted dialogue between these roles is placed before the actual request.
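As a minimal sketch, assuming the common chat format in which each message is a dictionary with `role` and `content` keys (as in OpenAI-style chat APIs), the following builds a request with a system role, one scripted user/assistant exchange as an N-shot example, and the real question. It only assembles the message list; sending it to a model is left out, and `build_messages` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(persona: str,
                   shots: List[Tuple[str, str]],
                   question: str) -> List[Message]:
    """Assemble a chat request: system role, scripted N-shot dialogue, real question."""
    messages: List[Message] = [{"role": "system", "content": persona}]
    for user_turn, assistant_turn in shots:
        # Each example is a pre-written exchange the model has "already performed".
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    messages.append({"role": "user", "content": question})
    return messages


messages = build_messages(
    persona="You are a mathematician specialized in differential calculus. "
            "Explain each step before giving the final solution.",
    shots=[(
        "Solve dy/dx = 3x^2 + 2x - 5",
        "Integrate term by term: 3x^2 -> x^3, 2x -> x^2, -5 -> -5x. "
        "Final solution: y = x^3 + x^2 - 5x + C",
    )],
    question="Solve dy/dx = 6x^2 - 4",
)

for m in messages:
    print(f"{m['role']:>9}: {m['content'][:60]}")
```

Adding more tuples to `shots` turns this one-shot prompt into an N-shot one without changing anything else.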

Study findings

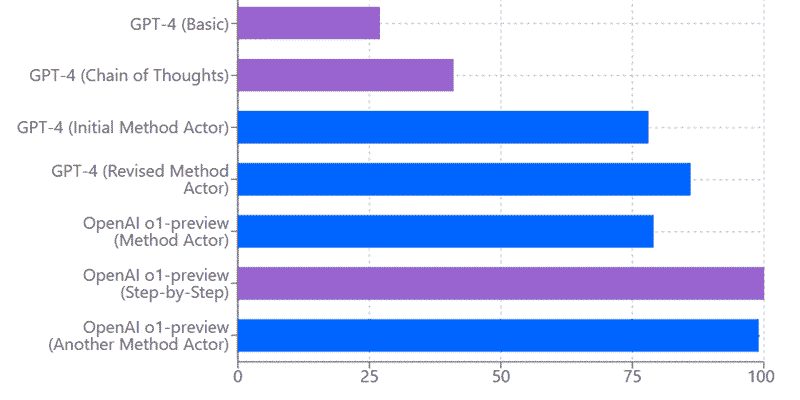

The researcher applied the 'method actor' technique to solving the Connections puzzles from The New York Times, a word game in which players must sort 16 words into four groups of four related words. Doyle reports the results of testing different techniques on two models:

Graph generated using Claude

Puzzles solved with GPT-4o

- Basic approach: 27%

- 'Chain of Thought' approach: 41%

- Initial 'method actor' approach: 78%

- Revised 'method actor' approach: 86%

Puzzles solved with OpenAI's o1-preview

- Basic approach (whole puzzle at once): 79%

- Step-by-step approach (one guess at a time): 100%

- 'Method actor' approach: 99%, with 87% solved perfectly

According to the study, these results show that the 'method actor' technique can improve LLM performance on complex reasoning tasks and that it can even "surpass human experts in certain tasks."

Conclusion

The 'method actor' technique can improve our interaction with LLMs. Turning these models into actors capable of playing a role helps to obtain more accurate and coherent results in a wide variety of complex tasks.

This approach enhances the performance of existing LLMs and opens new possibilities for the development of more sophisticated and capable AI systems.

This research is a good reminder that sometimes the key to unlocking AI's potential lies not in the technology itself but in how we conceptualize and use it. Thinking of LLMs as actors on a set allows us to better leverage their capabilities, overcome their limitations, and build more intuitive and effective human-machine interaction.

■ You can download Colin Doyle's paper here: LLMs as Method Actors: A Model for Prompt Engineering and Architecture.

— CONTRIBUTED BY Manuel de Luna —
