Project Zero: discovering vulnerabilities with LLMs

Introduction

Few people doubt that generative artificial intelligence will transform the discovery of software vulnerabilities; what is not yet clear is when.

For those who don't know, Project Zero is a Google initiative created in 2014 to study and mitigate the potential impact of 0-day vulnerabilities in the hardware and software systems that users around the world depend on. A 0-day vulnerability is one that attackers know about and for which the vendor has not yet released a patch.

Traditionally, identifying software flaws has been a laborious process prone to human error. Generative AI, particularly when designed as an agent that can reason and use auxiliary tools to emulate a specialized human researcher, can analyze large volumes of code efficiently and accurately, identifying patterns and anomalies that might otherwise go unnoticed.

Therefore, in this article we will review the advances made by Project Zero's security researchers in the design and use of systems based on the capabilities of generative artificial intelligence to serve as a catalyst in the search, verification and remediation of vulnerabilities.

Origins: Naptime Project

These Google security researchers began designing and testing an LLM-based architecture for vulnerability discovery and verification in mid-2023. The project is described in detail in a mid-2024 article on their blog, which we highly recommend to anyone interested.

We will focus here on its design principles and architecture, since they show which components an LLM needs in order to work effectively in the search for vulnerabilities.

Design principles

Drawing on its broad experience in vulnerability research, the Project Zero team established a set of design principles that improve the performance of LLMs in this task by leveraging their strengths while addressing their current limitations. These are summarized below:

- Room for reasoning: It is crucial to allow LLMs to engage in deep reasoning processes. This method has proven to be effective in a variety of tasks, and in the context of vulnerability research, encouraging detailed and explanatory answers has led to more accurate results.

- Interactive environment: Interactivity within the program environment is essential, as it allows models to adjust and correct their errors, improving effectiveness in tasks such as software development. This principle is equally important in security research.

- Specialized tools: Providing LLMs with specialized tools, such as a debugger and a scripting environment, is essential to mirror the operating environment of human security researchers. Access to a Python interpreter, for example, enhances an LLM's ability to perform precise calculations, such as converting integers to their 32-bit binary representations. A debugger enables LLMs to accurately inspect program states at runtime and address errors effectively.

- Seamless verification: Unlike many reasoning-related tasks, where verification of a solution can introduce ambiguities, vulnerability discovery tasks can be structured so that potential solutions can be automatically verified with absolute certainty. This is key to obtaining reliable and reproducible baseline results.

- Sampling strategy: Effective vulnerability research often involves exploring multiple hypotheses. Rather than considering multiple hypotheses on a single trajectory, a sampling strategy is advocated that allows models to explore multiple hypotheses across independent trajectories, enabled by integrating verification within the system end-to-end.
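To make the last two principles more concrete, here is a minimal sketch of how automatic verification and independent sampling can fit together. It is not Naptime's implementation: the target (`toy_target`), the verifier and the random "hypothesis generator" are all hypothetical stand-ins we introduce for illustration, and in a real system each trajectory would be driven by an LLM rather than by random numbers.

```python
import random
import struct
from typing import List, Optional


def to_int32(value: int) -> int:
    """Reinterpret the low 32 bits of value as a signed 32-bit integer."""
    return struct.unpack("<i", struct.pack("<I", value & 0xFFFFFFFF))[0]


def toy_target(value: int) -> None:
    """Hypothetical target: fails when the input wraps to a negative index."""
    index = to_int32(value)
    if index < 0:
        raise IndexError(f"negative index {index}")


def verify(candidate: int) -> bool:
    """Automatic verification: a candidate counts only if the target fails."""
    try:
        toy_target(candidate)
    except IndexError:
        return True
    return False


def run_trajectory(seed: int, steps: int = 20) -> Optional[int]:
    """One independent trajectory with its own random state (assumption:
    random guesses stand in for the hypotheses an LLM would propose)."""
    rng = random.Random(seed)
    for _ in range(steps):
        candidate = rng.randrange(0, 2**32)
        if verify(candidate):
            return candidate
    return None


def sample(k: int) -> List[int]:
    """Run k independent trajectories and keep only verified findings."""
    results = (run_trajectory(seed) for seed in range(k))
    return [r for r in results if r is not None]
```

Because every finding returned by `sample` has already passed `verify`, no human judgment is needed to decide whether a trajectory succeeded, which is what makes running many trajectories in parallel practical.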

Naptime Architecture

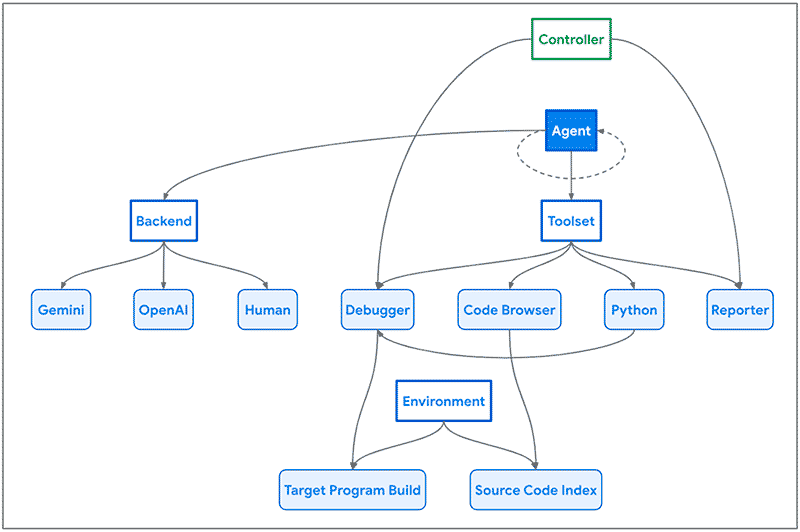

Naptime architecture - Image extracted from the Project Zero article.


As we see in the image above, Naptime's architecture focuses on the interaction between an AI agent and a target code base. The agent is provided with a set of specialized tools designed to mimic the workflow of a human security researcher.

These tools include a code browser, a Python interpreter, a debugger, and a reporting tool, which allow the agent to navigate the code base, execute Python scripts, interact with the program and observe its behavior, and communicate its progress in a structured way.
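The interaction between agent and tools can be pictured as a simple dispatch loop. The sketch below is our own illustration under stated assumptions, not the actual Naptime code: the tool names, the tiny `CODE_BASE` dictionary and the scripted list of actions are hypothetical, the debugger is left out for brevity, and the "Python interpreter" is reduced to a single calculation (the 32-bit binary form of an integer) instead of executing arbitrary scripts.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical miniature "code base": function name -> source text.
CODE_BASE: Dict[str, str] = {
    "parse_header": "int parse_header(char *buf, int len) { /* body omitted */ }",
}


def code_browser(name: str) -> str:
    """Return the source of a named function, or a not-found message."""
    return CODE_BASE.get(name, f"no such function: {name}")


def python_tool(value: str) -> str:
    """Stand-in for the interpreter: 32-bit binary form of an integer."""
    return format(int(value, 0) & 0xFFFFFFFF, "032b")


def reporter(message: str) -> str:
    """Record a structured progress report."""
    return f"REPORT: {message}"


# Registry of the tools the agent is allowed to call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "code_browser": code_browser,
    "python": python_tool,
    "reporter": reporter,
}


def run_agent(actions: List[Tuple[str, str]]) -> List[str]:
    """Dispatch each (tool, argument) request and collect the observations.

    In a real system the actions would come from the model one at a time,
    each chosen after reading the previous observation.
    """
    observations = []
    for tool_name, argument in actions:
        tool = TOOLS.get(tool_name)
        if tool is None:
            observations.append(f"unknown tool: {tool_name}")
            continue
        observations.append(tool(argument))
    return observations
```

For example, `run_agent([("code_browser", "parse_header"), ("python", "-1")])` returns the stored source line followed by a string of thirty-two `1` characters, the two's-complement form of -1.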

Origins: Benchmark – CyberSecEval 2

Another important milestone in the evolution and measurement of these systems came in April 2024, when Meta researchers published and presented CyberSecEval 2, a benchmark designed to evaluate the security capabilities of LLMs. It extends an earlier proposal by the same authors with additional tests for prompt injection and code interpreter abuse, as well as vulnerability identification and exploitation.

This is crucial, as such a framework allows for standardized measurement of system capabilities as improvements are generated, following the principle of popular business wisdom:

What is not measured cannot be evaluated.

Evolution towards Big Sleep

A key motivating factor for Naptime and subsequently Big Sleep was the ongoing discovery in the wild of exploits for variants of previously found and patched vulnerabilities.

The researchers point out that the task of variant analysis is better suited to current LLMs than the more general problem of open-ended vulnerability research.

Providing a specific starting point, such as the details of a previously fixed vulnerability, removes a lot of ambiguity from vulnerability research and lets the search start from a well-founded theory:

This was a previous bug; there's probably another similar one out there somewhere.

- On these premises, Naptime has evolved into Big Sleep, a collaboration between Google Project Zero and Google DeepMind.

- Big Sleep, equipped with the Gemini 1.5 Pro model, has been used to obtain the following result.

First real results obtained

In an article that we again strongly encourage you to read, the researchers testing Big Sleep report that it discovered its first real-world vulnerability, in the well-known and popular open source database project SQLite.

In short: they collected a series of recent commits in the SQLite repository. They adjusted the prompt to provide the agent with both the commit message and a diff of the change, and asked the agent to check the current repository for related issues that might not have been fixed.
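A prompt of this kind could be assembled roughly as follows. This is only a sketch: the function name `build_variant_prompt` and the exact wording of the instructions are our assumptions, not the prompt the Big Sleep team used.

```python
def build_variant_prompt(commit_message: str, diff: str) -> str:
    """Combine a commit message and its diff into a variant-analysis prompt.

    The instruction text is hypothetical; only the two inputs (commit
    message and diff) and the task (look for related, unfixed issues)
    come from the description above.
    """
    return "\n".join([
        "A recent commit to the repository is shown below.",
        "",
        "Commit message:",
        commit_message.strip(),
        "",
        "Diff:",
        diff.strip(),
        "",
        "Check the current repository for related issues that this "
        "change might not have fixed.",
    ])
```

Starting from a concrete fix gives the agent a bounded question to investigate, rather than asking it to audit the whole code base from scratch.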

Big Sleep discovered and verified a memory mismanagement vulnerability (Stack Buffer Underflow) in SQLite.

Eureka! Big Sleep found and verified a memory-safety vulnerability (a stack buffer underflow) that was reported to the SQLite developers. Because it was fixed the same day and had not been included in any official release, it did not affect users of the popular database.

Conclusions

Finding vulnerabilities in software before it is released leaves attackers no room to compete: the vulnerabilities are fixed before attackers have a chance to use them. These early results support the hope that AI may be key in this step to finally turn the tables and achieve an asymmetric advantage for defenders.


In our view, two current lines of work will be key in the near future: models with greater reasoning capacity (e.g. the o1 model announced by OpenAI) and agents that give the system greater autonomy to interact with its environment (e.g. computer use by Anthropic). Together they should help this type of system reach vulnerability discovery capabilities equal to, or even superior to (or rather complementary to), those of human researchers.

There is still a lot of work ahead before these systems become an effective aid for discovering and remediating vulnerabilities, particularly in complex cases. Even so, the architecture described here and the natural evolution of LLMs let us glimpse a near future in which their use will be predominant and key to improving the security of the world's software.

Let us end with an adaptation of astronaut Neil Armstrong's famous words:

One small step for LLMs, one giant leap for cyber security defense capabilities

Image: Freepik.
