Cyber Security

AI & Data

Google: Real-time detection and protection against phone scams

Introduction

Scammers steal over a billion dollars a year from individuals, and phone calls are their preferred method. Even more alarming, phone scams keep evolving, becoming increasingly sophisticated, harmful, and difficult to detect. For this reason, many operators and tech giants are turning to solutions powered by Artificial Intelligence (AI) to strengthen defenses on two fronts: identifying fraudulent calls in real time, and developing innovative strategies to divert attackers' attention and protect their customers.

In this article, we'll review the basics of phone scams, how the industry is leveraging AI to build detection and user-protection tools, and the impact of these advances on user privacy, concluding with insights and basic tips to defend against these attacks.

AI is becoming the first line of defense against phone scams. Let's break it down.

What is a phone scam?

A phone scam occurs when someone tries to trick you into handing over personal information, money, or access to your devices through a call, text message, WhatsApp message, or voicemail. Scammers may impersonate a legitimate company, a government agency, or even someone you know, such as a friend, family member, or colleague, to gain your trust. Once they succeed, they typically ask for your financial data, passwords, or personal information, which they can use to steal your money or your identity.

Most common phone scam types

Phishing: You receive a call or text message that claims to come from your bank, a delivery company, the government, or a service provider and asks you to confirm personal details. These messages often convey urgency and may sound serious, or too tempting to pass up, such as a special offer or a warning that you are about to lose access to a service.

Tech support: Someone pretends to be from a tech company and warns you about a problem with your device. They ask for remote access or a payment to fix it, but in reality they are accessing your personal data.
Missed calls: You get a missed call from an unknown number, often from abroad. When you call back, you are unknowingly charged a premium rate.

Prizes and lotteries: You're told you've won a prize, but to claim it you must pay a fee or provide personal details. If it sounds too good to be true, it probably is.

Google: Smarter AI-powered protection against scams

Google recently announced the integration of real-time scam detection into the Google Phone app, available on many Android phones. The feature, currently in beta, will keep evolving based on feedback from users who opt in to test it. The rollout starts with Google's Pixel devices and is expected to expand to more Android devices soon.

How does Google's scam detection feature work?

Google's scam detection uses powerful on-device AI (a critical consideration for privacy, as we'll discuss later) to warn you in real time about potential scam calls by detecting conversational patterns commonly associated with scams. For example, if a caller claims to be from your bank and urgently asks you to transfer funds because of a supposed account breach, the tool analyzes the call to determine whether it is likely to be fraudulent. If so, it can issue an audio alert, a vibration, and a visual warning that the call might be a scam.

Real-time alert generated by Google's scam detection tool

What about privacy?

In this case, Google, mindful of potential pushback from privacy-conscious users, has followed the principle of privacy by design and by default (PbD) to safeguard privacy and ensure that users always stay in control of their data. Scam detection is disabled by default, and you can choose whether to enable it for future calls. The AI detection model and all processing run entirely on the device, which means no audio or transcription of the conversation is stored on the device, sent to Google's servers, or retrievable after the call.
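The article does not describe the internals of Google's model, so the following Python snippet is only a toy illustration of the idea of "detecting conversational patterns", not Google's method. A hand-written list of hypothetical cue phrases stands in for the learned on-device model, and an alert fires once several distinct cue categories (impersonation, urgency, payment or credential requests) have appeared in the transcript as the call progresses.

```python
import re
from typing import Iterable, Optional, Set

# Hypothetical cue phrases. A real on-device model learns such patterns from data;
# this hand-written list only illustrates the idea of combining several signals.
CUES = {
    "impersonation": [r"\byour bank\b", r"\bfraud department\b", r"\bgovernment agency\b"],
    "urgency": [r"\bimmediately\b", r"\burgent(ly)?\b", r"\bright now\b"],
    "payment_request": [r"\btransfer\b", r"\bgift cards?\b", r"\bsafe account\b"],
    "credential_request": [r"\bpin\b", r"\bpassword\b", r"\bverification code\b"],
}


def matched_cues(text: str) -> Set[str]:
    """Return the cue categories found in one fragment of the call transcript."""
    lowered = text.lower()
    return {
        category
        for category, patterns in CUES.items()
        if any(re.search(pattern, lowered) for pattern in patterns)
    }


def first_alert(chunks: Iterable[str], threshold: int = 2) -> Optional[int]:
    """Process transcript chunks as they arrive and return the index of the chunk
    at which at least `threshold` distinct cue categories have been seen."""
    seen: Set[str] = set()
    for index, chunk in enumerate(chunks):
        seen |= matched_cues(chunk)
        if len(seen) >= threshold:
            return index  # this is where the audio/vibration/visual warning would fire
    return None
```

Requiring several distinct categories, rather than a single keyword, is one simple way to avoid warning on every genuine call from a bank that happens to mention a transfer.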
At any time, users can disable scam detection for all calls in the Google Phone app settings, or for an individual call.

O2: AI simulates an elderly persona to distract scammers

Meanwhile, British telecom operator O2, part of the Telefónica group, continues to invest in fraud prevention through AI-powered anti-spam tools and new caller identification services at no additional cost to users. O2 has developed dAIsy, a cutting-edge AI bot designed to keep scammers talking on the phone for as long as possible. While they're busy talking to dAIsy, they're not bothering you. More information about dAIsy is available in the following video.

The bot is inspired by an earlier tool called Lenny, a voice chatbot that uses sixteen pre-recorded audio clips to mimic a slow-speaking, tech-challenged elderly person. Lenny has also been used to counter unsolicited telemarketing calls. It is still too early to evaluate dAIsy's results, but its predecessor is encouraging: despite its limited repertoire of responses, Lenny has on occasion kept unwanted callers on the line for an hour or more. That promising outcome inspired the creation of this new "endearing" robot.

Conclusions

Attackers are using previously unseen capabilities enabled by generative AI, such as voice cloning and real-time translation, to make scam calls harder to identify. It is reassuring, and logical, to see the cybersecurity industry respond in kind, designing and deploying tools that leverage this disruptive technology to protect users, as the examples of Google and O2 in this article show. These AI-based protection tools will become widespread and part of our daily lives in the near future. Until that happens, common sense remains our greatest ally.
Here are some basic tips to protect yourself from scams, which will remain relevant even as AI helps us become more resilient:

Be cautious with unsolicited calls: If someone calls asking for personal information, hang up and call the company directly using a verified number.

Don't share personal details: Banks, government agencies, and service providers will never ask for passwords or PINs over the phone.

Be wary of urgent requests: Scammers often create a sense of urgency to manipulate victims. Take a moment to think before responding.

Block suspicious numbers: If you keep receiving calls from a dubious number, block it.

We close with a well-known quote from the late English playwright Noël Coward: "It's discouraging to think how many people are shocked by honesty and how few by deceit."

December 23, 2024

Project Zero, discovering vulnerabilities with LLM models

Introduction

Few people doubt that generative artificial intelligence will transform the discovery of software vulnerabilities; what is not yet clear is simply when.

For those unfamiliar with it, Project Zero is a Google initiative created in 2014 to study and mitigate the potential impact of 0-day vulnerabilities (vulnerabilities that attackers know about and for which the vendor has not yet released a patch) in the hardware and software systems that users around the world depend on.

Traditionally, identifying software flaws has been a laborious process prone to human error. However, generative AI, particularly when architected as an agent capable of reasoning and equipped with auxiliary tools that let it emulate a specialized human researcher, can analyze large volumes of code efficiently and accurately, identifying patterns and anomalies that might otherwise go unnoticed.

In this article, we review the progress made by Project Zero's security researchers in designing and using systems built on generative AI to act as a catalyst in the search, verification, and remediation of vulnerabilities.

Origins: Naptime Project

These Google security researchers began designing and testing an LLM-based architecture for vulnerability discovery and verification in mid-2023. The project is described in detail in a mid-2024 article on their blog, which we highly recommend to interested readers. Here we focus on its design principles and architecture, since they help explain the key components an LLM needs to work effectively in the search for vulnerabilities.
Design principles

Drawing on the Project Zero team's broad experience in vulnerability research, the researchers established a set of design principles to improve LLM performance by leveraging the models' strengths while addressing their current limitations in this field. They are summarized below:

Room for reasoning: It is crucial to let LLMs engage in deep reasoning. This approach has proven effective in a variety of tasks, and in vulnerability research, encouraging detailed, explanatory answers has led to more accurate results.

Interactive environment: Interactivity within the program environment is essential, because it lets models adjust and correct their mistakes, which has improved their effectiveness in tasks such as software development. This principle is equally important in security research.

Specialized tools: Giving LLMs specialized tools, such as a debugger and a scripting environment, is essential to mirror the working environment of human security researchers. Access to a Python interpreter, for example, improves an LLM's ability to perform precise calculations, such as converting integers to their 32-bit binary representations. A debugger lets the LLM accurately inspect program state at runtime and address errors effectively.

Seamless verification: Unlike many reasoning tasks, where verifying a solution can introduce ambiguity, vulnerability discovery tasks can be structured so that candidate solutions are verified automatically and with absolute certainty. This is key to obtaining reliable, reproducible baseline results.

Sampling strategy: Effective vulnerability research often involves exploring multiple hypotheses. Rather than considering several hypotheses within a single trajectory, the researchers advocate a sampling strategy in which the model explores multiple hypotheses across independent trajectories, made possible by integrating verification end to end within the system.
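The last two principles, automatic verification and independent sampling, fit together naturally. The Python sketch below is a hypothetical illustration, not Project Zero's code: `toy_target` stands in for the program under test (it has a deliberately missing bounds check), `verify` is the unambiguous oracle that checks whether a candidate input crashes it, and `trajectory` is a placeholder for one independent agent run, reduced here to a seeded random guess. Because every candidate is checked automatically, the system can simply run many independent attempts and keep the first verified one.

```python
import random
from typing import Callable, Optional


def toy_target(data: bytes) -> None:
    """Stand-in for the program under test, with a deliberate bug."""
    buffer = [0] * 4
    if len(data) >= 2 and data[0] == 0xFF:
        index = data[1]      # index byte taken straight from the input
        buffer[index] = 1    # missing bounds check: IndexError when index >= 4


def verify(candidate: bytes) -> bool:
    """Automatic, unambiguous oracle: does this input crash the target?"""
    try:
        toy_target(candidate)
    except IndexError:       # our stand-in for a memory-safety crash
        return True
    return False


def trajectory(seed: int) -> bytes:
    """Hypothetical placeholder for one independent agent run (here: a random guess)."""
    rng = random.Random(seed)
    return bytes([rng.choice([0x00, 0x7F, 0xFF]), rng.randrange(0, 8)])


def sample_until_verified(
    attempts: int, run: Callable[[int], bytes] = trajectory
) -> Optional[bytes]:
    """Run independent trajectories and return the first verified crashing input."""
    for seed in range(attempts):
        candidate = run(seed)
        if verify(candidate):
            return candidate
    return None
```

A real agent would replace `trajectory` with a full reasoning session using the tools described in this article; the surrounding loop and the verifier are what make it safe to discard unverified hypotheses.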
Naptime architecture

Naptime architecture - Image extracted from the Project Zero article.

As the image above shows, Naptime's architecture centers on the interaction between an AI agent and a target code base. The agent is given a set of specialized tools designed to mimic the workflow of a human security researcher: a code browser, a Python interpreter, a debugger, and a reporting tool. These let the agent navigate the code base, run Python scripts, interact with the program and observe its behavior, and report its progress in a structured way.

Origins: Benchmark – CyberSecEval 2

Another important milestone in the evolution, and in measuring the value, of these intelligent systems for vulnerability research came in April 2024, when Meta researchers published and presented CyberSecEval 2, a benchmark designed to evaluate the security capabilities of LLMs. It extends an earlier proposal by the same authors with additional tests for prompt injection and code interpreter abuse, as well as vulnerability identification and exploitation. This is crucial, because such a framework enables standardized measurement of system capabilities as improvements are made, in line with the popular business maxim: what is not measured cannot be evaluated.

Evolution towards Big Sleep

A key motivation for Naptime, and later for Big Sleep, was the continued discovery in the wild of exploits for variants of previously found and patched vulnerabilities. The researchers point out that variant analysis is better suited to current LLMs than the more general problem of open-ended vulnerability research. As they put it, providing a specific starting point, such as the details of a previously fixed vulnerability, removes a lot of ambiguity and lets the agent start from a well-founded theory: this was a previous bug; there's probably another similar one out there somewhere.
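To make the architecture more concrete, here is a minimal Python sketch of how an agent's structured tool calls might be dispatched. The class, tool names, and action format are hypothetical and are not Naptime's actual interface. The "Python interpreter" is a toy expression evaluator with builtins removed, which is not a real sandbox, and the debugger is omitted because it would need a live target process.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolBox:
    """Hypothetical registry of the tools an agent can call."""

    source: Dict[str, str]                          # file path -> file contents
    reports: List[str] = field(default_factory=list)

    def code_browser(self, path: str, needle: str) -> List[int]:
        """Return the 1-based line numbers in `path` that contain `needle`."""
        lines = self.source[path].splitlines()
        return [number for number, line in enumerate(lines, start=1) if needle in line]

    def python(self, expression: str) -> Any:
        """Evaluate an arithmetic expression (toy only: eval is NOT a sandbox)."""
        return eval(expression, {"__builtins__": {}}, {})

    def reporter(self, summary: str) -> str:
        """Record the agent's progress or final finding in a structured log."""
        self.reports.append(summary)
        return "recorded"

    def dispatch(self, action: Dict[str, Any]) -> str:
        """Execute one action emitted by the model and return the observation text."""
        tools: Dict[str, Callable[..., Any]] = {
            "code_browser": self.code_browser,
            "python": self.python,
            "reporter": self.reporter,
        }
        return str(tools[action["tool"]](**action["args"]))
```

In an agent loop, each string returned by `dispatch` would be fed back to the model as the observation for its next reasoning step.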
On these premises, Naptime evolved into Big Sleep, a collaboration between Google Project Zero and Google DeepMind. Big Sleep, equipped with the Gemini 1.5 Pro model, was used to obtain the following result.

First real results

In an article that we again strongly encourage you to read, the researchers testing Big Sleep report that it discovered its first real-world vulnerability, in the widely used open-source database engine SQLite. In short, they collected a series of recent commits from the SQLite repository, adjusted the prompt to give the agent both the commit message and a diff of each change, and asked the agent to check the current repository for related issues that might not have been fixed.

Eureka! Big Sleep found and verified a memory-safety vulnerability (a stack buffer underflow), which was reported to the SQLite developers. Because it was fixed the same day, before appearing in any official release, it did not affect users of the popular database.

Conclusions

Finding vulnerabilities in software before it is released leaves attackers no room to compete: vulnerabilities are fixed before attackers have a chance to use them. These early results support the hope that AI may be key to finally turning the tables and giving defenders an asymmetric advantage.

In our view, the current lines of work on models with greater reasoning capacity (e.g. the o1 model announced by OpenAI) and the emergence of agents that give the system more autonomy to interact with its environment (e.g. computer use by Anthropic) will be key, in the near future, to closing the gap, so that these systems can discover vulnerabilities with capabilities equal to, or even greater than (or rather, complementary to), those of human researchers.

There is still a lot of work ahead before these systems become an effective aid in discovering and remediating vulnerabilities, particularly in complex cases. But the architecture designed and the natural evolution of LLMs undoubtedly let us glimpse a near future in which their use will be widespread and key to improving the security of the world's software.

Let us end with an adaptation of astronaut Neil Armstrong's famous words: "One small step for LLMs, one giant leap for cyber security defense capabilities."
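The variant-analysis setup the researchers describe for SQLite, where the commit message and diff of a previously fixed bug serve as the starting point, can be sketched in Python as follows. The prompt wording, function names, and sample C snippet are hypothetical, since the article does not publish Big Sleep's actual prompt; the diff is produced with the standard library's `difflib.unified_diff`.

```python
import difflib


def make_diff(old: str, new: str, path: str) -> str:
    """Build a unified diff of one file, similar in format to `git diff` output."""
    return "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )


def variant_prompt(commit_message: str, diff: str) -> str:
    """Hypothetical prompt: start from a known fix and hunt for unfixed variants."""
    return (
        "A previous commit fixed the bug described below.\n\n"
        f"Commit message:\n{commit_message}\n\n"
        f"Diff:\n{diff}\n"
        "Task: search the current code base for related issues that this fix may "
        "have missed. For any candidate, produce an input that triggers it so that "
        "the finding can be verified automatically."
    )


# Hypothetical example fix: an added upper-bound check.
old_code = "if (i > 0) {\n  a[i - 1] = x;\n}\n"
new_code = "if (i > 0 && i <= n) {\n  a[i - 1] = x;\n}\n"
prompt = variant_prompt(
    "Add missing upper bound check", make_diff(old_code, new_code, "src/example.c")
)
```

Anchoring the agent on a concrete fix narrows the search space: instead of auditing the whole code base, it looks for places where the same pattern survived.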

November 20, 2024

Guest registration controversy in Spain

This situation will be very familiar to readers; we've all experienced it. You arrive at a hotel or tourist accommodation and present your ID so the staff can collect the information required to register travelers. Nowadays, with the internet and the diversification of the tourism sector (unstaffed receptions, building entrances with access codes), the procedure can be carried out in many ways, even online, with data requested through messaging apps like WhatsApp.

Personally, I've always felt uncomfortable providing this personal information online. I've even created specific images of my national ID that hide data that, in my view, is not necessary for registration, although property owners often reject them.

In this article, we'll analyze a new controversy surrounding a legislative change that could mean greater privacy exposure and a heavier administrative burden for accommodations and for individuals offering these services, all in the name of greater protection and operational capacity for national security.

What is the origin of the controversy?

Until now, the regulation governing these guest registrations dated back to 2003 and required a set of 14 data points, such as identity document type and number, date of birth, full name, and date of entry. The new regulation, whose entry into force has been postponed until December 2, 2024, considerably expands this to 42 pieces of information that owners of tourist accommodations must collect. It now becomes necessary to record personal data such as current address, email, contact mobile number, the payment method used for the accommodation, and the relationship to any minors under 14 traveling in the group.

Why are these changes being made?
The Ministry of the Interior sets out its reasons for the new registry in the regulation itself: "Currently, the greatest threats to public safety are posed by both terrorist activity and organized crime, both of a marked transnational character. In both cases, lodging logistics and the acquisition or use of motor vehicles play a special role in criminals' modus operandi. Today these services are contracted through countless channels, including telematic ones, which afford greater privacy in these transactions."

This data must be submitted through an online platform called SES Hospedajes, created for this purpose by the Ministry of the Interior. However, both privacy and data protection experts and prominent members of the hospitality sector have expressed concern about the implementation of this new regulation.

The hospitality sector expresses disagreement: it believes the regulation notably increases the administrative burden and slows down check-in at accommodations, particularly for small businesses and individuals providing these services, with a direct impact on customer satisfaction.

From a privacy perspective, experts such as Borja Adsuara, a professor and lawyer specializing in digital law, are concerned and consider the amount and nature of the data requested in the upcoming regulation, which comes into effect this December, to be disproportionate to its intended purpose.

Protection of personal information

The Spanish Data Protection Agency has issued a technical report on the impact of the new royal decree, which we strongly encourage you to read in full. Some conclusions can be drawn from it:

The agency recommends an "impact assessment on data protection of the collection and communication described" to ensure compliance with the principle of data minimization under personal data protection regulations.
The agency highlights that European directives on the processing of personal data for the prevention, investigation, detection, or prosecution of criminal offenses have yet to be transposed in Spain, which, it warns, may render the provisions of the new royal decree meaningless.

The agency sees the need to clarify exactly which competent authorities are to receive the information, and questions the inclusion of the Ombudsman in general, given its purpose.

The agency adds that due legitimacy will be required for the interconnection with the state security forces' databases to take place within the scope of a specific investigation.

Conclusions

The balance between security and privacy is a very difficult target to hit; there will always be reasons to justify one position or the other. On the specific topic of this article, in my opinion, and in agreement with the Spanish Data Protection Agency's analysis, it is necessary to clarify and ensure compliance with the principles of data minimization and adequacy to the purpose of the processing, and to conduct a detailed and rigorous impact assessment that guarantees national security without putting individuals' and citizens' privacy at risk beyond what is strictly necessary.

On the other hand, in my view, using an online platform to submit the information, while it may ease implementation and usability, can also lead to transcription errors and create an "attractive" asset for cybercriminals: a database of sensitive and relevant information. With cyberattacks and leaks of confidential information on the rise, these databases must be hardened with the utmost care, following basic security principles such as zero trust, least privilege, and strict access control.
We conclude with a reflection from Edward Snowden: Arguing that you don’t care about privacy because you have nothing to hide is like arguing that you don’t care about free speech because you have nothing to say.

November 6, 2024

Thousands of vulnerable traffic lights must be replaced in the Netherlands

Smart IoT devices represent a significant step forward in urban infrastructure management, as part of the global Smart Cities initiative. The benefits are clear: better preventive maintenance, efficient resource management, and the ability to respond remotely in emergencies. However, these advantages must be accompanied by careful reflection, monitoring, and security measures that minimize the risks posed by potential attackers. The attack surface expands and, in this case, has a tangible impact on the physical world. Therefore, the principle of security by design should be strictly followed.

This is easier said than done, largely because technology advances at such a fast pace that properly modeling threats becomes significantly more challenging as new innovations appear. These innovations are rarely fully anticipated in the initial implementation phase; they emerge over time.

In this article, we review the recent discovery of a vulnerability affecting tens of thousands of traffic lights in the Netherlands, whose only remediation is to physically replace them: a costly project that the Dutch government estimates will take six years, until 2030.

What happened?

Almost all traffic light installations in the Netherlands can detect approaching road users and adjust accordingly. In other words, they are smart. These traffic lights can be influenced by a traffic signal priority system, used primarily to stop conflicting traffic and let emergency vehicles through. The system, known as KAR, has been used in the Netherlands and Belgium since 2005. It reduces response times and improves traffic safety.

Earlier this year, Alwin Peppels, a security engineer at the Dutch security firm Cyber Seals, launched an investigation and discovered a vulnerability that could allow malicious actors to change traffic lights at will.
According to the research, attackers can exploit this vulnerability using SDR (software-defined radio) equipment to send commands to the control boxes next to the traffic lights. The exploit specifically targets the emergency radio signal that ambulances and fire trucks use to force traffic lights to turn green so they can pass easily through intersections during emergencies.

In his experiment, Peppels built a similar system and "hacked" the traffic lights with the press of a button. Besides being easy to execute, the attack could be carried out from kilometers away and affect multiple intersections simultaneously, making the potential impact substantial. This has forced the Dutch government to take drastic and costly measures.

Other precedents

Back in 2020, Dutch ethical hackers ran another experiment, this time by reverse engineering apps designed for cyclists, and discovered they could cause traffic light delays in at least 10 cities simply by spoofing traffic data. The research was presented at DEF CON 2020, and a video of the talk is available for those who want to dive deeper into the details.

To appreciate the importance of this precedent, bear in mind that those who haven't visited Amsterdam or other parts of the Netherlands may not realize how impressive the country's cycling infrastructure is (those who have, as is my case, will understand immediately): over 35,000 kilometers of bike paths and more than 20 million bicycles, more than the population itself.

Mitigation

Returning to the vulnerability discovered by Peppels, the researcher shared his findings, details of which were not fully disclosed to prevent abuse, with the Dutch cybersecurity agency.
The solution, confirmed by the Ministry of Infrastructure and Water Management, is a plan to replace all the affected traffic lights. However, there are tens of thousands of them, so the process will take time: the current plan is to complete the replacement by 2030. In addition, emergency services and public transport must upgrade their vehicles to work with the new system.

The current traffic priority system (KAR) will be replaced by a new traffic signal system already designed in the Netherlands, called "Talking Traffic." The new signals will be connected to the internet via mobile networks instead of being controlled by radio, which makes them immune to this specific attack. However, the authorities acknowledge that new risks may emerge. As Peppels himself puts it: "An attack on this new system could, in theory, be much more damaging than my experiment, as you could potentially control all the traffic lights in an entire province. This new system will be used for ten, maybe twenty years, and we only have one chance to build it correctly and securely. We shouldn't sacrifice security for convenience."

Conclusions

The rise and democratization of software-defined radio, with widely accessible devices, pose a significant risk to older systems that are controlled or modifiable by radio signals, especially those designed without basic safeguards such as authentication (as we saw in our previous article), access control, or protection against replay attacks. For radio systems currently being designed and deployed, it is essential to follow the security-by-design paradigm, at least to maximize their lifespan under "safe" conditions.

Aside from security in the design and use of smart devices for traffic control and identification, citizen privacy must also be considered.
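Since the details of the KAR weakness were not disclosed, the Python sketch below does not model KAR. It only illustrates two of the generic safeguards mentioned in the conclusions, authentication and replay protection, for a radio command such as "turn green": each frame carries a monotonically increasing counter and an HMAC-SHA256 tag computed with a key shared by the vehicle and the controller. The key, frame layout, and command strings are hypothetical.

```python
import hashlib
import hmac
import struct

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag
HYPOTHETICAL_KEY = b"example-key-provisioned-to-vehicles-and-controllers"


def make_frame(counter: int, command: bytes, key: bytes = HYPOTHETICAL_KEY) -> bytes:
    """Frame = 8-byte big-endian counter || command || HMAC-SHA256(key, counter || command)."""
    body = struct.pack(">Q", counter) + command
    return body + hmac.new(key, body, hashlib.sha256).digest()


class Controller:
    """Roadside controller that accepts only authentic, fresh commands."""

    def __init__(self, key: bytes = HYPOTHETICAL_KEY) -> None:
        self.key = key
        self.last_counter = -1

    def accept(self, frame: bytes) -> bool:
        if len(frame) < 8 + TAG_LEN:
            return False                      # malformed frame
        body, tag = frame[:-TAG_LEN], frame[-TAG_LEN:]
        expected = hmac.new(self.key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return False                      # forged or tampered frame
        (counter,) = struct.unpack(">Q", body[:8])
        if counter <= self.last_counter:
            return False                      # replay of an old frame
        self.last_counter = counter
        return True
```

A frame recorded with an SDR and retransmitted later is rejected because its counter is no longer fresh, and a frame built without the key fails the tag check. A real design would also need per-sender counters and, above all, a way to provision and rotate keys across thousands of vehicles and controllers, which this sketch ignores.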
Recently, the Dutch Data Protection Authority expressed concerns about the privacy risks posed by these types of smart traffic systems. According to the authority, road authorities have not thought carefully about the privacy risks associated with these traffic lights, and it is not always clear with whom the data is shared or who is responsible for collecting and using it. Under the General Data Protection Regulation (GDPR), these matters must always be clarified before data collection begins.

As the saying goes, "Intelligence is the ability to adapt to change." We must apply this principle to the design and security of our "smart" devices.

October 23, 2024

POCSAG pagers: vulnerable by design

Pagers, or "beepers," are personal communication devices that were highly popular before the advent of mobile phones. They let users receive text messages or alerts anytime, anywhere, which revolutionized communication at the time. Today pagers are less common but still in use, despite the security issues that stem from their simplicity and lack of modern protection measures.

In this article, we analyze the inherent vulnerabilities in the design of certain protocols used by pagers, such as POCSAG. The use of these insecure protocols, combined with the emergence and democratization of SDR (Software Defined Radio) hardware, forms a dangerous cocktail that, in our view, should prompt a reconsideration of their suitability in critical sectors such as healthcare or industry.

How do pager networks work?

A pager network transmits messages over a radio frequency to devices that are always listening. Each pager is programmed to respond to one or more identifiers, known as capcodes or RICs (Radio Identification Codes), which determine which messages it should receive and how it should react. This allows both individual messaging and broadcasting, where a single message reaches every device that shares the same capcode.

However, these networks lack any form of authentication or encryption, so messages sent to pagers are transmitted in the clear. This creates a significant security risk: anyone with a basic radio transmitter can inject messages into the network simply by knowing the frequency and the capcode. While this lack of authentication can be convenient for quickly setting up personal pager networks, it becomes a threat in real-world deployments. For example, attackers could manipulate critical systems such as hospital pager networks or industrial control systems, potentially causing severe disruption.
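The addressing model just described, and the absence of any sender authentication, can be simulated in a few lines of Python. This is a hypothetical model of the receiver logic, not firmware code: a pager displays every well-formed transmission whose capcode it is programmed with, so a spoofed message is indistinguishable from a legitimate one.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class Transmission:
    capcode: int    # address the message is sent to
    function: int   # 0-3: selects the alert/display behaviour
    text: str
    # Note what is missing: no sender identity, no signature, no encryption.


@dataclass
class Pager:
    capcodes: Set[int]                              # addresses this pager answers to
    inbox: List[str] = field(default_factory=list)

    def receive(self, tx: Transmission) -> None:
        # The only check is the address; who transmitted the message is unknowable.
        if tx.capcode in self.capcodes:
            self.inbox.append(tx.text)


ward_pager = Pager({1234567})
team_pager = Pager({1234567, 2000001})              # also listens on a group capcode
traffic = [
    Transmission(1234567, 3, "Code blue, ward 4"),  # sent by the legitimate paging system
    Transmission(1234567, 3, "Code blue, ward 9"),  # injected by a hypothetical SDR transmitter
    Transmission(7654321, 3, "Not for us"),
]
for tx in traffic:
    ward_pager.receive(tx)
    team_pager.receive(tx)
```

Both pagers end up displaying the injected message alongside the genuine one, and nothing in the data they received allows them to tell the two apart.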
Types of messages sent to a pager

Pagers can receive several types of message. The main ones are:

Alert messages: Trigger simple signals such as vibrations or audible notifications, often used in emergency situations.

Numeric messages: Such as short codes agreed upon in advance by service users, or phone numbers.

Alphanumeric messages: The most complex type, allowing both text and numbers.

✅ This flexibility makes pager networks useful for sending anything from critical emergency alerts to more detailed instructions or notifications.

How is a POCSAG message composed?

Below are the main parameters required to send a POCSAG message:

Bitrate: The speed at which the message is transmitted. The most common bitrate for POCSAG transmissions is 1200 bps, although some networks use 512 or 2400 bps.

Phase: POCSAG transmissions have two possible phase settings, N and P, which determine how the signal is modulated. Most pagers work with either, but the setting must match the network's configuration for messages to be delivered correctly.

Type: Determines the message format: alert, numeric, or alphanumeric.

Function: The pager function code. Function codes can trigger different responses, such as a sound, a vibration, or a specific display mode.

Message: The actual body of the message to be transmitted.

✅ To transmit the message, the pager's capcode, its identifier, is also required. The capcode is usually printed on the back of the pager.

Is spoofing easy in POCSAG? What is needed?

It is surprisingly simple and inexpensive with basic hardware such as the popular HackRF, which can be made portable with a battery-powered add-on such as the PortaPack; the two are often sold together for around €200. This hardware can also be given new capabilities with the well-known Mayhem firmware.
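Part of what makes spoofing cheap is that the POCSAG frame format is fully public. As a sketch in Python, assuming the standard POCSAG codeword layout (a 1-bit flag, 18 address bits taken from the top of the 21-bit capcode, 2 function bits, 10 BCH(31,21) check bits, and a final even-parity bit, with the capcode's 3 lowest bits selecting one of the 8 frames in a batch instead of being transmitted), this is how the address codeword for a given capcode and function can be computed. The check bits only detect transmission errors: they involve no secret, so anyone can produce a codeword that a pager accepts as valid.

```python
BCH_POLY = 0x769             # generator x^10 + x^9 + x^8 + x^6 + x^5 + x^3 + 1
IDLE_CODEWORD = 0x7A89C197   # standard POCSAG idle codeword, useful as a test vector


def bch_remainder(value: int) -> int:
    """Remainder of a 31-bit polynomial (as an int) divided by the BCH generator."""
    for bit in range(30, 9, -1):
        if value & (1 << bit):
            value ^= BCH_POLY << (bit - 10)
    return value


def add_even_parity(word31: int) -> int:
    """Append a final bit so that the 32-bit codeword has an even number of ones."""
    word = word31 << 1
    return word | (bin(word).count("1") & 1)


def encode(data21: int) -> int:
    """Turn 21 data bits into a full 32-bit POCSAG codeword."""
    shifted = data21 << 10
    return add_even_parity(shifted | bch_remainder(shifted))


def address_codeword(capcode: int, function: int) -> int:
    if not 0 <= capcode < 1 << 21 or not 0 <= function <= 3:
        raise ValueError("capcode must fit in 21 bits and function in 2 bits")
    # Flag bit 0 marks an address codeword; the 3 low capcode bits pick the frame.
    return encode(((capcode >> 3) << 2) | function)


def frame_index(capcode: int) -> int:
    """Frame (0-7) within the batch in which this capcode's address is sent."""
    return capcode & 0b111


def is_valid(codeword: int) -> bool:
    """Everything a receiver can check: parity and BCH syndrome. No secret involved."""
    return bin(codeword).count("1") % 2 == 0 and bch_remainder(codeword >> 1) == 0
```

Re-encoding the data bits of the idle codeword and comparing against `0x7A89C197` is a quick way to check such an encoder.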
With a HackRF and a PortaPack (optionally running the Mayhem firmware), one has the equipment needed to intercept communications on a pager network and even inject messages, once the network frequency and the individual device identifiers have been obtained.

Vulnerability by design

Because POCSAG has neither authentication nor encryption, obtaining the capcodes needed for later impersonation attacks is trivial: in true Wireshark style, anyone can capture the frequency and capcodes by receiving and decoding the network's transmissions with any SDR device capable of listening to them.

Conclusions

In this article, we reviewed how certain messaging protocols used in pager networks, such as POCSAG, are inherently insecure because they lack authentication and encryption. The system treats any correctly formatted transmission as legitimate, which makes spoofing a message on the network very easy with readily available, affordable tools.

The design of these protocols, obsolete in terms of security, is a risk in itself. This underscores the need for stronger security measures, or for moving away from outdated technologies that are still widely used but no longer meet modern security requirements.

October 9, 2024

Cyber Security

Digital wallets: Maximum usability, maximum security?

Introduction

Most of us are now accustomed to using digital wallets on our mobile devices. We rarely carry physical bank cards in our daily lives or when travelling, at least in my case. If you are also a heavy user of mobile digital wallets, the research presented in this scientific article will interest you. We will analyze the vulnerabilities identified in the study and their impact, and explore the researchers' proposed solutions, drawing conclusions along the way.

Digital wallets

To start, let's define digital wallets and what they are for. They are a relatively new payment technology that provides a secure and convenient way to make contactless payments with smart devices such as mobile phones. The best-known applications include Google Pay (Android), Apple Pay (iOS), and PayPal, among others.

Through the app, we add our bank cards and authorize this form of payment; from then on, the card travels on our mobile device, and we can make payments or even withdraw cash from ATMs using the NFC technology built into our phones.

This undoubtedly improves convenience and usability, since the mobile phone is nowadays the first thing we make sure we have before leaving home. With digital wallets and the digitalization of national identification documents (such as the driver's license or the national ID card), it is almost the only thing we need, along with our keys.

However, the research we reference raises the question of whether the balance between usability, which is undeniable, and security is adequate. This leads to a series of important questions:

Authentication: How effective are the security measures imposed by the bank and the digital wallet application when a card is added to the wallet?
Authorization: Can an attacker pay with a stolen card through a digital wallet?
Authorization: Are the victim's actions, i.e., (a) blocking the card and (b) reporting it stolen and requesting a replacement, sufficient to prevent malicious payments with the stolen card?
Access control: How can an attacker bypass the access control restrictions on stolen cards?

With digital wallet payments, a new player enters the scenario: the digital wallet application and its integration with smart devices, together with the trust that banks place in them. This new digital payment ecosystem, which allows authority to be delegated in a decentralized way, is precisely what makes it susceptible to various attacks.

Summary table of findings from the research presented last week at the USENIX Security Symposium

Next, we will delve into the main weaknesses and vulnerabilities uncovered by the researchers, as summarized in the previous table.

There is too much reliance on digital wallets by banks, without checking ownership of cards.

Authentication: the process of adding cards to the digital wallet

According to the research, the main issue is that some banks do not enforce multi-factor authentication (MFA) and allow users to add a card to a wallet using knowledge-based authentication, in which attackers can use leaked or exposed personal data of the victim to impersonate the legitimate cardholder when adding the card to the digital wallet.

✅ For example, an attacker could add a stolen card to their digital wallet using the victim's postal code, billing address, or other personal details that can easily be found online or that a dark web vendor includes with the stolen card.

In many cases, the wallet user can choose which authentication mechanism to use from the options agreed between the bank and the digital wallet app. However, the most secure of the available options should always be the one applied.
Authorization: at the time of payment

At the time of payment, it is common, at least in my experience, to use biometrics to approve payments at a point-of-sale terminal, which, by design, is not entirely correct. It is strong authentication from a technical standpoint, but it does not prove what a physical card payment requires (possession of the card and our legitimate use of it, as with a classic PIN); instead, it proves possession of a smart device with a card available in its digital wallet, which is not the same thing.

Many banks allow the same card to be used on multiple devices. This is useful, for example, for users with more than one device or for parents who let their children use certain bank cards.

⚠️ However, this makes the attackers' job easier if they obtain a stolen card, since they can exploit the time between the theft and the reporting of the loss. If they manage to add the card to their digital wallet, they can then use it for future payments simply by owning a device, a condition they obviously meet.

Authorization: what happens to stolen cards?

An even more surprising finding of the research is the lack of rigor in critical processes, such as the handling of card blocking or theft reports by the victim. The researchers found that, even if the victim detects that their card has been stolen, blocking and replacing the card does not always work, and the attacker can keep using the card previously added to their wallet without (re)authentication.

The main issue is that banks assign digital wallets a PANid (Primary Account Number Identification) and a payment token when a card is added. When a card is replaced, some banks issue a new PANid and payment token to every wallet to which the card was added, assuming that all of those wallets are controlled by the legitimate owner. These tokens allow attackers to keep using their digital wallets, which are now authorized to make purchases with the reissued card.
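The difference between rebinding existing tokens and forcing wallets to re-authenticate can be shown with a toy model. The Python sketch below is purely illustrative: `ToyBank`, the PAN identifiers and the token format are hypothetical and do not reflect any bank's or wallet provider's real system. With `revoke_tokens_on_replacement=False` it mimics the behavior the researchers observed (every token silently follows the new card); with `True` it mimics the mitigation of invalidating tokens after a critical event such as card loss.

```python
# Toy model (hypothetical) of how a bank binds wallet payment tokens to cards.
from __future__ import annotations

import secrets
from dataclasses import dataclass, field


@dataclass
class ToyBank:
    # False mimics the reissuance behavior reported at some banks.
    revoke_tokens_on_replacement: bool
    tokens: dict[str, str] = field(default_factory=dict)   # payment token -> PAN id
    blocked: set[str] = field(default_factory=set)          # PAN ids of blocked cards

    def add_to_wallet(self, pan_id: str) -> str:
        """Issue a payment token to a wallet (after whatever check the bank applies)."""
        token = secrets.token_hex(8)
        self.tokens[token] = pan_id
        return token

    def replace_card(self, old_pan: str, new_pan: str) -> None:
        """Block the stolen card and issue a replacement."""
        self.blocked.add(old_pan)
        for token, pan in list(self.tokens.items()):
            if pan != old_pan:
                continue
            if self.revoke_tokens_on_replacement:
                del self.tokens[token]        # every wallet must re-authenticate
            else:
                self.tokens[token] = new_pan  # every wallet silently follows the new card

    def authorize(self, token: str) -> bool:
        """Approve a payment if the token maps to a card that is not blocked."""
        pan = self.tokens.get(token)
        return pan is not None and pan not in self.blocked
```

In the first mode, a token provisioned by an attacker before the theft was reported keeps working on the replacement card; in the second, the legitimate owner also has to re-add the card, which is the usability cost that banks are trading away.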
⚠️ This reissuance behavior is serious, and a clear example of excessive trust between the bank and the digital wallet: assuming that the cards in digital wallets are legitimate, banks associate the new card identifier with the same payment token already bound to the old card, allowing fraudulent use even after the victim has reported the card to the bank.

Access control: one-time vs. recurring payments

The last point highlighted in the research is the difference between bank policies on one-time payments and recurring payments. To minimize the impact on customers' services, such as a Netflix subscription, banks generally allow recurring payments even on blocked cards. However, it is solely the online store that decides whether a payment is labeled as recurring or one-time.

The verification of the recurring nature of a payment leaves much to be desired, as the researchers discovered empirically: they were able to pay for services that should obviously not be recurring, either because of the nature of the service itself, such as buying a gift card or renting a car, or because of the amount or frequency of the payments.

This lack of validation allows an attacker to use a stolen card for payments that would be blocked if they were labeled as one-time, either with the complicity of a fraudulent merchant or simply by seeking out merchants that label payments as recurring by default.

Mitigations

Let's review the measures the authors propose to address the deficiencies identified in their research.

Authentication: This can easily be prevented by enforcing MFA, for example by sending an SMS or push notification to the victim's phone, a challenge that few attackers could overcome, at least not passively.
Authorization: Once the digital wallet is authenticated and a payment token is issued, the bank uses that token indefinitely; it never expires.
This establishes unconditional trust in the wallet, which neither expires nor changes, even during critical events such as card loss, device loss, or card deletion. Banks should adopt a continuous authentication protocol: re-authenticate the digital wallet and refresh the payment token periodically, and at a minimum after critical events such as card loss.
Recurring payments: Today, the bank relies on the transaction type label assigned by the merchant to decide on the authorization mechanism. Instead, the bank should evaluate the transaction metadata (e.g., time, frequency, and type of service or product) and validate it against the transaction history to assess whether the transaction really is of the declared type.

The mobile payment system needs to be more secure to prevent fraud and abuse.

Conclusions

This study is based on US banks but is likely applicable to banks worldwide. The trust banks place in digital wallets is currently excessive. It seeks a smooth interaction with the end user, which is naturally a good thing, but critical processes such as adding a card to a wallet should be carefully reviewed and strengthened to ensure that the user legitimately owns the card, and to verify periodically that this remains the case over time.

Biometrics cannot guarantee strong authentication for digital wallet payments.

Using biometrics to make a payment gives the appearance of strong authentication, but in the case of digital wallets it does not verify the essential aspect of a payment: what it proves is that the user legitimately possesses a device, not anything actually related to the card being used. This design flaw is a security gap through which attackers can slip…

There seems to be a need to significantly improve the treatment of abuse cases in the secure development cycle of digital wallet applications and their integration with banks. As they say in Galicia: "Friends, yes, but the cow is worth what it's worth."

August 28, 2024

Cyber Security

DigiCert incident, or when a certificate revocation ends in a lawsuit

Introduction

We are already more than used to browsers warning us, ever more aggressively, when we are not browsing securely. This protection relies on TLS/SSL certificates: the communication between our browser and the server providing the information we are accessing is encrypted and protected by means of the public keys included in these TLS certificates.

Obtaining and managing these certificates is a game with very clear rules, defined jointly by the main Internet browsers (Chrome, Firefox, Safari, Edge) and the certification authorities in a forum known as the CA/Browser Forum (CABF).

Image automatically generated with AI

A technical incident recently detected by DigiCert, one of these Certificate Authorities (CAs), has required the urgent revocation and reissuance of a significant volume of certificates. Some customers, in particular some critical infrastructure operators, have not been happy about this urgency and have sued DigiCert for damages.

In this article, we will review what happened and the impact it has had, and draw some conclusions about the steps that will be taken to improve this process in the future.

What happened?

In late July, DigiCert warned that it would mass-revoke SSL/TLS certificates because of an error in how the company verified whether a customer owned or operated a domain, and required affected customers to reissue their certificates within 24 hours (the deadline was July 31).

DigiCert is one of the prominent certificate authorities (CAs) providing SSL/TLS certificates, including Domain Validated (DV), Organization Validated (OV), and Extended Validation (EV) certificates.

✅ These certificates are used to encrypt communication between a user and a website or application, increasing security against malicious network monitoring and MiTM (man-in-the-middle) attacks.
When issuing a certificate for a domain, the certificate authority must first perform Domain Control Validation (DCV) to confirm that the customer really controls the domain. One of the methods used is for the customer to publish a DNS CNAME record containing a random value supplied by the CA; the CA then performs a DNS lookup on the domain to check that the values match.

Let's take an example from DigiCert's own incident report. If a certificate is requested for foo.example.com, a valid DNS CNAME record can be added in three ways, one of them being:

1. _valueRandom.foo.example.com CNAME dcv.digicert.com

In this form of validation, the random value must carry an underscore prefix ('_'). The requirement is based on RFC 1034, which requires domain names to begin with an alphanumeric character: including the underscore means that the subdomain used for validation can never match a real domain. Omitting it is considered a security risk, because a real domain could collide with the subdomain used for verification.

After an internal investigation, DigiCert discovered that for approximately 5 years (since August 2019), its platform had not validated the presence of that prefix in the domain control check, nor was the prefix mentioned in its documentation. Even though customers did successfully prove control of their domains, and it is highly unlikely that this was abused in any way, this broke the CA/B Forum rules and triggered the urgent revocation process laid down in the "rules of the game":

4.9.1.1 Reasons for Revoking a Subscriber Certificate
[…]
With the exception of Short‐lived Subscriber Certificates, the CA SHALL revoke a Certificate within 24 hours and use the corresponding CRLReason (see Section 7.2.2) if one or more of the following occurs:
5. The CA obtains evidence that the validation of domain authorization or control for any Fully‐Qualified Domain Name or IP address in the Certificate should not be relied upon.

Incident impact

In this case, the company found that nearly 85,000 TLS certificates (approximately 0.4% of all DigiCert certificates) had been issued without the underscore while the bug that skipped this check was present in its platform.

Has this never happened before?

Several CAs have in the past overlooked trivial incidents like this one: flaws in validation procedures that occurred and were ignored, especially when the chances of them being actively exploited by a malicious actor were minuscule.

However, DigiCert's error came at a "hot" time, just as Google had withdrawn trust from two CAs in recent weeks: GlobalTrust and Entrust. It seems that DigiCert did not want to anger Google's security team, given the risk that distrust of its certificates would pose to all its customers. So DigiCert decided to follow the established procedure to the letter and revoke all the affected certificates issued while the bug was active on its platform.

When the legal departments come into play

DigiCert says the process went very well for the most part until it began hearing from critical service operators whose certificates could not be replaced within such an urgent window because of strict maintenance and uptime requirements.

Things got uglier when one of DigiCert's customers, US healthcare payment processor Alegeus Technologies, sued the company and obtained a temporary injunction preventing DigiCert from revoking its certificate.
DigiCert then contacted the CABF to resolve this delicate situation and agreed to extend the reissuance deadline to August 3 for certain affected critical infrastructure operators, with a longer deadline, yet to be set by the courts, for the case involving an active lawsuit.

Conclusions

We highlight two main points:

In its incident report, DigiCert has committed to releasing the code used for the domain validation check (scheduled for November 2024). This will allow the community to review the code and help ensure that this type of error is detected earlier, minimizing its impact.
It seems clear that the CABF "rules of the game" should include certain exceptions to 24-hour emergency certificate revocations for critical infrastructure operators, who cannot meet that window for obvious continuity reasons. It is one thing to urgently revoke the TLS certificate of an ordinary business, and quite another to urgently revoke the certificate of an emergency service such as 112, or of an emergency reporting service used by the petrochemical industry, for example.
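The check that went missing is small, which is part of why it slipped through for five years. The Python sketch below models only the CNAME form quoted from DigiCert's report (`_valueRandom.foo.example.com CNAME dcv.digicert.com`); the helper names are hypothetical, it is not DigiCert's code, and the DNS lookup itself is left out (the record is passed in as already-resolved strings).

```python
# Minimal sketch of the underscore-prefixed CNAME check (hypothetical helper, not DigiCert's code).

def normalize(name: str) -> str:
    """DNS names are case-insensitive; also ignore a trailing root dot."""
    return name.strip().lower().rstrip(".")


def is_valid_dcv_cname(record_name: str, record_target: str, fqdn: str,
                       random_value: str, expected_target: str) -> bool:
    """Accept only '_<random>.<fqdn> CNAME <expected_target>'."""
    expected_name = f"_{random_value.lower()}.{normalize(fqdn)}"
    return (normalize(record_name) == expected_name
            and normalize(record_target) == normalize(expected_target))


if __name__ == "__main__":
    ok = is_valid_dcv_cname("_valueRandom.foo.example.com", "dcv.digicert.com",
                            "foo.example.com", "valueRandom", "dcv.digicert.com")
    missing_prefix = is_valid_dcv_cname("valueRandom.foo.example.com", "dcv.digicert.com",
                                        "foo.example.com", "valueRandom", "dcv.digicert.com")
    print(ok, missing_prefix)   # True False
```

A validator that accepted the second record, without the underscore, is exactly the gap described in the incident report.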

August 21, 2024

Cyber Security

Microsoft Secure Future Initiative: Drum roll

Introduction

Many of our readers (at least those with a few gray hairs) will remember Bill Gates's famous 2002 email to all Microsoft employees, which prioritized security over any other feature within the Redmond-based technology giant. That email, entitled "Trustworthy Computing", was a paradigm shift: it prioritized secure development throughout the company and began to change the fairly widespread view among Microsoft users that its software contained many bugs and design problems that made it unstable.

More than 20 years later, Microsoft has once again been forced to send a similar message, this time through CEO Satya Nadella, following a series of high-profile incidents that have damaged Microsoft's reputation and led many cyber security experts worldwide to question its security culture and posture.

In this article, we will review the incidents that led to this new security drumbeat at Microsoft, how it may affect the maintenance of legacy systems (a policy deeply rooted in Microsoft's culture), and what we believe are the key points of this new statement.

What is the Microsoft SFI (Secure Future Initiative)?

Microsoft launched the Secure Future Initiative in November 2023 to prepare for the growing scale and impact of cyberattacks. SFI brings together all parts of Microsoft to advance cyber security protection across the company and its products.

An article published this May details the acceleration and expansion of SFI within the company, following recommendations from the US Cyber Safety Review Board (CSRB). The image below briefly summarizes this new turn of the screw.

Secure Future Initiative Summary. Source: Microsoft.

The SFI plan is based on three security principles:

Secure by design: Security comes first when designing any product or service.
Secure by default: Security protections are enabled and enforced by default, require no additional effort, and are not optional.
Secure operations: Security controls and monitoring will be continuously improved to address current and future threats.

Its impact is summed up in a powerful phrase that Nadella himself repeats in his email to his more than 200,000 employees:

We are making security our top priority at Microsoft, above anything else.

Response to recent attacks suffered by Microsoft

This acceleration of the SFI plan is clearly a response to several high-impact incidents Microsoft has suffered in the recent past:

2021: In early 2021, several attackers targeted Microsoft Exchange servers with 0-day exploits, allowing them to access email accounts and install malware on servers hosted by many companies.
2023: A group of Chinese attackers known as Storm-0558 gained access to US government emails by exploiting a flaw in the Microsoft cloud.
2024: The same attackers behind the SolarWinds incident, known as Midnight Blizzard, were able to spy on the email accounts of some members of Microsoft's senior leadership team and, in early 2024, even steal source code.

Legacy systems support vs. security

From the CEO's interesting email (which we recommend reading to anyone interested in delving deeper into this topic), we highlight a sentence that may lead to significant changes in Microsoft's culture and policy to date:

This will, in some cases, mean prioritizing security over other things we do, such as releasing new features or providing continuous support for legacy systems.

Microsoft's effort to support legacy systems is well known in the industry. Many of its competitors do not extend such courtesy, and it often hinders, or at least slows down, Microsoft's ability to deliver software. We may be seeing a shift in that direction.

How to ensure the SFI plan is implemented: the wallet?
Among the action items to ensure proper implementation of the new security-first approach, one sentence from Nadella catches the eye:

We will also foster accountability by basing part of the senior leadership team's compensation on our progress toward meeting our security plans and milestones.

In other words, beyond the pillars of the plan described in his email, Microsoft is making a strong bid to ensure its proper execution by tying part of its leadership's compensation to the progress and milestones of the SFI plan. We believe this will undoubtedly generate interest inside the company... "you can't play with your bread and butter".

Conclusions

Microsoft is clear that trust is a fundamental pillar for its customers, and with this new email to all its employees, it "refreshes" that importance and focus after Bill Gates's famous email at the beginning of the 21st century.

Trust is an unforgiving asset: like muscle, it is built ounce by ounce but lost very quickly if it is eroded or neglected.

That Microsoft has once again made security a top priority is great news for customers, as the move will drive competition among companies over which one is more secure. Will we soon see companies openly touting their security as a competitive advantage in their earnings presentations? Perhaps that is too much to dream...

May 22, 2024

Cyber Security

Massive attack detected after Automatic plugin vulnerability for WordPress

Introduction

WordPress is, by a wide margin, the leading content management system. Its figures are dazzling: it powers more than 43% of the world's websites, nearly 500 million sites. Given this market dominance, attackers are increasingly interested in finding vulnerabilities in the popular CMS.

Historically, the WordPress core has been relatively secure. It has a strong security team and good development lifecycle practices. So how do attackers proceed? Their answer is to look for the weakest link of the platform, in this case its extensibility.

WordPress plugins are components, often developed by third parties, that provide a given functionality: easier administration, an out-of-the-box newsletter, a rotating image gallery, etc. There are more than 70,000 WordPress plugins, and many end users rely on them to speed up building the website they want. Many plugins, in turn, are tremendously popular.

There are more than 70,000 WordPress plugins widely used to streamline website creation.

Are their development processes as secure as those of the WordPress core? The answer is that it depends on those third parties, and, as in every family, there is a bit of everything. This draws the interest of attackers, who scan plugins looking for their gateway to the WordPress bonanza. Attacks on such plugins have been discovered countless times, as in several recent examples, with varying impact depending on the plugin's popularity.

In this article, we will discuss a vulnerability found in March 2024 in the Automatic plugin that is being actively exploited.

What is the Automatic plugin like, and is it popular?

The Automatic WordPress plugin automatically publishes content from almost any website to WordPress. It can import from popular sites such as YouTube and X (formerly Twitter) using their APIs, or from almost any website using its scraping modules.
It can even generate content using OpenAI GPT. Automatic is a plugin developed by ValvePress with more than 38,000 paying customers.

Researchers at security firm Patchstack revealed last month that versions 3.92.0 and earlier of the plugin contained a vulnerability with a severity rating of 9.9 out of 10. The plugin's developer, ValvePress, released a patch, available in versions 3.92.1 and later, which anyone using this plugin should install immediately.

However, the release notes of the patched version do not explicitly mention the vulnerability fix, so this could be considered a silent patch. This is not considered good practice, because it does not convey the criticality of the update to end users.

What vulnerabilities have been found?

The vulnerability (CVE-2024-27956) is a SQL injection that could allow unauthenticated attackers to create administrator accounts and take control of a WordPress site.

This class of vulnerability stems from a web application failing to build its database queries safely. SQL syntax uses apostrophes to mark the beginning and end of a string. By inserting strings with carefully placed apostrophes into vulnerable fields on the website, attackers can execute specially crafted SQL statements that perform sensitive actions: returning sensitive data, granting administrative privileges, or, more generally, abusing the operation of the web application.

The picture for an attacker could not be better. We are talking about unauthenticated access: the attacker needs no credentials on the victim's WordPress site (not as an administrator, nor even as a content creator), and the flaw allows them to create administrator accounts, i.e., to become a superuser of the website.

This vulnerability in the Automatic plugin allows attackers to create administrator accounts and gain full control of the website without being an existing user or administrator.

Is it being actively exploited?
WordPress security firm WPScan published a post on the exploitation of this vulnerability, revealing that it has recorded over 5 million exploitation attempts since its disclosure. In summary, the exploitation process is as follows:

SQL injection: Attackers exploit the SQLi vulnerability in the plugin to execute unauthorized database queries.
Administrator user creation: With the ability to execute arbitrary SQL queries, attackers can create new administrator-level user accounts within WordPress.
Malware upload: Once an admin-level account is created, attackers can upload malicious files, both to host malware that victims will later download and, typically, shells or backdoors to maintain access.

Once a WordPress site is compromised, attackers often rename vulnerable files, for two main reasons:

To evade detection and maintain access, i.e., to gain persistence on the system and make it difficult for website owners or security tools to identify or block the problem.
To prevent other malicious actors from successfully exploiting the sites they have already compromised. A bit selfish, these attackers, aren't they?

Mitigations

Given the criticality of this threat, website owners should take immediate steps to protect their WordPress sites:

Plugin updates: Make sure the Automatic plugin is updated to the latest version.
User account review: Review and audit the user accounts within WordPress, removing any unauthorized or suspicious admin users.
Security monitoring: Use robust security monitoring tools and services to detect and respond to malicious activity on your website.

If you own a WordPress site that uses the Automatic plugin, you should review the indicators of compromise shared in the WPScan article, whether or not you have any hint of suspicion.
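For plugin developers, the root cause described earlier (user input pasted into the text of an SQL statement) has a well-known fix: parameterized queries, which in WordPress code means building queries with `$wpdb->prepare()`. The Python sketch below illustrates the class of bug with the standard `sqlite3` module and an in-memory table; it is not the Automatic plugin's code, and the table and function names are hypothetical.

```python
# Illustration of the SQL injection class (not the Automatic plugin's code), using sqlite3.
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (login TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "administrator"), ("bob", "subscriber")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, login: str) -> list:
    # VULNERABLE: user input is pasted into the SQL text, so an apostrophe in
    # `login` closes the string literal and the rest is parsed as SQL.
    query = "SELECT login, role FROM users WHERE login = '" + login + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, login: str) -> list:
    # Parameterized query: the driver passes `login` as a value, never as SQL.
    return conn.execute("SELECT login, role FROM users WHERE login = ?", (login,)).fetchall()


if __name__ == "__main__":
    db = make_demo_db()
    payload = "nobody' OR '1'='1"
    print(find_user_unsafe(db, payload))  # every row: the WHERE clause is always true
    print(find_user_safe(db, payload))    # []: no user is literally named like the payload
```

The same apostrophe trick that here only widens a `SELECT` is what, in a vulnerable plugin, lets an attacker reach queries that insert an administrator account.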
Conclusions

WordPress's market position for website creation will continue to attract the attention of cybercriminals, now and in the future, so attacks like these will keep happening frequently. Here are some basic security recommendations if you own a website managed with WordPress:

First of all, think about whether you really need each plugin, carefully weighing the effort of keeping it up to date, which is crucial for security, against the ease of use or functionality it provides.
Only install actively maintained plugins, and review their use periodically to remove those that are no longer needed.
Depending on the criticality of the website and the data it hosts, consider specialized cloud-based continuous security monitoring tools, such as those offered by several security vendors specializing in WordPress.

WordPress is probably one of the best and most accessible options for creating and managing websites, but its security needs care, as with any other system exposed to the web.

May 15, 2024

Cyber Security

Malware distributed during fraudulent job interviews

Introduction

A social engineering campaign called DEV#POPPER has recently been detected using fake npm packages, under the guise of job offers, to trick developers into downloading a Python backdoor.

The attack chain starts with fraudulent interviews in which developers are asked to download malware disguised as legitimate software. In this case, it is a ZIP archive hosted on GitHub that contains a seemingly harmless npm module but actually hosts a malicious JavaScript file called BeaverTail and a Python backdoor called InvisibleFerret.

According to an initial analysis by Securonix, this activity has been linked to North Korean threat actors, who continue to refine their attack arsenal, constantly updating their techniques and operational capabilities.

In this post, we will review the attack chain and its capabilities at a high level, ending with some recommendations and conclusions.

Are you a developer looking for a job? We have good news and bad news

In a high-demand field such as software development, including technical tests in the selection process to evaluate candidates' skills has become established practice. Leaving aside whether this approach is appropriate (in my view, it is not a very effective way to demonstrate a potential new employee's technical ability), the reality is that it is a fairly widespread practice and that, from the attacker's point of view, it puts the candidate in an ideal state of stress.

The candidate is likely to act less cautiously and more complacently, since an important step in their professional life is at stake.

Conducting technical tests in job interviews can create a stressful environment for candidates, who may act recklessly and complacently as they feel their professional future is at stake.
Urgency and trust in a third party, so as not to lose the opportunity on offer, are classic psychological manipulation mechanisms in social engineering campaigns, which target deep-rooted human vulnerabilities such as trust, fear, or the simple desire to be helpful.

Attack chain

The following is a simplified image of the attack chain:

Image taken from Palo Alto's analysis.

In short, attackers set up fake job interviews for developers, posing as legitimate interviewers. During these fraudulent interviews, developers are often asked to perform tasks that involve downloading and running software from sources that appear legitimate, such as GitHub. The software contains a malicious Node.js payload that, once executed, compromises the developer's system.

In the case analyzed by Securonix, the attack trigger is hidden in a JavaScript file called ImageDetails.js, in the backend of the candidate's supposed practical exercise. It is apparently simple code that uses Mongoose, a well-known MongoDB object modeling package for Node.js. However, hidden in an abnormally long line, after a comment, is the initial payload, obfuscated with base64 encoding, variable substitution, and other common techniques.

In the following image, we can see an animation of how the initial code is hidden:

When the victim then installs this npm (Node Package Manager) package, the attack is triggered. It consists of four phases, detailed in the Securonix analysis, which we invite the more technically curious to read.

Attack capabilities

The attack consists of two main components: the installation of an information-stealing malware and of a backdoor on the victim's machine.

As an information stealer, it collects data such as the following from the victim's machine and sends it to the attacker's server:

Cryptocurrency wallets and payment method information found in the victim's browser.
Data for fingerprinting:
Name of the victim's host.
Type and version of the operating system. Login user name. Etc.

The second part of the attack is the most damaging. It installs a remote access trojan (RAT), which gives the attacker the following capabilities once the exploit has been launched:

Persistence, network connection and session creation: it establishes persistent TCP connections, including structuring and sending data in JSON format.

File system access: it contains functions to traverse directories and filter files based on specific extensions and excluded directories. It can also locate and potentially exfiltrate files that do not meet certain criteria, such as file size and extension.

Remote command execution: the script contains several functions for executing system shell commands and scripts, including browsing the file system. It can also download the popular AnyDesk client to gain additional control over the victim's machine.

Exfiltration of information: the Python script can send files to a remote FTP server, filtering them by extension. Other functions help automate this process by collecting data from various user directories, such as Documents and Downloads.

Clipboard and keystroke logging: the script can monitor and exfiltrate clipboard contents and log keystrokes.

Conclusions

Social engineering is, and will continue to be, the main attack vector for breaching security from a human perspective. Uncomfortable, urgent, or stressful situations, such as a job interview, are exactly what cybercriminals look for to maximize the likelihood of a successful attack.
This method is effective because it exploits the developer's professional commitment and trust in the hiring process. Refusing to perform the actions requested by the interviewer could jeopardize the job opportunity. Attackers tailor their approach to appear as credible as possible, often by mimicking real companies and replicating real interview processes. This appearance of professionalism and legitimacy lulls the interviewee into a false sense of security, making it easier to deploy malware without arousing suspicion. This type of attack is not new: Palo Alto Unit 42 analyzed earlier attacks carried out during job interviews in late 2023 and even in 2022.

The protection mechanisms against these threats could be summarized as follows:

Work computers should not be used for personal activities, such as job interviews.

In general, no one should install unknown files from unverified sources on their computers.

GitHub accounts that contain a single repository with few or no updates should be distrusted. Even so, it is becoming increasingly difficult to tell fraudulent accounts apart, since attackers can create networks of "controlled" users to give themselves apparent activity.

Perhaps the most important and effective measure in these cases is to take the time to verify that the companies offering job interviews exist and are legitimate. It is also worth confirming that the interviewers really work for the companies they claim to represent.
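The hiding technique described in the Securonix analysis suggests a quick check a candidate could run on an exercise before executing it. In that case, the payload sat in an abnormally long line, placed after a comment, and was obfuscated with base64. The following Python snippet is a minimal sketch under stated assumptions. It is not a substitute for antivirus software or for running unknown code in an isolated environment. The thresholds `MAX_LINE_LENGTH` and `MIN_BASE64_RUN` and the function names `scan_source` and `scan_tree` are hypothetical, chosen for illustration rather than taken from the analysis.

```python
import base64
import re
from pathlib import Path

# Hypothetical thresholds, chosen for illustration only; tune them for your code.
MAX_LINE_LENGTH = 500  # hand-written JavaScript lines are usually far shorter
MIN_BASE64_RUN = 80    # shortest run of base64 characters worth reporting

# A run of base64 alphabet characters, optionally followed by '=' padding.
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{%d,}={0,2}" % MIN_BASE64_RUN)


def looks_like_base64(candidate: str) -> bool:
    """Return True if the string decodes cleanly as standard base64."""
    if len(candidate) % 4 != 0:
        return False
    try:
        base64.b64decode(candidate, validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    return True


def scan_source(text: str) -> list[tuple[int, str]]:
    """Return (line_number, reason) pairs for suspicious lines of a source file."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        if len(line) > MAX_LINE_LENGTH:
            findings.append((number, f"line is {len(line)} characters long"))
        for match in BASE64_RUN.finditer(line):
            if looks_like_base64(match.group()):
                findings.append((number, "contains a decodable base64 run"))
                break  # one base64 finding per line is enough
    return findings


def scan_tree(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan every .js file under root (minified files will also be reported)."""
    results = {}
    for path in Path(root).rglob("*.js"):
        text = path.read_text(encoding="utf-8", errors="replace")
        findings = scan_source(text)
        if findings:
            results[str(path)] = findings
    return results
```

For example, `scan_tree("./interview-exercise")` (a hypothetical folder) would return the files and line numbers worth inspecting by hand before running `npm install`. The check looks for both long lines and base64 runs because the analysis mentions both. An empty result proves nothing, though: a payload could be split across lines or encoded differently, so the recommendations above still apply.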

May 8, 2024

Cyber Security

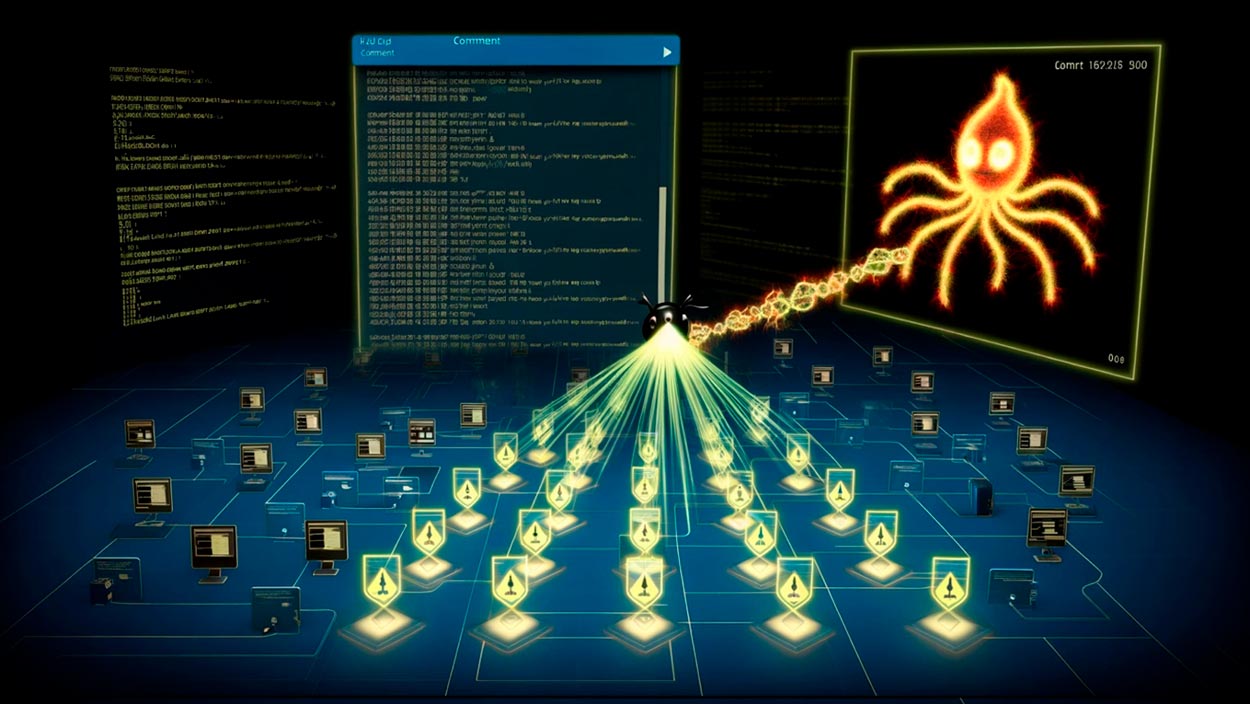

Malware distribution: Files in GitHub comments

Cybercriminals are always looking for new ways to distribute malware, and the more legitimate and innocuous those ways look, the better. In this article we look at the latest chapter in this endless saga: the abuse of GitHub comments, an attack recently discovered by researchers at security firm McAfee.

What happened?

McAfee researchers have published a technical report on the distribution of the Redline Infostealer malware. From our point of view, the interesting part of the report is the attack chain. It abuses file uploads in comments on GitHub issues of Microsoft projects, for example, the well-known C++ library manager vcpkg. This does not mean that a Microsoft repository had been distributing malware since February. Rather, the legitimate repository was abused to host files that are not part of vcpkg but were uploaded as part of a comment left on a commit or issue. This allowed the attackers to make the distribution URL look completely legitimate, even though it served malware.

How does this distribution mechanism work?

McAfee published this distribution scheme for the stealer they were analyzing:

Image extracted from the McAfee study on the Redline InfoStealer distribution.

In the initial part of the attack chain, Microsoft's vcpkg GitHub repository is used as the initial URL. This is possible because a GitHub user who leaves a comment can attach a file (compressed files, documents, etc.). The file is uploaded to GitHub's CDN (Content Delivery Network) and associated with the related project through a unique URL in the following format:

https://www[.]github[.]com/{project_owner}/{repository_name}/files/{file_id}/{file_name}

This abuse is possible due to a potential bug or poor design decision by GitHub.
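The attachment URL format shown above can also be checked mechanically. The following Python snippet is a minimal sketch that assumes GitHub's usual path layouts: `/files/` for comment attachments, as described in the article, plus `/releases/download/` for release assets and `/archive/` for source archives. GitHub may change these layouts, and the names `classify_github_url` and `GitHubLink` are hypothetical. The snippet also undoes the `[.]` defanging used in threat reports.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit

# Hosts treated as GitHub web URLs (assumption; extend as needed).
GITHUB_HOSTS = {"github.com", "www.github.com"}


@dataclass
class GitHubLink:
    owner: str
    repo: str
    kind: str  # "comment_attachment", "release_asset", "source_archive" or "other"


def refang(url: str) -> str:
    """Undo the '[.]' defanging commonly used in threat reports."""
    return url.replace("[.]", ".")


def classify_github_url(url: str) -> Optional[GitHubLink]:
    """Classify a GitHub URL by its path layout; return None for other URLs.

    The path layouts below are assumptions based on GitHub's usual URL
    structure and may change over time.
    """
    parts = urlsplit(refang(url))
    if (parts.hostname or "") not in GITHUB_HOSTS:  # hostname is lowercased
        return None
    segments = [segment for segment in parts.path.split("/") if segment]
    if len(segments) < 2:
        return None
    owner, repo, rest = segments[0], segments[1], segments[2:]
    if len(rest) >= 3 and rest[0] == "files":
        # {owner}/{repo}/files/{file_id}/{file_name}: uploaded through a comment
        kind = "comment_attachment"
    elif len(rest) >= 4 and rest[:2] == ["releases", "download"]:
        # {owner}/{repo}/releases/download/{tag}/{asset}: published release asset
        kind = "release_asset"
    elif rest and rest[0] == "archive":
        # {owner}/{repo}/archive/...: source code archive generated by GitHub
        kind = "source_archive"
    else:
        kind = "other"
    return GitHubLink(owner=owner, repo=repo, kind=kind)
```

The key point is that `owner` and `repo` only identify the project the comment was attached to, not who uploaded the file. A `comment_attachment` link under a well-known company's repository therefore deserves the same suspicion as any unknown download.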
The problem is in when GitHub generates the download link. Instead of generating the URL once a comment is posted, GitHub generates it as soon as a file is added to an unsaved comment. This allows threat actors to attach their malware to any repository without its owners knowing. Even if the attacker decides not to publish the comment, or deletes it after publishing, the files are not removed from GitHub's CDN and the download URLs keep working.

Impact

The file URL contains the name of the repository in which the comment was created, and almost all software companies use GitHub. As a result, this flaw allows cybercriminals to craft extraordinarily robust, trustworthy-looking malicious URLs. Although the detected attack focused on Microsoft code repositories, the attack surface is actually very wide. It covers any public code repository hosted on GitHub, or even on GitLab, which has been shown to have made the same design decision. Let's review three examples of potentially relevant uses of this attack technique:

Impersonation of security tools: an attacker could upload malicious versions of popular security patches or tools to repositories that host cybersecurity software. For example, they could upload a trojanized version of an update file for a popular antivirus tool, tricking users into downloading and executing malware under the guise of improved security.

Plugins for IDEs and development tools: in repositories that store development tools or plugins, attackers could upload malicious updates or extensions. This could be particularly effective in repositories for IDEs such as Visual Studio Code or for popular development frameworks, where additional files could be presented as performance improvements or new features.

Exploitation of firmware and hardware projects: in repositories dealing with firmware or hardware drivers, uploading compromised firmware files or driver updates could lead to direct manipulation of physical devices.
Users downloading these updates could unknowingly install firmware that alters device behavior or allows remote access.

Mitigation

Unfortunately, even if a company learns that its repositories are being abused to distribute malware, there is no setting to manage the files attached to its projects. Once abuse is detected, an organization can contact GitHub to remove each such file individually, but clearly this mitigation does not scale. Another way to keep a GitHub account from being abused in this way, and from having its reputation undermined, is to disable comments. GitHub allows you to temporarily disable comments for up to six months at a time. However, restricting comments can significantly affect a project's development, since users will be unable to report bugs or make suggestions.

Conclusions

Malware distributors will keep creatively searching for trusted places to host the assets that launch their attack chains. This is a reality today, as you read this, and will still be one 5 or 10 years from now. It is what is called an "evergreen" field, where attackers will always find fish in the sea. In this article we have reviewed how a "slight" design flaw in file uploads on the GitHub code management platform can produce a significant flow of apparently legitimate URLs for malware distribution. We highlight the particular relevance of this new attack vector for firmware and hardware projects, IDEs and their add-ons, and security tool repositories.

🧠 Mental note: until GitHub provides an effective mitigation, which will undoubtedly come, be especially wary of GitHub URLs that are not part of official releases or downloadable source code.

As a final curiosity, creativity is not only found on the attackers' side.
Some "legitimate" uses of this ability to upload files to GitHub's CDN before publishing a comment have also been spotted. One example is storing images for a blog, as seen in the following tweet:

Tweet with reference to the use of GitHub's CDN for blog image storage.

However, sooner or later the platform will address this behavior, so it is not a long-term alternative.

April 30, 2024

AI & Data

Stanford AI Index: Will LLMs run out of training data?