Stanford AI Index: Will LLMs run out of training data?

Introduction

The prestigious American university Stanford has recently published its study on the current state of Artificial Intelligence from multiple perspectives: research, technical, sectorial and an analysis of the perception of this technology by the general public.

This is the seventh edition of this study that continues to be a reference and a more than interesting reading for all of us who are involved in the field of Artificial Intelligence in one way or another... good, (and long) reading for the weekend.

Imagen generada automáticamente con IA

In this article we will review the main highlights of the study and explore the possible limitation of the collapse of LLM models by the use of synthetic data, a particular issue that we have already discussed in the conclusions of our article on data poisoning included in the series on attacks on Artificial Intelligence systems.

Highlights of the Stanford study

In this section we review the main points of the study and provide our views on some of them.

- AI outperforms humans in some tasks, but not all. AI has outperformed humans in several benchmark fields: automatic image classification, visual reasoning, and English comprehension to name a few.

However, it lags behind in more complex tasks such as advanced-level mathematics, common-sense reasoning (the least common of the senses), and planning. - Industry continues to dominate cutting-edge AI research. In 2023, industry produced 51 notable machine learning models, while academia contributed only 15. There were also 21 notable models resulting from industry-academia collaborations in 2023.

- The cost of training next-generation models keeps rising. OpenAI's GPT-4, for example, is estimated to have cost approximately $80 million in computation alone to train, while for Google's recent Gemini Ultra model the computational bill is estimated to be in the region of $200 million. Here we should not only think of the direct cost but also of its environmental impact, as is the case with cryptocurrencies. In this case it is true that it is an initiative that is more centralized in large technology corporations, but we are convinced that in the medium to long term this will be an important topic of debate.

- The United States leads China and the EU as the main source of leading AI models. In 2023, 61 notable AI models originated from U.S.-based institutions, outpacing the EU's 21 and China's 15.

- Investment in generative AI soars. Despite a decline in total private investment in AI last year, funding for generative AI skyrocketed, nearly octupling from 2022 to reach $25.2 billion. Major players in the generative AI space, including OpenAI, Anthropic, Hugging Face and Inflection, reported substantial funding rounds.

- Robust and standardized evaluations from the perspective of responsible and ethical LLM AI are clearly insufficient.

New research reveals a significant lack of standardization in responsible AI reporting. Major developers, including OpenAI, Google, and Anthropic, primarily test their models against different responsible AI benchmarks. This practice complicates efforts to systematically compare the risks and limitations of leading AI models. - The data are clear: AI makes workers more productive and leads to higher quality work. In 2023, several studies evaluated the impact of AI on the work environment, suggesting that AI enables faster task completion and improved output quality. These studies also demonstrated the potential of AI to close the skills gap between low-skilled and high-skilled workers or the process of onboarding to an organization. However, other studies caution that the use of AI requires proper oversight may not prove counterproductive.

- Scientific progress is further accelerated, thanks to AI. In 2022, AI began to advance scientific discovery. However, 2023 saw the launch of even more significant science-related AI applications, from AlphaDev, which makes algorithmic classification more efficient, to GNoME, which facilitates the materials discovery process.

- The number of AI regulations is increasing dramatically. In Europe with the well-known Artificial Intelligence Act, focusing on the US, the number of AI-related regulations has increased significantly in the last year and in the last five years. In 2023, there were 25 AI-related regulations, up from just one in 2016. Last year alone, the total number of AI-related regulations grew by 56.3%.

- People around the world are more aware of the potential impact of AI. An Ipsos survey shows that, in the last year, the proportion of those who think AI will drastically affect their lives in the next three to five years has increased from 60% to 66%. In addition, 52% express nervousness toward AI products and services, marking a 13-percentage point increase since2022.

Will LLMs run out of training data?

The main improvements of Large Language Models (LLM) have so far been achieved by brute force. By this we mean the ingestion, during the training phase of the foundational models, of an increasing amount of data as the main vector of progress.

Still, the question is,

Is this sustainable over time? Based on the predictions of experts in the field the answer is, in a nutshell, no.

In research by Epoch historical and computational power projections have been generated to try to estimate when AI researchers might expect to run out of data.

The historical projections are based on observed growth rates in the data sizes used to train foundation models. Computational projections adjust the historical growth rate based on projections of computational availability.

✅ Researchers estimate, for instance, that computer scientists could exhaust the stock of high-quality linguistic data by 2026, exhaust low-quality linguistic data in two decades, and consume image data for a range between the late 2030s to mid-2040s.

There are two alternative ways to alleviate this limitation in the improvement of models:

- Synthetic data generation: Where a Generative Artificial Intelligence system creates new data that is then used to train other foundational models.

- The improvement of models through a paradigm that does not involve further consumption of training data but rather refining their architecture or capabilities through other avenues of improvement.

There are many scientific publications today focused on the generation of synthetic data by Generative AI systems, especially relevant in environments or sectors where the existence of data is scarce or difficult to access due to confidentiality or privacy issues.

✅ Let's think, for example, in the field of health or education: Synthetic data generation can be an efficient and ethical solution to improve LLM models without the need to use real data that may compromise the privacy or security of individuals or organizations.

However, this alternative requires careful design and evaluation to ensure that the data created are plausible, relevant, and diverse, and that they do not introduce bias or distortion into the models that use them.

Collapse of the model

Automatically generated image with AI

Automatically generated image with AI

Apart from the need to advance and investigate mechanisms for generating synthetic data that ensure a design that is similar to those that occur naturally in a real, unbiased environment, there is a second component or risk known as the phenomenon of model collapse, which we will explain in a little more detail.

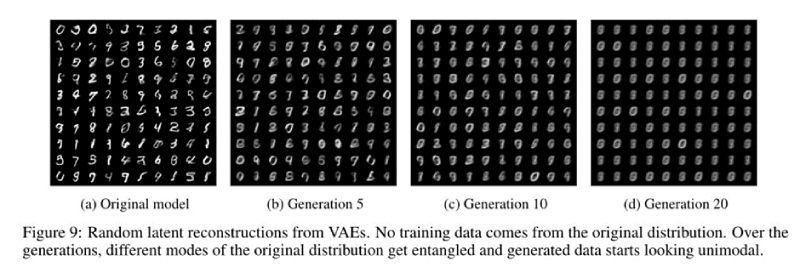

Recent studies, such as that of a team of British and Canadian researchers, detail that as more and more synthetic data is used, models trained in this way lose the ability to remember the true distribution of the data and produce an output with an increasingly limited distribution.

In statistical terms, as the number of synthetic generations increases, the tails of the distributions disappear, and the generation density shifts toward the mean. This pattern means that, over time, the generations of models trained predominantly on synthetic data become less varied and are not as widely distributed.... as can be seen in the image below:

Image extracted from a study by a team of British and Canadian researchers on the phenomenon of model collapse.

Image extracted from a study by a team of British and Canadian researchers on the phenomenon of model collapse.

Conclusions

- Stanford's study on the state of AI offers a multidimensional view of the current state of artificial intelligence, from research to public perception, an interesting read that can be consumed in chapters, whichever ones the reader finds most interesting for easier digestion.

- The data scarcity problem for LLMs: Large language models (LLMs) face the challenge of improving their performance without relying on an increasing consumption of data, which is not sustainable over time.

- Synthetic data generation as a potential solution and its risks: One of the alternatives to overcome the data limitation is synthetic data generation using generative AI systems, which can create plausible, relevant, and diverse data to train other models. However, this option has risks such as model collapse or the introduction of biases.

Finally, a similarity with respect to the problem of model collapse: inbreeding in human collectives.

As the data ingested by an LLM model comes from another LLM model, the marginal gain on the new data decreases until it becomes a problem rather than a benefit in terms of performance.

We all know the dangers of inbreeding and it seems that Artificial Intelligence is not exempt from this from a data point of view.

Will we be able to generate data that is diverse and similar to what would be produced naturally at the speed required by the advancement of artificial intelligence?

Hybrid Cloud

Hybrid Cloud Cyber Security & NaaS

Cyber Security & NaaS AI & Data

AI & Data IoT & Connectivity

IoT & Connectivity Business Applications

Business Applications Intelligent Workplace

Intelligent Workplace Consulting & Professional Services

Consulting & Professional Services Small Medium Enterprise

Small Medium Enterprise Health and Social Care

Health and Social Care Industry

Industry Retail

Retail Tourism and Leisure

Tourism and Leisure Transport & Logistics

Transport & Logistics Energy & Utilities

Energy & Utilities Banking and Finance

Banking and Finance Smart Cities

Smart Cities Public Sector

Public Sector