AI Risks: a comprehensive look at Artificial Intelligence incident management and security

AI is rapidly becoming an essential part of business operations, with significant potential to transform multiple industries. However, alongside its promises of efficiency, agility, and innovation, AI also introduces risks that companies must actively consider.

These risks can range from algorithmic failures to AI-powered cyberattacks, making the landscape of potential AI-related incidents broad and unpredictable.

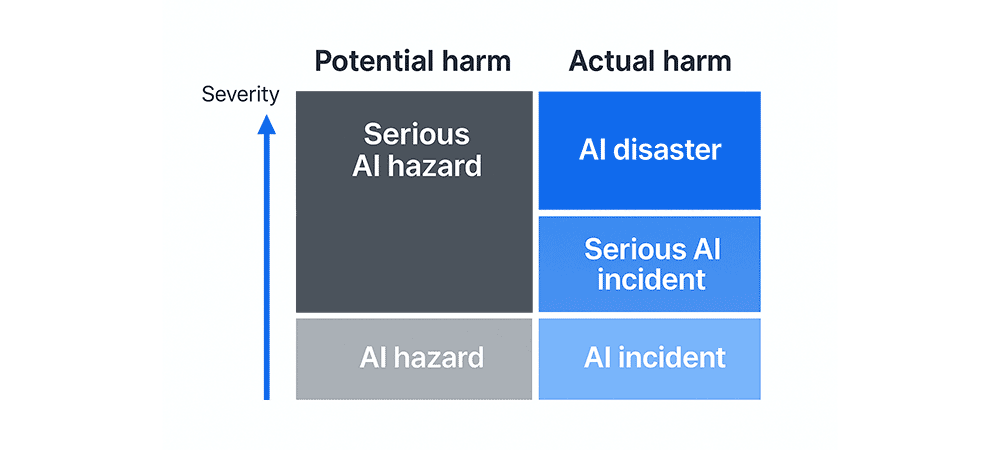

AI Incident classification and definitions

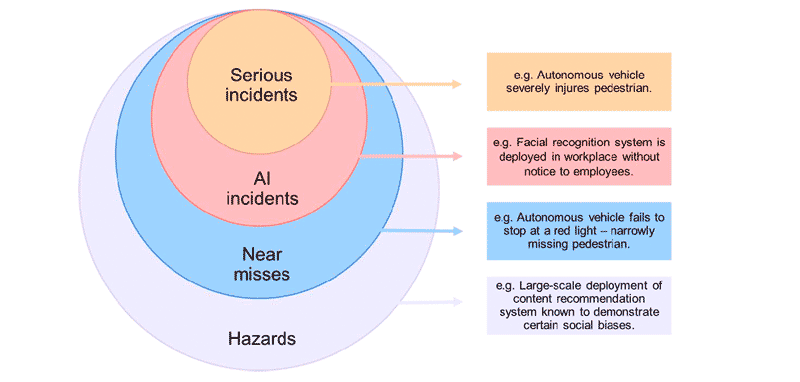

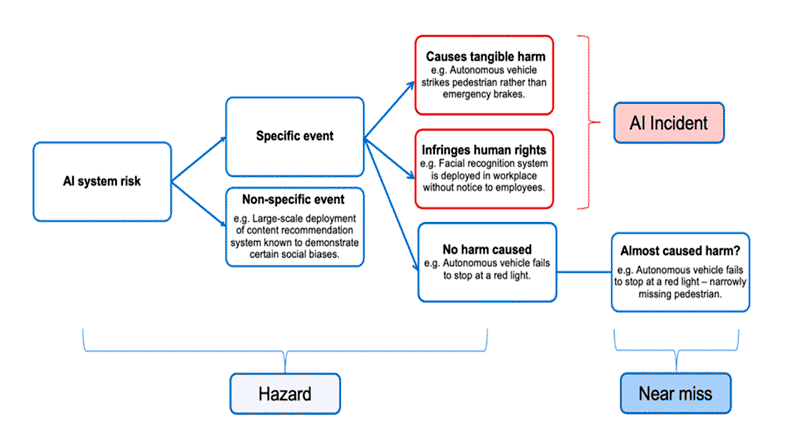

According to the OECD, an AI Incident is defined as an event, circumstance, or series of events in which the development, use, or malfunction of an AI system directly or indirectly causes actual harm—such as injuries to individuals, disruptions to critical infrastructure, violations of human rights, labor or intellectual property laws, or damage to property, communities, or the environment.

This definition aligns with the European Union's Artificial Intelligence Act under the category of a Serious Incident.

- By contrast, an AI Hazard refers to an event where an AI system has the potential to cause harm but hasn’t yet done so. If left unmanaged, such a hazard could evolve into a full-blown incident with serious consequences, and might also qualify as a Serious AI Hazard.

The real danger arises when a risk materializes into tangible harm—to people, property, or the environment—thus constituting an actual incident.

- An AI Disaster is a severe AI incident that disrupts the functioning of a community or society to the extent that it challenges or overwhelms its capacity to respond using internal resources. The effects of an AI disaster can be immediate and localized or widespread and long-lasting.

- A Near Miss is an event in which an AI system almost caused harm, but the damage was averted due to circumstantial factors rather than built-in safety measures.
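The four categories above can be read as a small decision procedure. The following Python sketch is one illustrative way to encode it. The class names, field names, and the order of the checks are assumptions made for this example and are not part of the OECD definitions.

```python
from dataclasses import dataclass
from enum import Enum


class EventLevel(Enum):
    HAZARD = "AI hazard"
    NEAR_MISS = "near miss"
    INCIDENT = "AI incident"
    DISASTER = "AI disaster"


@dataclass
class AIEvent:
    # Hypothetical fields chosen for illustration only.
    harm_occurred: bool                          # actual harm to people, property, or environment
    averted_by_circumstance: bool = False        # harm was imminent but avoided by chance
    overwhelms_community_response: bool = False  # harm exceeds the community's capacity to respond


def classify_event(event: AIEvent) -> EventLevel:
    """Map an event to one of the four categories described above."""
    if event.harm_occurred:
        # A disaster is a severe incident, so it is checked first.
        if event.overwhelms_community_response:
            return EventLevel.DISASTER
        return EventLevel.INCIDENT
    if event.averted_by_circumstance:
        return EventLevel.NEAR_MISS
    # No harm yet, but the potential for harm remains.
    return EventLevel.HAZARD
```

In practice the boundaries are a matter of judgment and evidence, so a function like this would at most support a human reviewer's triage rather than replace it.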

Source: OECD. AI Incident Concepts by Level.

Source: OECD. Key Differences Between Incidents, Hazards, and Near Misses.

To illustrate these concepts, I draw on my participation in controlled testing for a project with global impact on the application of AI in clinical and medical diagnostics.

An AI incident occurs when an AI system malfunction leads to actual harm, such as disruptions to critical infrastructure or violations of rights.

An example from the healthcare sector

A renowned hospital at the forefront of scientific and technological advances in healthcare sought to implement an AI-based diagnostic system to assist physicians in proactively identifying diseases through medical image analysis.

This system was trained on large datasets to detect clinical and pathological conditions such as cancer, fractures, and infections. Throughout the process, we prioritized continuous evaluation of risks and potential failures associated with AI-related incidents.

Following my involvement in the project, I conducted an in-depth investigation after a Serious Incident. The incident involved an erroneous diagnosis that resulted in an incorrect low-risk prognosis for a patient who was, in fact, suffering from an aggressive disease.

The implementation of AI systems in healthcare must be accompanied by robust safety measures and incident management protocols to protect patients and uphold the integrity of healthcare operations.

This situation could have led to a critical delay in treatment, potentially worsening the patient's condition and causing irreversible harm. In evaluating the scenario, we always consider the standard diagnostic outcomes and processes without AI involvement for comparison.

Following this, we officially identified the case as an AI Incident. Root cause analysis revealed that a software update introduced an error into the algorithm, which increased the rate of false negatives in detecting the condition. This meant early-stage patients could be discharged without further testing, delaying essential treatment.
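A regression of this kind can, in principle, be caught by comparing false-negative rates before and after an update on the same labelled validation set. The sketch below is a hypothetical illustration and not the check used in the project. The function names and the 0.02 tolerance are assumptions.

```python
def false_negative_rate(y_true, y_pred):
    """Share of truly positive cases (label 1) that the model predicted as negative (0)."""
    positives = [(t, p) for t, p in zip(y_true, y_pred) if t == 1]
    if not positives:
        raise ValueError("validation set contains no positive cases")
    missed = sum(1 for _, p in positives if p == 0)
    return missed / len(positives)


def update_regresses(y_true, old_pred, new_pred, tolerance=0.02):
    """Return True if the updated model misses noticeably more positive cases.

    `tolerance` is an assumed threshold; a real deployment would set it
    with clinical and statistical input.
    """
    old_fnr = false_negative_rate(y_true, old_pred)
    new_fnr = false_negative_rate(y_true, new_pred)
    return (new_fnr - old_fnr) > tolerance


# Toy data: 1 = condition present, 0 = condition absent.
labels = [1, 1, 1, 1, 0, 0]
before_update = [1, 1, 1, 1, 0, 0]   # all positive cases detected
after_update = [1, 0, 0, 1, 0, 0]    # two positive cases missed

if update_regresses(labels, before_update, after_update):
    print("Hold the release: false-negative rate increased after the update")
```

Running such a comparison as a release gate is one way to turn a potential incident into a hazard that is caught before it reaches patients.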

We also encountered Near Misses, where medical staff reviewed AI-generated results and noticed the system was misclassifying benign pathologies as malignant in patient scans. Medical professionals identified the issue in time to prevent unnecessary and invasive procedures.

In parallel, while identifying Hazards, we discovered that the AI model reflected biases in its training data: it performed significantly better for certain patient groups than for others. This raises serious ethical and regulatory concerns about the misdiagnoses that could result.
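One simple way to surface a performance gap like this is to compute detection sensitivity separately for each patient group and compare the results. The sketch below assumes a hypothetical record format of `(group, true_label, predicted_label)`. The group names and data are invented for illustration and do not come from the project.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: share of truly positive cases the model detected}.

    `records` is an iterable of (group, y_true, y_pred) tuples with labels 0 or 1.
    Groups without any positive case are omitted from the result.
    """
    positives = defaultdict(int)
    detected = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                detected[group] += 1
    return {group: detected[group] / positives[group] for group in positives}


def sensitivity_gap(records):
    """Difference between the best-served and worst-served group."""
    rates = sensitivity_by_group(records)
    return max(rates.values()) - min(rates.values())


# Invented example: group "A" is fully detected, group "B" only half the time.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 1),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 1), ("B", 1, 0),
]
print(sensitivity_by_group(records))
print(sensitivity_gap(records))
```

A large gap does not by itself prove bias, since group sizes and case mix matter, but it flags where a closer review is warranted.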

Conclusion

AI sits at the critical intersection of Cyber Security, privacy, and data protection. Effective AI incident management requires not just technical oversight, but also comprehensive risk governance.

As AI systems become increasingly embedded in business and societal functions, it's essential to implement thorough oversight, secure protocols, and control strategies, along with incident response frameworks that help prevent, detect, and mitigate risks.

In the realm of AI, safeguarding outcomes depends on securing both the technology and the processes that govern it.

Ultimately, managing AI risks is not just about prevention—it’s about cultivating a culture of safety, accountability, and continuous improvement. This allows AI to thrive while minimizing harm. Only by confronting these risks head-on can we build a future where AI serves as a powerful tool for progress, innovation, and social good.
