Anticipating the unthinkable: how can companies prepare for AI incident management?

AI systems are increasingly integrated into business environments and are transforming them, thanks to their powerful capabilities. This growing integration underscores the need for robust, forward-looking incident management strategies. Traditional incident response approaches are no longer sufficient for managing the complexities of emerging, disruptive AI-based technologies.

It’s essential to keep in mind the features that set AI apart, such as its data-driven nature, likelihood of errors, self-learning capabilities, and ability to operate autonomously. Together, these call for rethinking how incidents are managed.

AI systems are not like traditional systems. By their very nature, AI models rely on machine learning algorithms that predict or generate content by identifying patterns in large datasets. This makes them difficult to predict and control, and they often behave in complex ways.

AI is vulnerable to attacks by malicious actors and prone to errors, biases, and unintended behaviors.

The need for a holistic approach to AI management

Cyber incidents impact the confidentiality, availability, or integrity of data or information systems. In the case of Artificial Intelligence (AI), these cybersecurity dimensions are crucial. Moreover, organizations must have broad and visible oversight of AI systems throughout their entire lifecycle, which will facilitate a systemic and holistic approach to incident management.

Following the approval of the EU AI Act, many organizations have started defining their AI policies. They have not always done so effectively, which leaves gaps in their incident management and response strategies.

Every organization should first understand how AI is defined. According to the OECD, an AI system is:

“A machine-based system that, for explicit or implicit objectives, infers how to generate outputs such as predictions, content, recommendations or decisions from received inputs, and which can influence physical or virtual environments. AI systems vary in their levels of autonomy and ability to adapt after deployment.”

Implementing AI policies and strategies

Organizations are increasingly moving away from specifying what is not AI, which can lead to confusion when managing incidents. AI is now integrated into many applications, including traditional ones. It is therefore essential to consider AI’s scope within the entire system, including its dependencies and integrations.

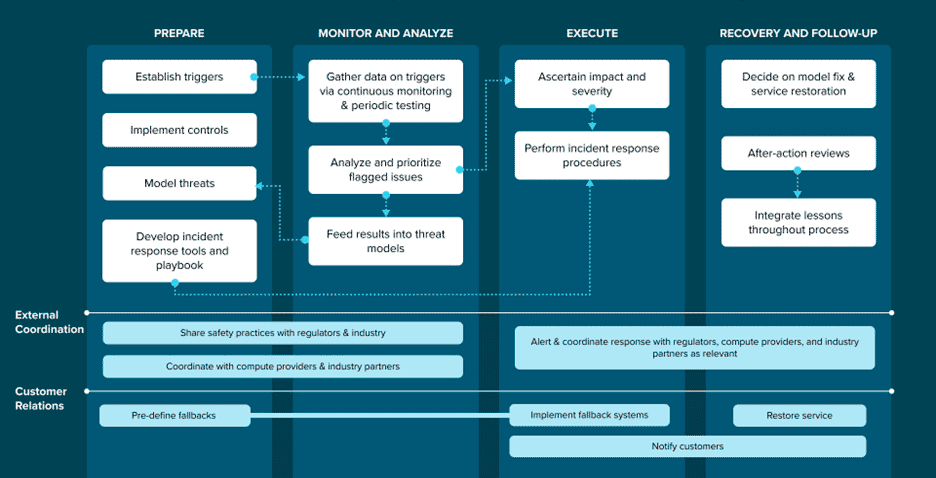

The Institute for AI Policy and Strategy (IAPS) proposes a high-level framework outlining a four-phase process inspired by National Institute of Standards and Technology (NIST) cyber incident response practices. These four phases—preparation, monitoring and analysis, execution, and recovery and follow-up—provide a structured approach to AI incident response.

Source: IAPS. End-to-end process for AI incident management

Phase 1: Preparation

- AI governance model: Establish clear governance models to streamline decision-making in critical situations, aligned with AI Act regulatory practices.

- Risk management: Improve readiness by integrating comprehensive risk assessments into AI security and privacy frameworks. This includes evaluating wider interdependencies across supply chains and ecosystems, whether third-, fourth-, or nth-party.

- Internal organizational capability: Develop tools, procedures, and decision-making frameworks to respond swiftly to incidents. This includes threat identification, corrective and mitigation actions, and defining response protocols.

- Continuity management: Define alternative solutions to reduce service disruption impact on users, ensuring resilience and reliability.

- Focus on cyber resilience: Expand readiness efforts to include proactive defense controls, threat intelligence sharing, and scenario-based simulations for AI-driven security incidents. Cover incidents that are not “cyber” in nature as well; privacy must also be considered.

- Crisis management: Implement a structured response plan that integrates AI incident management with broader strategies for cybersecurity, privacy, and business continuity.

Phase 2: Monitoring and analysis

- Data collection: Ensure adequate visibility of the AI model or system to collect data from various sources and assess its capabilities, behavior, and real-time use.

- Anomaly detection: Once data is collected, it’s crucial to analyze it to detect anomalies and classify incidents, escalating them to decision-makers for timely intervention.

- Integration with threat modeling: Relevant findings may be incorporated into threat modeling processes to strengthen security measures and enhance AI risk assessment maturity.
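The detect, classify, and escalate steps above can be illustrated with a minimal Python sketch. It assumes a single numeric metric collected from the AI system (for example, a count of flagged outputs per hour) and uses a simple z-score against a recent baseline. The names `Incident`, `classify_severity`, and `detect_anomaly`, as well as the thresholds, are hypothetical and are not part of the IAPS framework; a real deployment would likely use richer signals and detection methods.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import List, Optional


@dataclass
class Incident:
    metric: str
    value: float
    z_score: float
    severity: str
    escalate: bool


def classify_severity(z_score: float) -> str:
    """Map a z-score to a severity label (thresholds are illustrative assumptions)."""
    if z_score >= 4.0:
        return "high"
    if z_score >= 3.0:
        return "medium"
    return "low"


def detect_anomaly(
    metric: str,
    baseline: List[float],
    value: float,
    threshold: float = 2.0,
) -> Optional[Incident]:
    """Return an Incident if `value` deviates strongly from the baseline, else None."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # requires at least two baseline points
    if sigma == 0:
        # A flat baseline gives no scale for deviation; skip detection here.
        return None
    z = abs(value - mu) / sigma
    if z < threshold:
        return None
    severity = classify_severity(z)
    # Assumed rule: anything above "low" is escalated to decision-makers.
    return Incident(metric, value, z, severity, escalate=severity != "low")


baseline = [10, 12, 8, 10, 12, 8]
incident = detect_anomaly("flagged_outputs_per_hour", baseline, 16)
if incident is not None and incident.escalate:
    print(f"Escalate {incident.metric}: severity={incident.severity}")
```

Keeping detection and classification in separate functions means the severity thresholds can be tuned, or fed by threat modeling findings, without touching the detection logic.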

Phase 3: Execution

- Decision-making and mitigation actions: Once an AI incident is identified, determine corrective actions and implement necessary adjustments to the model or system.

- Regulatory compliance: Notify, alert, and coordinate with relevant regulatory authorities to ensure alignment with legal and industry standards.

- Impact mitigation: Consider implementing alternative measures and notifying stakeholders based on the model’s or system’s scope, aiming to prevent wider disruption and keep downtime to a minimum.
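As a rough illustration of how the execution phase might be encoded, the sketch below turns an incident's severity and scope into a response plan with corrective actions and notification flags. The `ResponsePlan` type, the `plan_response` function, the action names, and the notification rules are all hypothetical; actual notification duties depend on the applicable law and on each organization's own policies.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ResponsePlan:
    corrective_actions: List[str] = field(default_factory=list)
    notify_regulator: bool = False
    notify_stakeholders: bool = False


def plan_response(
    severity: str,
    affects_personal_data: bool,
    user_facing: bool,
) -> ResponsePlan:
    """Build an illustrative response plan; every rule here is an assumption."""
    plan = ResponsePlan()

    # Decision-making and mitigation actions
    if severity == "high":
        plan.corrective_actions.append("switch to fallback system")
        plan.corrective_actions.append("roll back to last validated model version")
    elif severity == "medium":
        plan.corrective_actions.append("restrict the affected feature")
    else:
        plan.corrective_actions.append("log and keep monitoring")

    # Regulatory compliance (placeholder rule, not legal guidance)
    plan.notify_regulator = severity == "high" or affects_personal_data

    # Impact mitigation: inform stakeholders when users are exposed
    plan.notify_stakeholders = user_facing and severity != "low"
    return plan


plan = plan_response("high", affects_personal_data=False, user_facing=True)
print(plan.corrective_actions, plan.notify_regulator, plan.notify_stakeholders)
```

Writing the rules down in one place, even in this simplified form, makes them easier to review with legal and business teams before an incident occurs.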

Phase 4: Recovery and follow-up

- Recovery and restoration: Focus actions on restoring affected AI models or systems to normal service functionality and maintaining business operations.

- Lessons learned: Conduct a thorough review of the incident to extract key takeaways and improve future response strategies. Use the findings to update policies, enhance AI governance, and apply best practices across the sector.

- External collaboration and cooperation: Sharing insights and information with regulators and industry partners boosts preparedness and aligns response strategies across the ecosystem.
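The four phases can also be sketched as a small state machine, which some teams may find useful for tracking where an incident stands. This is only one possible reading of the process: the `Phase` enum, the `TRANSITIONS` table, and the `advance` function are hypothetical names, and the loop from recovery back to preparation is an assumption based on lessons learned feeding into updated policies.

```python
from enum import Enum


class Phase(Enum):
    PREPARATION = "preparation"
    MONITORING = "monitoring and analysis"
    EXECUTION = "execution"
    RECOVERY = "recovery and follow-up"


# Allowed transitions between phases (assumed to be strictly sequential).
TRANSITIONS = {
    Phase.PREPARATION: {Phase.MONITORING},
    Phase.MONITORING: {Phase.EXECUTION},
    Phase.EXECUTION: {Phase.RECOVERY},
    # Assumption: lessons learned feed back into preparation.
    Phase.RECOVERY: {Phase.PREPARATION},
}


def advance(current: Phase, target: Phase) -> Phase:
    """Move to `target` if the transition is allowed, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


phase = Phase.PREPARATION
for nxt in (Phase.MONITORING, Phase.EXECUTION, Phase.RECOVERY, Phase.PREPARATION):
    phase = advance(phase, nxt)
    print(phase.value)
```

Rejecting out-of-order transitions is a design choice for this sketch; in practice an organization might allow, for example, returning from execution to monitoring while an incident is still unfolding.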

■ Several AI incident databases are useful sources for gaining a broad perspective on current trends, including: MIT AI Incident Tracker; AIID AI Incident Database; AI Controversy Repository; MITRE AI Risk Database; OECD AI Incidents Monitor (AIM); DAIL The Database of AI Litigation; Label Errors Database; Goals, Methods, and Failures (GMF); and the Center for Security and Emerging Technology (CSETv1).

Conclusion

In an era where AI systems are increasingly embedded in critical operations, it is essential to have a solid incident response framework in place.

As AI models and systems become more sophisticated and integrated into critical infrastructures, resilience is no longer optional—it’s fundamental. By implementing a structured approach that includes preparation, monitoring, execution, and post-incident follow-up, organizations can ensure rapid and effective response, while achieving sustainable improvements in security, privacy, and trustworthiness.

The future of AI incidents lies not only in reacting to them, but in anticipating them before they occur.

However, true resilience goes beyond internal controls. The future of AI depends on proactive governance, continuous learning, and a commitment to adaptability, because in the fast-evolving landscape of Artificial Intelligence, the best defense is a prepared, responsive, and forward-looking strategy.

Much like brakes allow cars to go faster by giving drivers control, a well-designed AI incident response plan enables organizations to accelerate AI adoption by ensuring they can quickly address and recover from potential issues. With the right strategies in place, companies can harness the power of AI confidently, knowing they are prepared for the challenges that may arise.
