You can run Generative AI models on your computer: Step-by-step instructions to install LM Studio

Recently David compiled a list of projects that let us run LLMs on modest machines, including personal computers. LLMs have transformed how we interact with and use AI. These powerful models can now run on personal computers, enabling tasks such as translation, data extraction and insights, text analysis and summarization, and content creation.

Some of the most exciting features of available LLMs include their ability to generate coherent and creative text, perform natural language processing tasks like reviewing and summarizing text, and develop intelligent applications that can understand and respond to users’ needs more efficiently.

Step-by-step installation of LM Studio

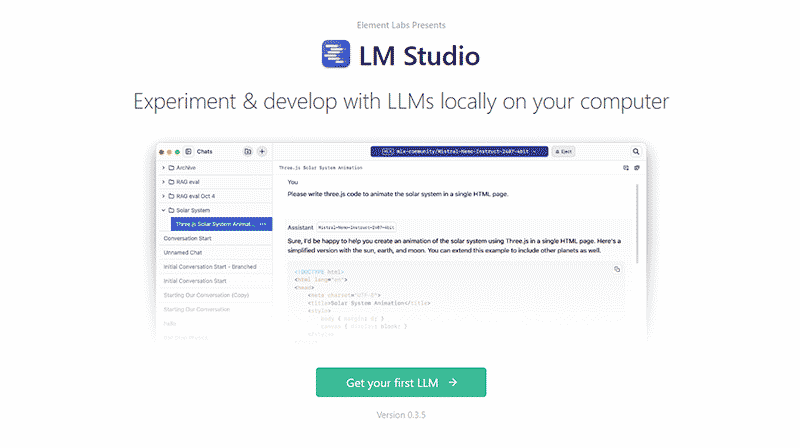

LM Studio is an outstanding tool for getting started with running LLMs locally on your computer, even a relatively modest one, provided it meets the minimum requirements. LM Studio is available for Mac, Windows, and Linux.

💻 For Mac users, LM Studio requires an Apple Silicon processor (M1/M2/M3/M4). Alternatively, Msty is a comprehensive option for running LLMs on Macs with Intel processors.

This guide focuses on installation on a modest Windows computer, though the process is similar for other operating systems.

Step 1: Check system requirements

Before you start, ensure your computer meets the minimum requirements to run LM Studio. The two most critical requirements are processor and RAM. For a Windows PC, 16 GB of RAM and an AVX2-compatible processor are recommended for LM Studio to function correctly.

- Open System information.

- Look for Processor and note down its full name.

- Visit Intel’s ARK website (or the equivalent site for your processor brand, like AMD) and locate your processor using its full name or number.

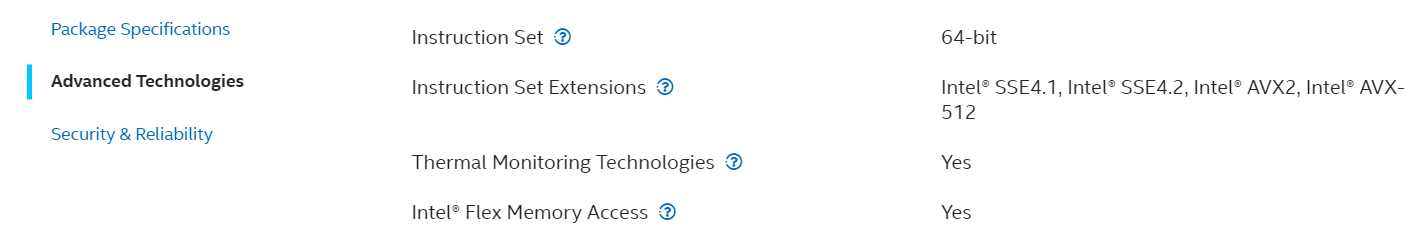

- On Intel’s website, check under Advanced Technologies to see if your processor supports AVX2 in the Instruction Set Extensions section.

For example, an Intel Core i5-1035G1 processor is compatible because it supports AVX2.

■ For small models or initial testing, 8 GB of RAM might suffice. For medium and large models, you’ll need 16 GB, 32 GB, or more: the more RAM you have, the better LM Studio will perform.
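The checks in this step can also be expressed as a short script. This is a minimal sketch, not an official LM Studio tool: the function names are made up for illustration, the thresholds are the ones quoted in this guide, and the optional detection relies on two third-party packages (`py-cpuinfo` and `psutil`) that are assumed to be installed separately.

```python
# Thresholds taken from this guide: AVX2 is needed, 8 GB may do for small
# models, and 16 GB is the recommended amount on a Windows PC.
MIN_RAM_GB = 8
RECOMMENDED_RAM_GB = 16


def assess_machine(cpu_flags, ram_gb):
    """Return a short verdict for a list of CPU flags and an amount of RAM."""
    flags = {flag.lower() for flag in cpu_flags}
    if "avx2" not in flags:
        return "not supported: the processor lacks AVX2"
    if ram_gb < MIN_RAM_GB:
        return "not recommended: less than 8 GB of RAM"
    if ram_gb < RECOMMENDED_RAM_GB:
        return "ok for small models: consider 16 GB or more"
    return "ok: meets the recommended requirements"


def detect_machine():
    """Best-effort detection; returns None if the optional packages are missing."""
    try:
        import cpuinfo  # third-party: pip install py-cpuinfo
        import psutil   # third-party: pip install psutil
    except ImportError:
        return None
    flags = cpuinfo.get_cpu_info().get("flags", [])
    # Rounded, because the operating system reports slightly less than the
    # nominal size of the installed memory.
    ram_gb = round(psutil.virtual_memory().total / 1024**3)
    return flags, ram_gb


# Values entered by hand, e.g. after looking the processor up on the vendor site.
print(assess_machine(["sse4_2", "avx2"], 16))
```

To check the current machine instead of hand-entered values, call `detect_machine()` and pass its two results to `assess_machine`.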

Step 2: Download LM Studio

- Use your web browser to visit the LM Studio website.

- Click on the version you want to download (in this case, Windows).

- Save the installation file to your computer, such as on the Desktop or in the Downloads folder.

■ If using browsers like Edge or Chrome, make sure your download settings don’t block executable files.

Step 3: Install LM Studio

- Run the installation file to start the setup.

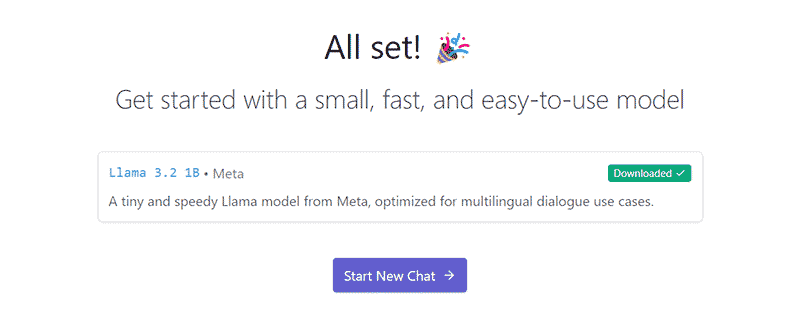

- Follow the installer instructions. You can keep the default installation options, including the suggested model (Llama 3.2 1B by Meta), or choose to download and install DeepSeek R1 instead.

- Once the installation is complete, you can select the option to Start New Chat.

Step 4: First launch of LM Studio

- When you open LM Studio for the first time, it welcomes you.

- By default it uses the model installed during setup. This model is a good starting point because it requires less space and RAM, making it ideal for initial testing.

- You can interact with the model in your preferred language.

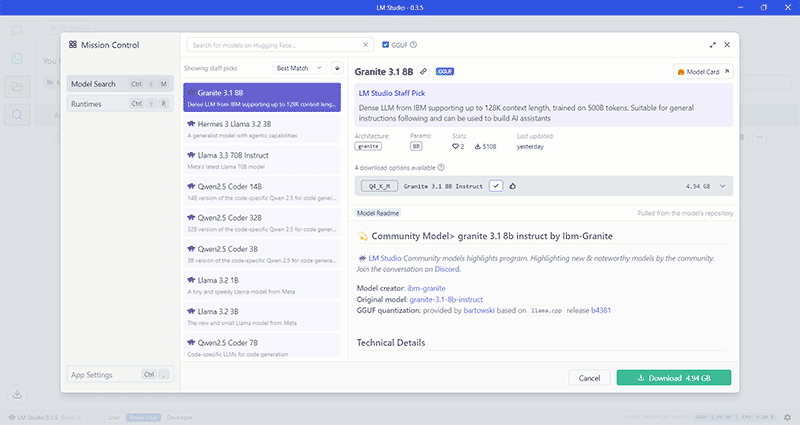

- You can also add other available models, such as Granite by IBM, using the search icon (magnifying glass) on the left.

- Click on the model you want to download.

- Ensure you’ve selected the latest version (usually at the top). Once you’ve selected the correct model, click the Download button.

- The download will begin and you’ll see a progress bar. Wait for it to complete.

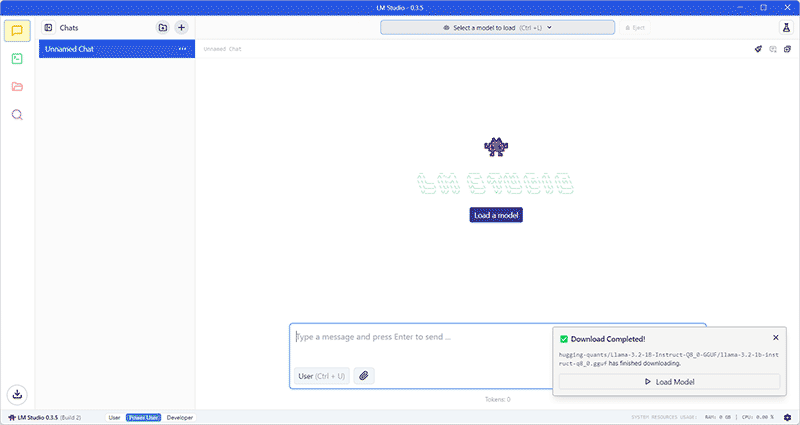

Step 5: Run the downloaded model

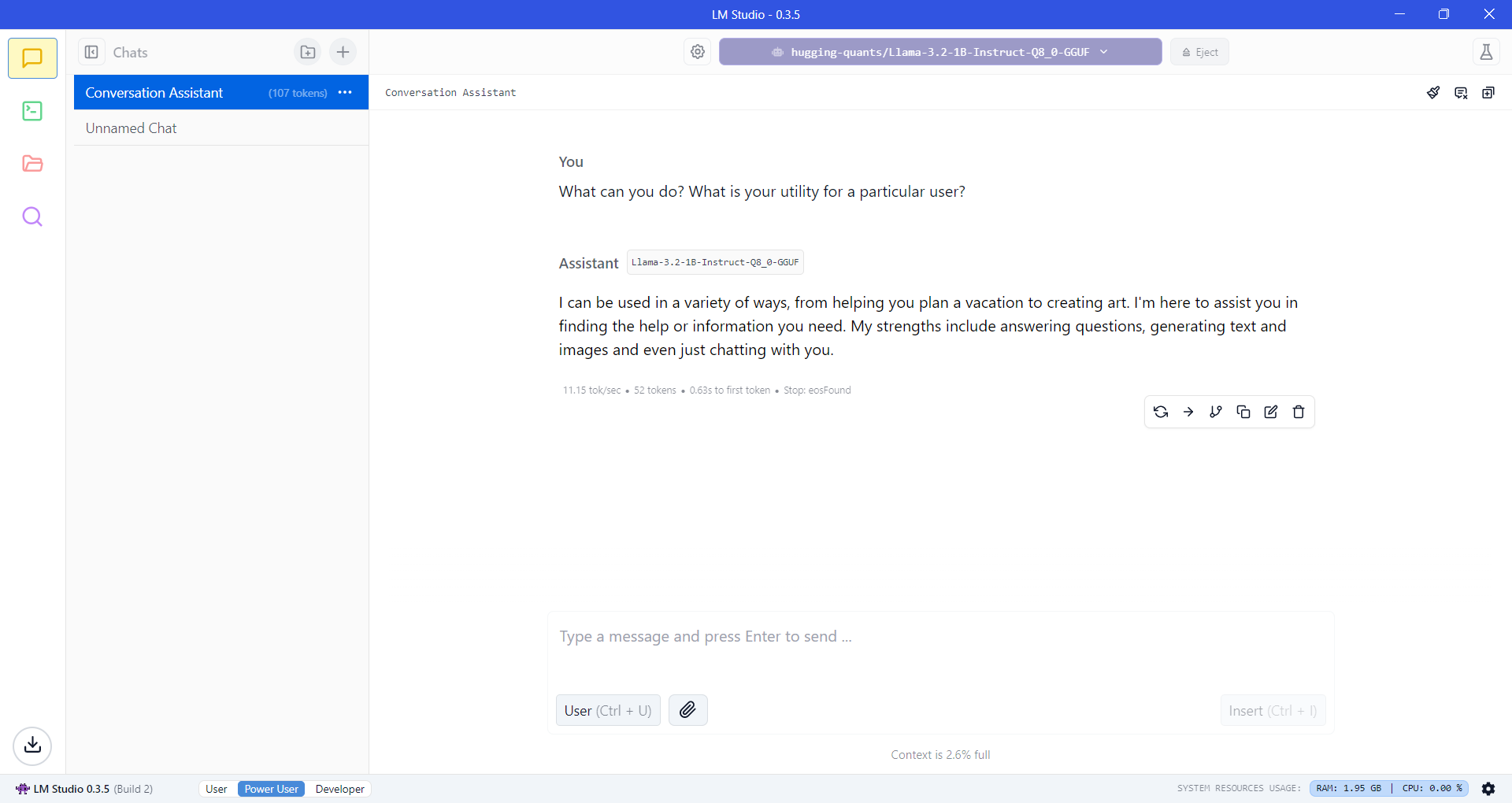

- Once the model is downloaded, return to the Chat tab in the sidebar.

- If you have more than one downloaded model, choose one from the Select a model to chat with dropdown menu.

- You can now type messages in the text box, and LM Studio will execute the model to generate responses.

■ Download additional models in LM Studio to compare results and explore capabilities.

Remember that each model takes up disk space, and some require more processor power or RAM, which may affect performance depending on your computer's specifications.
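As a rough guide to how much space a model needs, the size of its weights is approximately the number of parameters multiplied by the bits stored per weight. The sketch below applies that rule of thumb. The function name and the example sizes are illustrative, and the result covers the weights only: actual RAM use is higher because the runtime and the conversation context also need memory.

```python
def estimate_weights_gb(parameters_billions, bits_per_weight):
    """Approximate size of the model weights in gigabytes (1 GB = 10**9 bytes)."""
    bytes_total = parameters_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Illustrative model sizes and common precisions (4-bit, 8-bit, 16-bit).
for params_b in (1, 8, 70):
    for bits in (4, 8, 16):
        size = estimate_weights_gb(params_b, bits)
        print(f"{params_b}B parameters at {bits}-bit: about {size:.1f} GB")
```

By this estimate, a 1B-parameter model stored at 4 bits per weight needs about 0.5 GB, which is why small models are a comfortable starting point on modest hardware.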

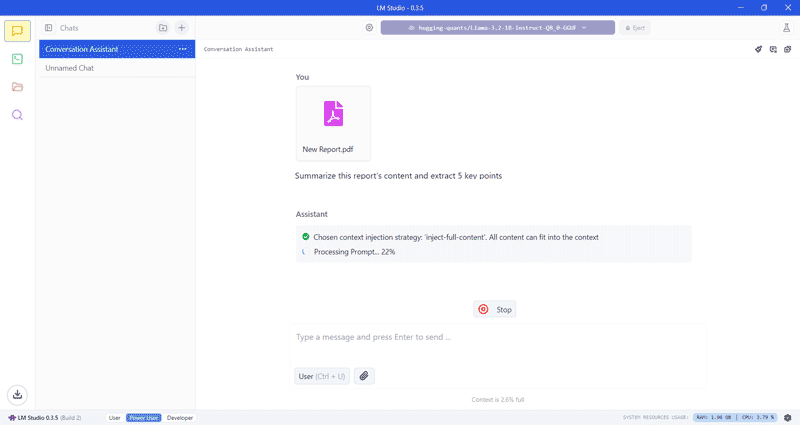

File uploads and RAG

LLMs are limited to the data they were trained on and cannot incorporate new or private information independently.

The Retrieval Augmented Generation (RAG) feature addresses this by letting you upload personal and private documents from your computer, which the model can then reference. This yields more precise and relevant answers, tailored to the content you provide.

- LM Studio allows up to 5 files at a time, with a combined maximum size of 30 MB. Supported formats include PDF, DOCX, TXT, and CSV.

- When asking LM Studio about these documents, be specific and include as many details as possible to help the model retrieve the most relevant information.

- The LLM will analyze your query and documents to give you a response. Experiment with different documents, queries, and prompts.

—Example: If you upload a specific contract or private agreement in PDF format, you can attach it to your query and ask the model for details about its terms.
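The general idea behind RAG can be illustrated with a toy example: find the passages that best match the question, then place them in the prompt ahead of the question. This sketch is not how LM Studio implements the feature. It scores passages by simple word overlap, whereas real systems typically use embeddings, and the contract excerpts and function names are invented for illustration.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, chunks, top_n=2):
    """Rank chunks by how many query words they share; keep the best top_n."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:top_n] if score > 0]


def build_prompt(query, chunks):
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Use only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical contract excerpts standing in for an uploaded document.
chunks = [
    "The lease term is 12 months starting on 1 March.",
    "The monthly rent is 900 euros, payable by the 5th.",
    "Pets are allowed with written permission from the landlord.",
]
question = "What is the monthly rent and when is it payable?"
print(build_prompt(question, retrieve(question, chunks)))
```

The more specific the question, the more words it shares with the right passage, which is one reason detailed queries tend to retrieve better context.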

Developer Mode and advanced options in LM Studio

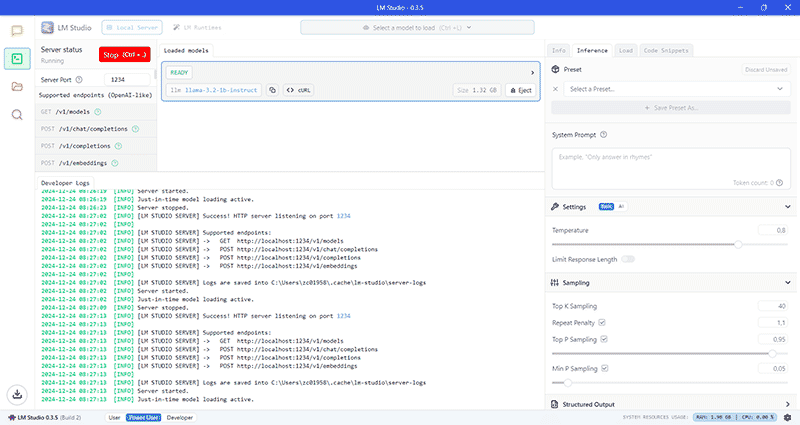

Developer mode in LM Studio offers more options for configuring your model, such as integrating external applications, optimizing performance, or adjusting response accuracy.

- Local server: Run the model in a server environment for access from multiple devices or integrations.

- Temperature: Controls the randomness of model responses. Lower values generate more consistent and predictable outputs, while higher values introduce variability and 'creativity' (with an increased risk of incoherence).

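- Top-K and Top-P: Determine how many candidate options the model considers at each step when generating text, balancing response variability and precision. Top-K keeps only the K most likely options, while Top-P keeps the smallest set of options whose combined probability reaches P, so the number considered adapts to how confident the model is.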

- System Prompt: Customize the initial context or instructions given to the LLM, such as setting tone, style, roleplay, or behavior ('You are an expert on [topic]', 'Provide concise answers', or 'Include examples in your responses').

—Example: If you're using the model to summarize text, you can set the temperature to a low value to make the responses more concise and predictable. Or, if you use LM Studio to write professional emails, you can tell it to 'Act as a professional assistant. Make sure your messages are clear, formal, and action-oriented.'
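To see what the sampling settings do, it helps to apply them to a small set of candidate words. The following is a simplified sketch of the general technique, not LM Studio's internal code. The scores for the next word are invented, and a real sampler would also renormalize the kept options before drawing one at random.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [value / temperature for value in logits.values()]
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}


def top_k_filter(probs, k):
    """Keep only the k most likely tokens."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    return dict(ranked[:k])


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose probabilities sum to at least p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = {}, 0.0
    for token, prob in ranked:
        kept[token] = prob
        cumulative += prob
        if cumulative >= p:
            break
    return kept


# Hypothetical scores for the word that follows "The weather is".
logits = {"sunny": 2.0, "cold": 1.0, "purple": -1.0, "loud": -2.0}

for temperature in (0.5, 1.0, 2.0):
    probs = softmax(logits, temperature)
    print(temperature, {token: round(prob, 2) for token, prob in probs.items()})

probs = softmax(logits)
print("top-k=2:", list(top_k_filter(probs, 2)))
print("top-p=0.9:", list(top_p_filter(probs, 0.9)))
```

At a low temperature almost all of the probability goes to the most likely word, while a high temperature spreads it out and gives unlikely words such as "purple" a real chance of being chosen.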

■ Changing these options can affect performance (RAM and CPU usage, for example) as well as the quality, relevance, and adaptability of the responses.
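Once the local server is running, other programs can send it requests that combine a system prompt and a temperature. The sketch below rests on assumptions not stated in this guide: that the server listens at `http://localhost:1234/v1` and accepts OpenAI-style chat requests. The model name is a placeholder, and the helper names are illustrative. Check the address and model identifier shown in your own LM Studio window.

```python
import json
import urllib.request

# Assumption: default local server address with an OpenAI-compatible
# chat endpoint. Adjust it if your setup shows a different address.
BASE_URL = "http://localhost:1234/v1"


def build_payload(user_message, system_prompt, temperature=0.2, model="local-model"):
    """Assemble a chat request with a system prompt and a temperature."""
    return {
        "model": model,  # placeholder; use the identifier shown in LM Studio
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
    }


def ask(payload, base_url=BASE_URL, timeout=120):
    """POST the payload to the chat endpoint and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


payload = build_payload(
    "Summarize: the meeting moved from Monday to Wednesday at 10:00.",
    system_prompt="Act as a professional assistant. Provide concise answers.",
)
print(json.dumps(payload, indent=2))
# With the server running and a model loaded, send it with: print(ask(payload))
```

Keeping the request assembly separate from the network call makes it easy to inspect exactly what will be sent before the server is started.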

Advantages of running LLMs on your personal computer

- Privacy: Work with personal documents like contracts, notes, or letters without uploading them to external services, protecting sensitive information.

- Autonomy: No need for an internet connection or reliance on external servers, ensuring uninterrupted access.

- Optimization: You can customize the model for specific tasks, such as report automation or content generation related to your field.

- Cost savings: Avoid subscription fees or limitations of free-tier cloud versions if you don’t need extensive computational power.

- Learning: Experimenting with models and configurations enhances your understanding of how to leverage this technology effectively. Learning this technology can help you come up with creative ideas and solve problems.

—Example: If you’re organizing emails or personal documents in PDF format, you can use LM Studio with RAG to quickly locate specific messages related to a topic or date, saving time and effort.

o Experiment with other models available in LM Studio, but remember that larger models may require more RAM and processing power.

o Adjust LM Studio settings (such as memory usage) based on your hardware and desired results.

o Keep LM Studio updated to the latest version to benefit from new features and bug fixes.
